If you’re starting your journey in Natural Language Processing (NLP), you’ve probably stumbled upon the term “tokenization” and wondered what all the fuss is about. What do you do when you need to explain a complex concept or topic to a beginner in that field? You naturally break it down into smaller digestible pieces. That’s exactly what tokenization does for computers trying to understand human language.

What is Tokenization?

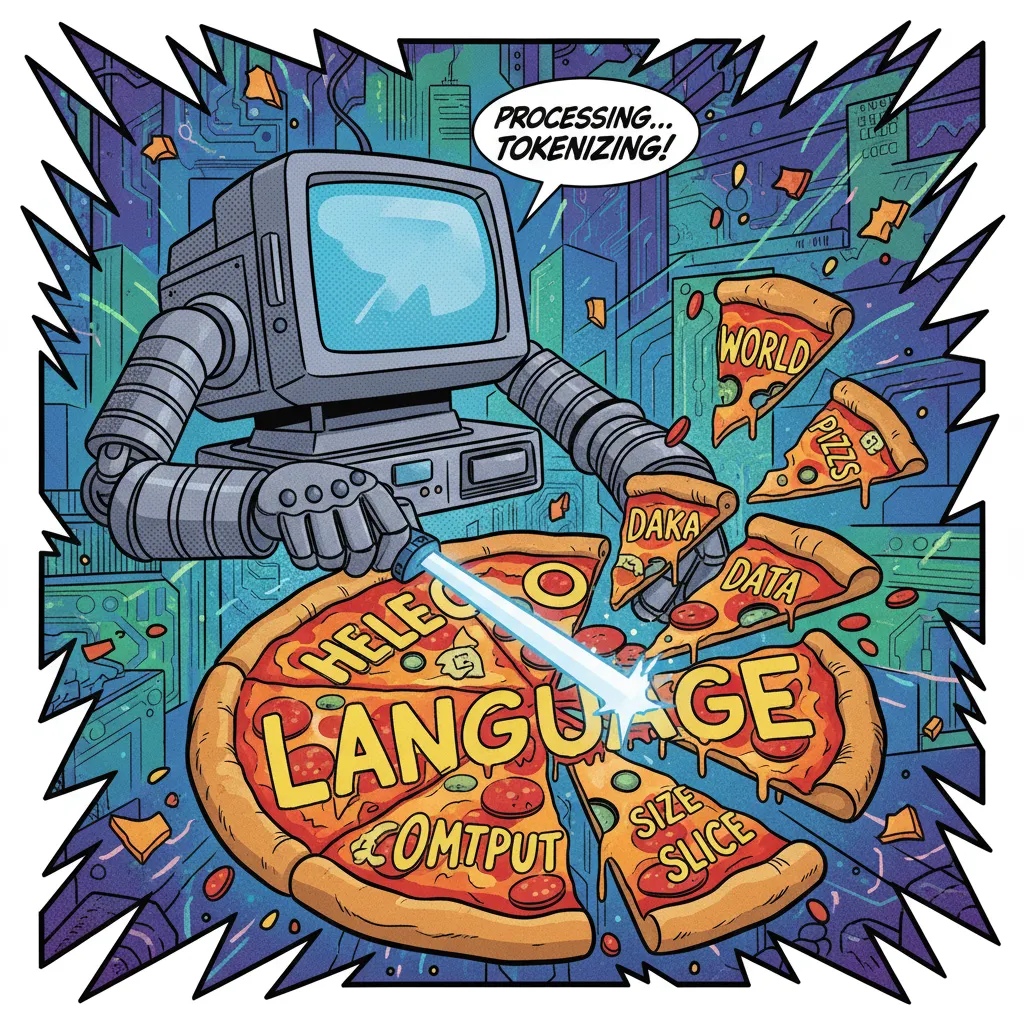

Think of tokenization like cutting a pizza into slices. You can’t eat a whole pizza in one bite, so you slice it into manageable pieces. Similarly, computers can’t process an entire paragraph or book at once. They need bite-sized pieces to work with. In technical terms, tokenization is the process of breaking down text into smaller units called tokens. These tokens become the basic building blocks that computers use to understand and process language. It’s like giving a computer a set of LEGO blocks instead of one giant, unwieldy piece of plastic.

Here’s a simple example. Take the sentence:

“The cat sat on the mat”

A tokenizer might break this into:

[“The”, “cat”, “sat”, “on”, “the”, “mat”]

Each word becomes a separate token that the computer can analyze individually.

In Transformer-based models, tokenization is a preprocessing step that happens before any data reaches the model. If you haven’t yet fully understood how Transformers work, check out my blog “A Beginner’s Guide to the Logic of Transformers: Powering Modern AI”. Transformer models accept only tensors as input, while your input data is probably raw text. You cannot convert raw text directly into tensors, because tensors hold numbers and must have a rectangular shape. This means that if you input multiple sentences as a batch, all sentences need to be the same length, which is rarely the case. So you perform a series of steps to convert the raw text into a format the Transformer model can accept. Together, these steps make up the tokenization pipeline. Here is a step-by-step breakdown of how tokenization gets your data into a Transformer.

Step 1: Breaking down large text into small chunks called tokens. Each token may be a word, subword, or character based on the type of tokenization process performed.

Step 2: The tokens are then encoded to their numerical representation.

Step 3: The numerically encoded sequences may then be adjusted in length through padding or truncation, so that every sentence in a multi-sequence batch has the same length. This is necessary because tensors must have a rectangular shape.

Step 4: Finally, the numerically encoded and padded or truncated sequences are ready to be converted to tensors. These tensors can then be input to the Transformer model.
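To make the four steps concrete, here is a minimal sketch in plain Python. It is not how any particular library does it: the helper names (`tokenize`, `build_vocab`, `encode_batch`), the `[PAD]` and `[UNK]` entries, and the whitespace splitting are all assumptions chosen to keep the example small.

```python
def tokenize(text):
    # Step 1: break text into tokens (here: lowercase words split on whitespace)
    return text.lower().split()

def build_vocab(sentences):
    # Reserve ID 0 for padding and ID 1 for unknown tokens (an assumed convention)
    vocab = {"[PAD]": 0, "[UNK]": 1}
    for sentence in sentences:
        for token in tokenize(sentence):
            vocab.setdefault(token, len(vocab))
    return vocab

def encode_batch(sentences, vocab, max_length):
    batch = []
    for sentence in sentences:
        # Step 2: map each token to its numerical ID
        ids = [vocab.get(token, vocab["[UNK]"]) for token in tokenize(sentence)]
        # Step 3: truncate long sequences, then pad short ones
        ids = ids[:max_length]
        ids += [vocab["[PAD]"]] * (max_length - len(ids))
        batch.append(ids)
    # Step 4: every row now has the same length, so the batch is rectangular
    return batch

sentences = ["The cat sat on the mat", "I love coding"]
vocab = build_vocab(sentences)
batch = encode_batch(sentences, vocab, max_length=5)
print(batch)
# Output: [[2, 3, 4, 5, 2], [7, 8, 9, 0, 0]]
```

The first sentence loses its last token to truncation and the second gains two padding IDs. A rectangular list like this is what a framework call such as `torch.tensor(batch)` would turn into an actual tensor.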

Different Tokenization Techniques

Just like there are different ways to cut a pizza, there are several approaches to tokenization. Let me break them down for you:

1. Word Tokenization: The Classic Approach

This is the most intuitive method — splitting text at spaces and punctuation. It’s like cutting a sandwich along obvious lines.

Example:

“I love coding!” → [“I”, “love”, “coding”, “!”]

Word tokenization works great for languages like English where words are clearly separated by spaces. But here’s where it gets tricky. What about contractions like “don’t” or “can’t”? Different tokenizers handle these differently:

- Some split them: [“do”, “n’t”]

- Others keep them whole: [“don’t”]

And then there’s the vocabulary problem. Imagine trying to teach a computer every single word that exists. Not only would that be massive, but what happens when someone invents a new word or makes a typo? The system wouldn’t know what to do.
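Here is a rough sketch of word tokenization and the vocabulary problem, using only Python’s built-in `re` module. The function name `word_tokenize_simple` and the tiny vocabulary are made up for illustration, and the regular expression is just one of many possible splitting rules.

```python
import re

def word_tokenize_simple(text):
    # \w+ matches a run of letters or digits; [^\w\s] matches one punctuation mark
    return re.findall(r"\w+|[^\w\s]", text)

print(word_tokenize_simple("I love coding!"))
# Output: ['I', 'love', 'coding', '!']

# This rule splits contractions in yet another way
print(word_tokenize_simple("don't"))
# Output: ['don', "'", 't']

# The vocabulary problem: a typo produces a token the system has never seen
vocab = {"i", "love", "coding", "!"}
tokens = word_tokenize_simple("I love codding!")
unknown = [token for token in tokens if token.lower() not in vocab]
print(unknown)
# Output: ['codding']
```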

2. Character Tokenization

Character tokenization is like using a microscope on your text. Instead of words, you break everything down to individual letters and symbols:

Example:

“Hello” → [“H”, “e”, “l”, “l”, “o”]

This approach has some advantages. Typos? No problem, the system can still work with individual characters. Made-up words? Handled. But there’s a catch. Remember trying to read a book letter by letter as a kid? It’s slow and loses meaning. Computers face the same issue. Processing becomes inefficient, and understanding context gets harder.
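In Python, character tokenization needs nothing more than `list()`. The short sketch below also compares sequence lengths to show where the inefficiency comes from. The example sentence is reused from earlier in this post.

```python
text = "Hello"
char_tokens = list(text)
print(char_tokens)
# Output: ['H', 'e', 'l', 'l', 'o']

# The same sentence becomes a much longer sequence at character level
sentence = "The cat sat on the mat"
word_count = len(sentence.split())
char_count = len(list(sentence))  # spaces count as characters too
print(word_count, char_count)
# Output: 6 22
```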

3. Subword Tokenization

This is where things get clever. Subword tokenization finds a middle ground between words and characters. It’s like having a smart pizza cutter that knows exactly where to slice for the perfect bite size.

Example:

“unbelievable” → [“un”, “believ”, “able”]

See what happened there? The tokenizer recognized meaningful chunks. “Un” is a common prefix meaning “not,” and “able” is a common suffix. This approach keeps common words intact while breaking down rare or complex words into familiar pieces.

This is the secret sauce behind modern language models like GPT and BERT. They can handle words they’ve never seen before by understanding the pieces that make them up.

4. Sentence Tokenization: The Big Picture

Sometimes you need to zoom out and look at complete thoughts. Sentence tokenization splits text at sentence boundaries:

Example:

“I love NLP. It’s fascinating!” → [“I love NLP.”, “It’s fascinating!”]

This sounds simple until you realize that periods don’t always mean sentence endings. What about “Dr. Smith” or “U.S.A.”? Smart sentence tokenizers need to understand context to avoid silly mistakes.
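The abbreviation problem can be illustrated with a small rule-based splitter. This is only a sketch: the `ABBREVIATIONS` set and the `split_sentences` function are hypothetical, and real sentence tokenizers rely on far richer rules or trained models.

```python
# A tiny, hand-picked list of abbreviations (an assumption for this example)
ABBREVIATIONS = {"dr.", "mr.", "mrs.", "ms.", "prof.", "u.s.a."}

def split_sentences(text):
    sentences, current = [], []
    for word in text.split():
        current.append(word)
        # A word ends a sentence if it finishes with . ! or ?
        # and is not a known abbreviation
        if word[-1] in ".!?" and word.lower() not in ABBREVIATIONS:
            sentences.append(" ".join(current))
            current = []
    if current:
        sentences.append(" ".join(current))
    return sentences

print(split_sentences("I love NLP. It’s fascinating!"))
# Output: ['I love NLP.', 'It’s fascinating!']

print(split_sentences("Dr. Smith lives in the U.S.A. today. He likes NLP."))
# Output: ['Dr. Smith lives in the U.S.A. today.', 'He likes NLP.']
```

Note the trade-off: this version never splits after an abbreviation, so a sentence that genuinely ends with “U.S.A.” would be glued to the next one. That is exactly the kind of case where context is needed.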

The Clever Algorithms Behind Modern Tokenization

Now, let’s peek under the hood at some popular tokenization methods.

Byte-Pair Encoding (BPE)

Imagine you’re watching how people form friendships. You notice that certain people always hang out together. BPE works similarly with characters in text.

Here’s a simplified version of how it works:

- Start with individual characters as tokens

- Find the pair of tokens that appear together most often (like best friends)

- Merge them into a single token

- Repeat until you have enough tokens

For instance, if “th” appears together frequently in English text, BPE would create a “th” token. This is why models using BPE can efficiently handle common patterns while still being flexible with new words.
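The merge loop described above can be sketched in a few lines of Python. This is a toy version for learning purposes, not the implementation used by any real model: the function names and the five-word corpus are invented, and production BPE adds details such as end-of-word markers or byte-level handling.

```python
from collections import Counter

def most_frequent_pair(words):
    # Count every adjacent pair of tokens, weighted by word frequency
    pairs = Counter()
    for symbols, freq in words.items():
        for left, right in zip(symbols, symbols[1:]):
            pairs[(left, right)] += freq
    return pairs.most_common(1)[0][0] if pairs else None

def merge_pair(words, pair):
    # Replace each occurrence of the pair with one merged token
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged

def train_bpe(corpus, num_merges):
    # Start with individual characters as tokens
    words = Counter(tuple(word) for word in corpus.split())
    merges = []
    for _ in range(num_merges):
        pair = most_frequent_pair(words)
        if pair is None:
            break
        merges.append(pair)
        words = merge_pair(words, pair)
    return merges, words

merges, words = train_bpe("the this that then thin", num_merges=1)
print(merges)
# Output: [('t', 'h')]
```

After one merge, every word in the toy corpus starts with a single “th” token, for example `('th', 'e')`. Running more merges keeps growing the vocabulary, which is the “repeat until you have enough tokens” step.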

WordPiece

WordPiece, used by models like BERT, is like a greedy reader who always tries to grab the longest word they recognize.

When it sees “playing”:

- It looks for the longest match from the beginning: “play”

- Then handles what’s left: “ing”

- Result: [“play”, “##ing”]

The “##” prefix tells us this piece continues from the previous token. It’s like using a hyphen to show word breaks.
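The greedy matching above can be sketched as follows. The vocabulary here is a made-up handful of pieces, and `wordpiece_tokenize` is a hypothetical helper that only covers the tokenization step, not how the vocabulary is learned.

```python
def wordpiece_tokenize(word, vocab, unk="[UNK]"):
    tokens, start = [], 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining piece first, then shrink it
        while end > start:
            piece = word[start:end]
            if start > 0:
                piece = "##" + piece  # mark pieces that continue a word
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            return [unk]  # no piece fits, so the whole word is unknown
        tokens.append(match)
        start = end
    return tokens

vocab = {"play", "##ing", "##ed", "un", "##believ", "##able"}

print(wordpiece_tokenize("playing", vocab))
# Output: ['play', '##ing']

print(wordpiece_tokenize("unbelievable", vocab))
# Output: ['un', '##believ', '##able']

print(wordpiece_tokenize("xyz", vocab))
# Output: ['[UNK]']
```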

SentencePiece: The Universal Translator

What makes SentencePiece special is that it treats spaces just like any other character. This is brilliant for languages like Chinese or Japanese where words aren’t separated by spaces.

Instead of struggling with where words begin and end, SentencePiece treats everything uniformly. It’s like having a pizza cutter that doesn’t care about toppings. It just makes consistent cuts regardless of what’s on top.
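To see what “treating spaces like any other character” means in practice, here is a simplified sketch that does not use the SentencePiece library itself. SentencePiece marks whitespace with the visible symbol “▁” (U+2581). The helper names and the hand-picked split below are assumptions, and details such as the marker the real library usually adds at the start of the text are left out.

```python
SPACE_MARK = "\u2581"  # the "▁" symbol SentencePiece uses for whitespace

def mark_spaces(text):
    # Spaces become ordinary visible characters inside the text
    return text.replace(" ", SPACE_MARK)

def join_pieces(pieces):
    # Because spaces were kept, joining the pieces restores the original text
    return "".join(pieces).replace(SPACE_MARK, " ")

marked = mark_spaces("Hello world")
pieces = [marked[:5], marked[5:]]  # a hand-picked split, just for illustration
print(pieces)
# Output: ['Hello', '▁world']

print(join_pieces(pieces))
# Output: Hello world
```

Since the space lives inside a token, the original text can be rebuilt exactly from the pieces, with no language-specific rules about where words start.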

Unigram

The Unigram model takes a different approach. It’s like a strategic game player who calculates the odds of each move.

Starting with a large vocabulary, it:

- Assigns a probability to each token based on how often it appears

- Removes the tokens whose absence hurts the model’s ability to represent the text the least

- Keeps the most valuable tokens and repeats until the vocabulary reaches the target size

This creates a balanced vocabulary that captures meaning efficiently.
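Once each token has a probability, a Unigram tokenizer can pick the most likely way to split a word. The sketch below shows only that step, with probabilities that are invented for illustration. The function name `unigram_segment` is hypothetical, and the training and pruning process described above is not included.

```python
import math

def unigram_segment(text, probs):
    n = len(text)
    # best[i] holds (best log-probability of text[:i], start of its last token)
    best = [(-math.inf, None)] * (n + 1)
    best[0] = (0.0, None)
    for end in range(1, n + 1):
        for start in range(end):
            piece = text[start:end]
            if piece in probs and best[start][0] > -math.inf:
                score = best[start][0] + math.log(probs[piece])
                if score > best[end][0]:
                    best[end] = (score, start)
    if best[n][0] == -math.inf:
        return None  # the vocabulary cannot cover this text
    # Walk backwards through the stored start positions to recover the tokens
    tokens, end = [], n
    while end > 0:
        start = best[end][1]
        tokens.append(text[start:end])
        end = start
    return tokens[::-1]

# Made-up probabilities: a few subwords plus single letters as a fallback
probs = {"un": 0.1, "believ": 0.05, "able": 0.1,
         "u": 0.01, "n": 0.01, "b": 0.01, "e": 0.02, "l": 0.01,
         "i": 0.01, "v": 0.01, "a": 0.01}

print(unigram_segment("unbelievable", probs))
# Output: ['un', 'believ', 'able']
```

Splitting into single letters is also possible with this vocabulary, but multiplying many small probabilities gives a much lower score, so the three-piece split wins.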

Real-World Magic: Where Tokenization Comes Alive

Let me share some concrete examples of tokenization in action that you’ve probably encountered today without realizing it:

Your Morning Google Search: When you searched for “coffee shops near me,” Google didn’t just look for that exact phrase. It tokenized it into [“coffee”, “shops”, “near”, “me”] and understood you wanted nearby caffeine fixes, not coffee history.

That Helpful Customer Service Chatbot: When you typed “I can’t log into my account,” the chatbot tokenized your message to identify the problem (“can’t”, “log”, “account”) and route you to password recovery.

Your Spam Filter Hero: That suspicious email about a “limited time offer” got caught because tokenization helped identify typical spam patterns. The combination of tokens like [“limited”, “time”, “offer”, “click”, “now”] raised red flags.

Common Pitfalls and How to Dodge Them

Tokenization looks simple, but a few classic traps catch beginners again and again. Here’s how to avoid them:

The Punctuation Puzzle: Don’t assume punctuation should always be separate tokens. “Dr.” isn’t two tokens, and “$50” might work better as one token than two.

The Language Assumption: What works for English might fail spectacularly for other languages. German compound words, Arabic script, and Chinese characters all need different approaches.

The Context Blindness: “Apple” the fruit and “Apple” the company are the same token to basic tokenizers. More advanced systems need additional layers to understand context.

Simple Python Code

Here is some beginner-friendly code to get your hands dirty:

```python
# Basic word tokenization with Python's split()
text = "Let's learn about tokenization!"
simple_tokens = text.split()
print(simple_tokens)
# Output: ["Let's", 'learn', 'about', 'tokenization!']

# Using NLTK for smarter tokenization
import nltk
from nltk.tokenize import word_tokenize

# Download required data (newer NLTK releases may ask for 'punkt_tab' instead)
nltk.download('punkt')
smart_tokens = word_tokenize(text)
print(smart_tokens)
# Output: ['Let', "'s", 'learn', 'about', 'tokenization', '!']
```

Conclusion

Tokenization is your first step into the fascinating world of Natural Language Processing. It’s the bridge between human language and computer understanding. Whether you’re building a chatbot, analyzing customer feedback, or just curious about how Siri works, understanding tokenization gives you insight into the magic behind the scenes.

The beauty of tokenization lies in its simplicity and power. It takes the infinite complexity of human language and breaks it into manageable pieces that computers can process. And now you understand how that magic happens.

So next time you use a search engine, talk to a voice assistant, or wonder how spam filters work, you’ll know it all starts with tokenization — slicing up language into bite-sized pieces that machines can digest. Welcome to the world of NLP, where every word counts, literally!