Imagine you’re a master chef who’s spent years perfecting thousands of recipes. Now, someone asks you to specialize in making the perfect sushi. You wouldn’t forget everything you know about cooking. Instead, you’d adapt your existing skills to excel at this specific cuisine. That’s essentially what fine-tuning does for Large Language Models. It takes a model that already understands language broadly and teaches it to excel at specific tasks without starting from scratch.

This comprehensive guide is the first part of a two-part series designed to take you from complete beginner to confident practitioner in LLM fine-tuning. In this foundational installment, we’ll explore:

- The theoretical underpinnings that make fine-tuning possible.

- Parameter-Efficient Fine-Tuning (PEFT) methods, demystified, with deep insights into LoRA and QLoRA, the techniques that have made AI customization feasible on modest hardware.

- The mathematics behind these methods (without overwhelming complexity), how the different approaches compare, and enough understanding to be ready for hands-on implementation.

Understanding the Foundation: What Makes Fine-Tuning Essential

Large Language Models like GPT, LLaMA, and BERT have transformed how we interact with artificial intelligence. These models, trained on vast amounts of text data, possess remarkable general language capabilities. Yet while they excel at general tasks, they often struggle with specialized domains like medical diagnosis, legal document analysis, or company-specific customer service.

Think of a pre-trained LLM as a brilliant university graduate with broad knowledge but lacking industry-specific expertise. Sure, they understand language patterns and can hold conversations on various topics, but ask them to analyze a medical report or draft a legal contract, and they might falter. This gap between general capability and specialized performance is where fine-tuning becomes invaluable.

The traditional approach to fine-tuning involves updating all parameters in the model, essentially retraining every single connection in the neural network. For a model like GPT-3 with 175 billion parameters, this means adjusting all 175 billion values and keeping gradients and optimizer state for each of them in memory, which requires computational resources that most organizations simply don’t have. It’s like renovating an entire skyscraper when you only need to redesign a few floors.
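
To put rough numbers on “massive”, here is a back-of-the-envelope sketch. It assumes a commonly cited rule of thumb for mixed-precision training with the Adam optimizer, about 16 bytes of state per parameter, and ignores activations entirely, so treat the result as an order-of-magnitude estimate rather than a measured figure.

```python
# Rough memory estimate for full fine-tuning with mixed-precision Adam.
# Assumption: ~16 bytes of training state per parameter (a rule of thumb);
# activations, buffers, and framework overhead are ignored.
BYTES_PER_PARAM = {
    "fp16 weights": 2,
    "fp16 gradients": 2,
    "fp32 master weights": 4,
    "fp32 Adam first moment": 4,
    "fp32 Adam second moment": 4,
}


def full_finetune_memory_gb(num_params: float) -> float:
    """Approximate training-state memory in gigabytes (1 GB = 1e9 bytes)."""
    total_bytes = num_params * sum(BYTES_PER_PARAM.values())
    return total_bytes / 1e9


if __name__ == "__main__":
    gpt3_params = 175e9
    print(f"~{full_finetune_memory_gb(gpt3_params):,.0f} GB")  # ~2,800 GB
```

That is roughly 2.8 TB of state before counting activations: at least 35 GPUs with 80 GB each just to hold it.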

The Revolutionary Approach: Parameter-Efficient Fine-Tuning (PEFT)

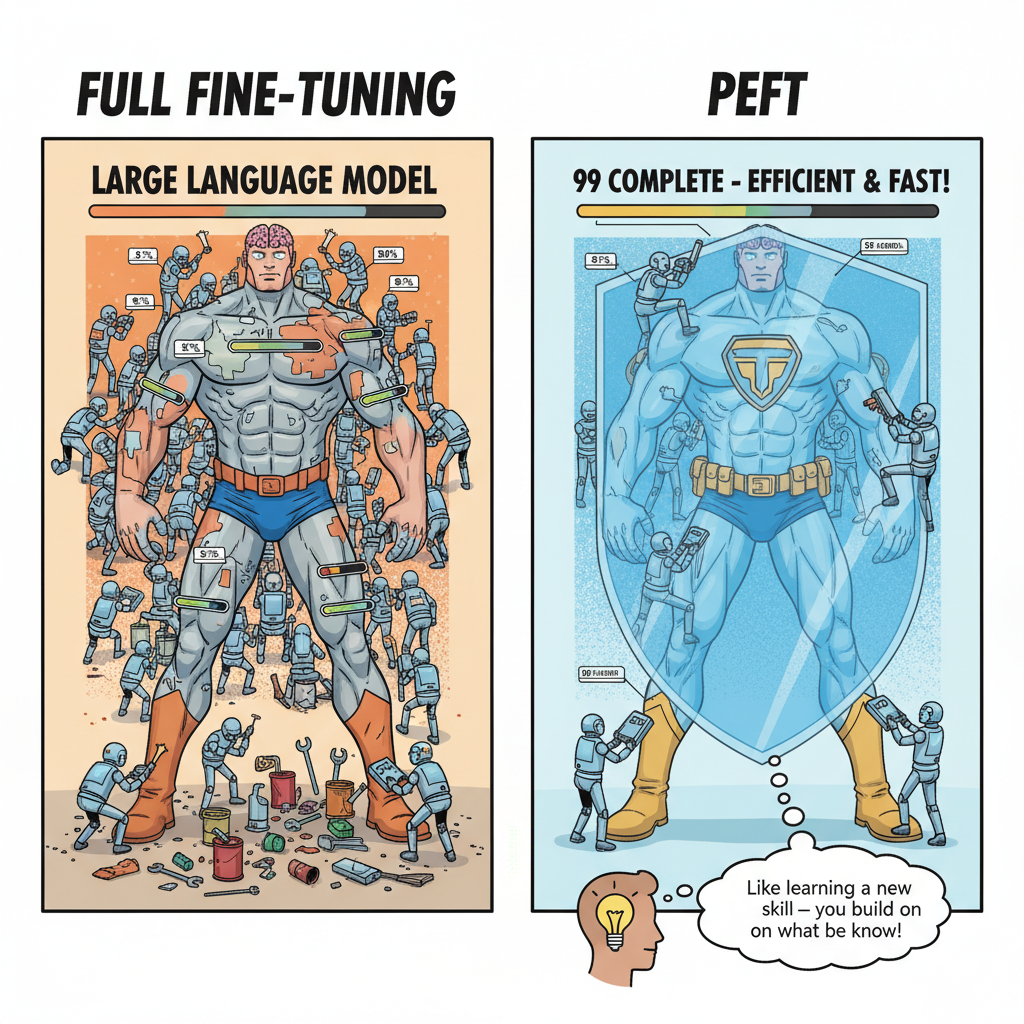

This is where Parameter-Efficient Fine-Tuning (PEFT) methods come into play, fundamentally changing how we adapt these massive models. PEFT techniques recognize that we don’t need to modify everything to achieve specialization. Instead, we can strategically update a tiny fraction of the model’s parameters while keeping the rest frozen.

The philosophy behind PEFT is elegantly simple yet powerful. When adapting a model to a new task, most of the general language understanding remains relevant. What changes are specific patterns, terminology, and response styles unique to the target domain. By training only a small set of parameters, either a selected subset of the existing weights or a few new ones added for the purpose, PEFT methods can often come close to full fine-tuning performance while using a fraction of the resources.
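
The bookkeeping behind “update a tiny fraction, freeze the rest” fits in a few lines. In frameworks such as PyTorch, freezing usually means disabling gradient tracking on the pre-trained tensors; the standard-library sketch below only tallies parameter counts for a hypothetical toy model whose layer names, sizes, and `task_adapter` parameters are all made up for illustration.

```python
from math import prod

HIDDEN = 768     # illustrative hidden size
NUM_LAYERS = 12  # illustrative depth
RANK = 8         # size of the hypothetical small trainable add-on


def build_toy_model() -> dict[str, tuple[tuple[int, ...], bool]]:
    """Map each (hypothetical) parameter name to (shape, trainable)."""
    params = {"embeddings": ((30_000, HIDDEN), False)}
    for i in range(NUM_LAYERS):
        # Pre-trained weights: frozen.
        params[f"layer{i}.attention"] = ((4, HIDDEN, HIDDEN), False)
        params[f"layer{i}.ffn"] = ((2, HIDDEN, 4 * HIDDEN), False)
        # Small task-specific parameters: the only ones that get trained.
        params[f"layer{i}.task_adapter"] = ((2, HIDDEN, RANK), True)
    return params


def trainable_fraction(params: dict[str, tuple[tuple[int, ...], bool]]) -> float:
    """Share of all parameters that are marked trainable."""
    total = sum(prod(shape) for shape, _ in params.values())
    trainable = sum(prod(shape) for shape, is_trainable in params.values() if is_trainable)
    return trainable / total


if __name__ == "__main__":
    print(f"trainable: {trainable_fraction(build_toy_model()):.2%}")  # trainable: 0.14%
```

With these illustrative sizes, only about 0.14% of the parameters would receive updates.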

Consider how you learn a new skill. You don’t relearn how to walk, talk, or think. You build upon your existing knowledge, adding specific techniques and practices. PEFT methods mirror this natural learning process, making AI adaptation more aligned with how humans acquire specialized knowledge.

Deep Dive into LoRA

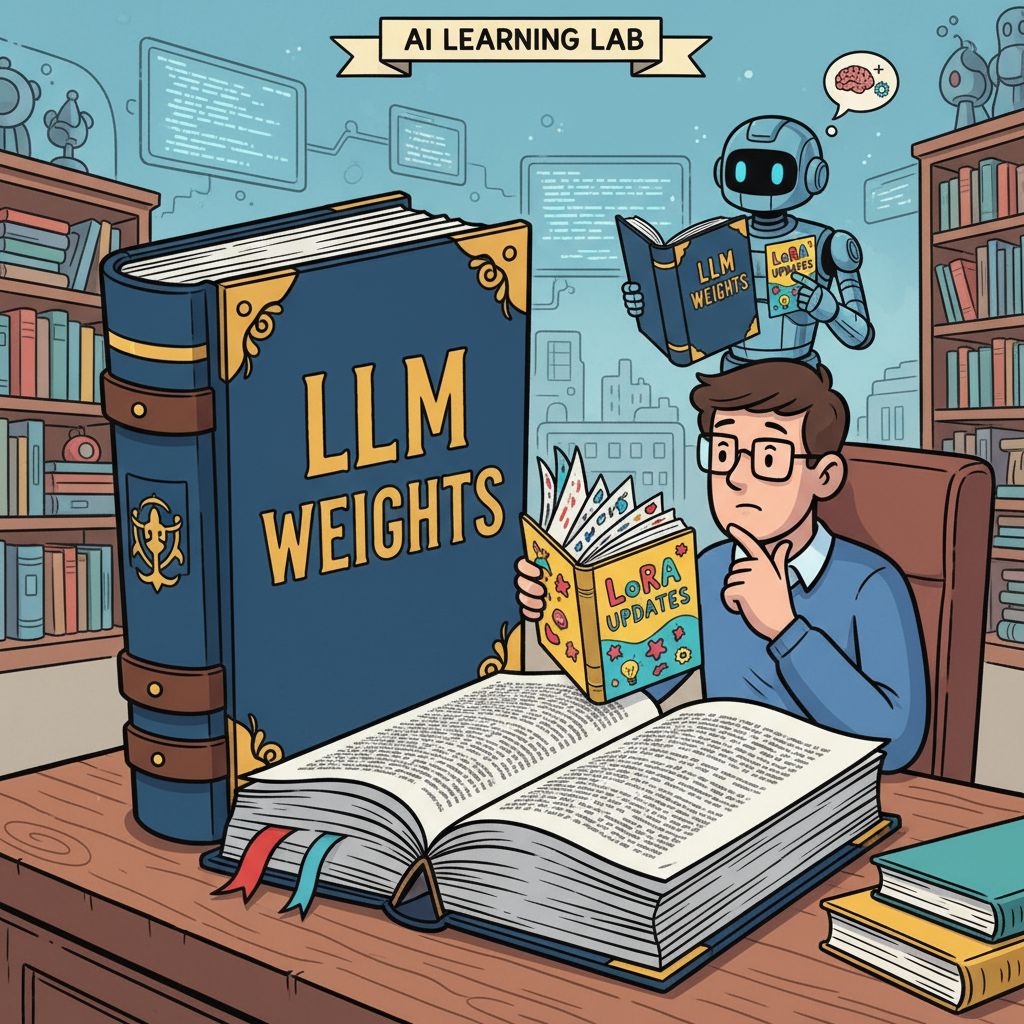

Low-Rank Adaptation, commonly known as LoRA, represents one of the most significant breakthroughs in making LLM fine-tuning accessible. To understand LoRA’s brilliance, let’s first grasp what happens during traditional fine-tuning.

When we fine-tune a model traditionally, we update massive weight matrices. Imagine spreadsheets with millions of cells, each containing a number that needs adjustment. LoRA’s key insight is that the change a new task requires is usually low-rank: it doesn’t need millions of independent adjustments. Instead, the meaningful changes can be captured by two much smaller matrices that, multiplied together, approximate the full update.

Here’s an analogy that makes this clearer: imagine you’re editing a massive encyclopedia. Instead of rewriting every page, you create a thin supplementary booklet with corrections and additions. When someone reads the encyclopedia, they reference both the original and your supplement to get the updated information. That’s essentially how LoRA works. It creates small “adapter” matrices that modify the model’s behavior without changing the original weights.
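
The supplement analogy maps onto a simple forward pass: the layer computes the frozen output W x and adds a scaled low-rank correction B(Ax). The pure-Python sketch below is a teaching aid under stated assumptions (plain lists instead of tensors, an arbitrary small random initialization for A). Following the original LoRA formulation, B starts at zero and the update is scaled by alpha / rank.

```python
import random


def matvec(m: list[list[float]], x: list[float]) -> list[float]:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


class LoRALinear:
    """A frozen weight W (d_out x d_in) plus a trainable low-rank update B @ A."""

    def __init__(self, weight: list[list[float]], rank: int, alpha: float, seed: int = 0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight  # the "encyclopedia": never modified below
        # The "supplement": A (rank x d_in) gets small random values (init scale is an
        # assumption); B (d_out x rank) starts at zero, so B @ A is zero at the start.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x: list[float]) -> list[float]:
        base = matvec(self.weight, x)               # original path: W x
        update = matvec(self.B, matvec(self.A, x))  # adapter path: B (A x)
        return [b + self.scale * u for b, u in zip(base, update)]


if __name__ == "__main__":
    layer = LoRALinear([[1.0, 2.0], [3.0, 4.0]], rank=1, alpha=1.0)
    print(layer.forward([1.0, 1.0]))  # [3.0, 7.0]: identical to W x while B is zero
```

Training would update only `A` and `B`; because `weight` is never touched, the original model can be recovered simply by dropping the adapter.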

The Mathematics Made Simple

Without diving too deep into linear algebra, here’s what LoRA does mathematically:

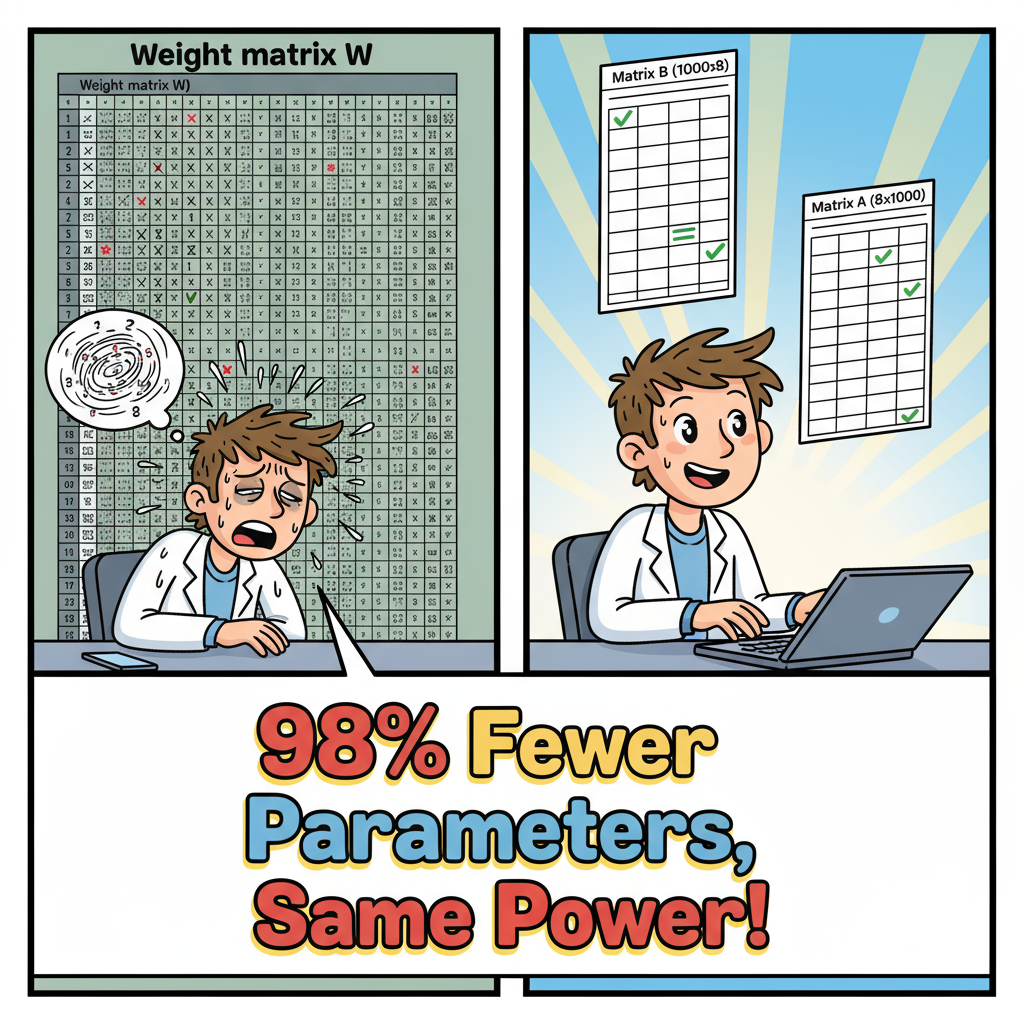

Instead of updating a weight matrix W directly (which might be 1000×1000 = 1 million parameters), LoRA keeps W frozen and represents the update as the product of two smaller matrices, here with a rank of 8:

- Matrix B (1000×8 = 8,000 parameters)

- Matrix A (8×1000 = 8,000 parameters)
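
In symbols, with W of shape d × k and rank r (the example uses d = k = 1000 and r = 8), the adapted weight and the parameter counts are:

$$
W' = W + \Delta W = W + BA, \qquad B \in \mathbb{R}^{d \times r},\quad A \in \mathbb{R}^{r \times k},\quad r \ll \min(d, k)
$$

$$
\underbrace{d \cdot k}_{\text{full update}} = 1000 \cdot 1000 = 1{,}000{,}000
\qquad \text{vs.} \qquad
\underbrace{r\,(d + k)}_{\text{LoRA}} = 8 \cdot (1000 + 1000) = 16{,}000
$$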

The total trainable parameters drop from 1 million to just 16,000 — a reduction of over 98%. Yet remarkably, this compact representation captures most of the important changes needed for task adaptation.

Understanding LoRA’s Hyperparameters

Two critical parameters control LoRA’s behavior: rank and alpha.

Rank is the shared inner dimension of those smaller matrices (the 8 in the example above). Think of rank as the level of detail in your adaptation. A higher rank (like 64 or 128) allows for more nuanced changes, useful for complex tasks or when your target domain differs significantly from the model’s training data. A lower rank (like 4 or 8) often suffices for simpler adaptations. It’s like choosing between a fine-tip pen and a broad brush. Both can create art, but they offer different levels of precision.
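
Because B has d × r entries and A has r × k, the number of trainable parameters grows linearly with rank. This small sketch, reusing the 1000×1000 example, shows how the budget changes across the ranks mentioned above:

```python
def lora_param_count(d: int, k: int, rank: int) -> int:
    """Trainable parameters in B (d x rank) plus A (rank x k)."""
    return d * rank + rank * k


if __name__ == "__main__":
    d = k = 1000  # the 1000 x 1000 weight matrix from the example
    full = d * k
    for rank in (4, 8, 64, 128):
        n = lora_param_count(d, k, rank)
        print(f"rank {rank:>3}: {n:>7,} trainable params ({n / full:.1%} of {full:,})")
```

Rank 8 gives the 16,000 parameters (1.6%) from the example, while rank 128 already reaches 256,000 (25.6%) for this single matrix.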

Alpha acts as a volume control for the adaptations. In the standard LoRA formulation, the update BA is multiplied by alpha divided by rank before it is added to the original weights, so the two settings only make sense together. A common heuristic is to set alpha equal to the rank or to twice the rank: if rank is 8, alpha would typically be 8 or 16, giving a multiplier of 1 or 2. This scaling aims to give the adaptations appropriate influence without overwhelming the original model’s knowledge.
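
The sketch below assumes the alpha / rank multiplier described in the original LoRA paper (Hugging Face PEFT exposes the two settings as `r` and `lora_alpha`). It shows why the heuristic ties alpha to rank: keeping alpha proportional to rank holds the multiplier steady as rank changes, whereas holding alpha fixed makes the multiplier shrink as rank grows.

```python
def lora_scale(alpha: float, rank: int) -> float:
    """Multiplier applied to the low-rank update B @ A (alpha / rank)."""
    return alpha / rank


if __name__ == "__main__":
    for rank in (4, 8, 16, 64):
        same = lora_scale(alpha=rank, rank=rank)        # alpha = rank
        double = lora_scale(alpha=2 * rank, rank=rank)  # alpha = 2 * rank
        fixed = lora_scale(alpha=16, rank=rank)         # alpha held at 16
        print(f"rank={rank:>2}: alpha=rank -> {same}, alpha=2*rank -> {double}, alpha=16 -> {fixed}")
```

The first two columns stay at 1.0 and 2.0 for every rank, while the fixed-alpha column falls from 4.0 at rank 4 to 0.25 at rank 64.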

QLoRA: Taking Efficiency to the Next Level

While LoRA dramatically reduces the number of trainable parameters, QLoRA (Quantized LoRA) pushes the boundaries even further by combining LoRA with quantization techniques.

Imagine you’re storing a library of books. Regular storage keeps every word exactly as written. Quantization is like keeping a condensed edition in which each passage is reduced to the nearest of a small set of standard phrasings. You can still read the books (expanding them when needed), and they take up much less shelf space, though a little fine detail is lost along the way.

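QLoRA applies this principle by storing the frozen base model in a 4-bit format instead of the standard 16-bit format. This cuts the memory needed for the base model’s weights by about 75% (4 bits instead of 16, plus a small overhead for quantization constants) while largely preserving the model’s capabilities. During each forward and backward pass, the weights are dequantized on the fly to 16-bit precision for the calculations. The stored 4-bit copy is never updated, since the base model stays frozen; only the small LoRA adapters, kept in higher precision, are trained.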
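
To make “stored compressed, expanded for calculations” concrete, here is a sketch of simple blockwise absmax quantization to 4-bit integer codes. This is a deliberately plain scheme, not the NF4 format QLoRA actually uses, and the example weights and the 7-billion-parameter model size are hypothetical.

```python
def quantize_block(weights: list[float]) -> tuple[list[int], float]:
    """Map a block of floats to 4-bit signed codes in [-7, 7], scaled by the block's max |w|."""
    absmax = max(abs(w) for w in weights) or 1.0  # guard against an all-zero block
    codes = [round(w / absmax * 7) for w in weights]
    return codes, absmax


def dequantize_block(codes: list[int], absmax: float) -> list[float]:
    """Recover approximate float values for computation."""
    return [c / 7 * absmax for c in codes]


def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Storage for the weights alone (1 GB = 1e9 bytes), ignoring quantization constants."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    block = [0.12, -0.5, 0.03, 0.33]  # hypothetical weights
    codes, absmax = quantize_block(block)
    print(codes)  # [2, -7, 0, 5]
    print(dequantize_block(codes, absmax))  # close to, but not exactly, the originals
    print(weight_memory_gb(7e9, 16), weight_memory_gb(7e9, 4))  # 14.0 3.5
```

Each value comes back slightly off (by at most absmax / 14 in this scheme), which is the precision traded for a 4× smaller footprint: the hypothetical 7B-parameter model drops from about 14 GB to about 3.5 GB of weights, before counting the per-block constants.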

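The magic of QLoRA lies in its 4-bit NormalFloat (NF4) data type, designed specifically for neural network weights. Pretrained weights tend to follow a roughly normal (bell-shaped) distribution, so instead of spacing its 16 levels evenly, NF4 places them at quantiles of a normal distribution: levels are packed densely near zero, where most weights lie, and spread out in the tails. This makes better use of 4 bits than evenly spaced levels and preserves more of the information that matters for model performance.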
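
The core idea can be illustrated by building quantization levels from normal-distribution quantiles with Python’s `statistics.NormalDist`. This is a simplified, symmetric construction under our own assumptions (including the arbitrary `tail` cut-off); the real NF4 table is asymmetric and includes an exact zero, so treat this only as a picture of the idea.

```python
from statistics import NormalDist


def normal_quantile_levels(num_levels: int = 16, tail: float = 0.02) -> list[float]:
    """Place levels at evenly spaced quantiles of N(0, 1), rescaled to [-1, 1].

    Simplified illustration, NOT the exact NF4 table: symmetric and without an
    exact-zero level. `tail` keeps probabilities away from 0 and 1, where the
    normal quantile is infinite.
    """
    dist = NormalDist(mu=0.0, sigma=1.0)
    step = (1.0 - 2.0 * tail) / (num_levels - 1)
    raw = [dist.inv_cdf(tail + i * step) for i in range(num_levels)]
    peak = max(abs(v) for v in raw)
    return [v / peak for v in raw]


def uniform_levels(num_levels: int = 16) -> list[float]:
    """Evenly spaced levels on [-1, 1], for comparison."""
    return [-1.0 + 2.0 * i / (num_levels - 1) for i in range(num_levels)]


if __name__ == "__main__":
    for name, levels in (("normal-quantile", normal_quantile_levels()),
                         ("uniform", uniform_levels())):
        gaps = [b - a for a, b in zip(levels, levels[1:])]
        print(f"{name:>15}: center gap {gaps[len(gaps) // 2]:.3f}, edge gap {gaps[-1]:.3f}")
```

The normal-quantile levels sit much closer together near zero than at the edges, whereas the uniform levels are the same distance apart everywhere.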

Double Quantization: The Extra Mile

QLoRA introduces another innovation called double quantization. Weights are quantized in small blocks, and each block stores its own scaling constant; across billions of weights, those constants add up. Double quantization quantizes the constants themselves, a second round of compression applied to the bookkeeping data of the first. This technique saves additional memory with minimal impact on performance.
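
The savings can be worked out from the block sizes reported in the QLoRA paper, which this sketch assumes: without double quantization, one 32-bit constant per block of 64 weights; with it, 8-bit constants plus one 32-bit constant for every 256 of them.

```python
def overhead_bits_per_weight(double_quant: bool, block: int = 64, second_block: int = 256) -> float:
    """Extra storage, in bits per weight, spent on quantization constants."""
    if not double_quant:
        # One 32-bit constant for every `block` weights.
        return 32 / block
    # Each constant is itself stored in 8 bits, and one 32-bit constant is kept
    # for every `second_block` of those 8-bit constants.
    return 8 / block + 32 / (block * second_block)


if __name__ == "__main__":
    plain = overhead_bits_per_weight(double_quant=False)
    double = overhead_bits_per_weight(double_quant=True)
    saved_gb = (plain - double) * 65e9 / 8 / 1e9  # for a 65B-parameter model
    print(f"{plain:.3f} vs {double:.3f} bits/weight, saving ~{saved_gb:.1f} GB")
```

That is 0.5 versus about 0.127 extra bits per weight, a saving of roughly 0.373 bits per parameter, or about 3 GB for a 65-billion-parameter model.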

Adapter Modules and Other PEFT Techniques

Beyond LoRA, several other PEFT methods deserve attention:

Adapter Modules were among the first PEFT techniques. They insert small trainable layers inside the blocks of the frozen pre-trained model. Think of them as translators sitting between departments in a company, helping specialized teams communicate with the broader organization. While effective, adapters typically train more parameters than LoRA (roughly 1–3% versus 0.1–1% in common configurations).
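
A common adapter design is a bottleneck: project the hidden state down to a small dimension, apply a nonlinearity, project back up, and add the result to the input. The pure-Python sketch below assumes a ReLU nonlinearity, omits biases, and zero-initializes the up-projection so the adapter starts as an identity mapping; these are illustrative choices, not a specific paper’s recipe.

```python
import random


class BottleneckAdapter:
    """Down-project -> ReLU -> up-project, then add the result back to the input."""

    def __init__(self, hidden: int, bottleneck: int, seed: int = 0):
        rng = random.Random(seed)
        # Trainable weights (biases omitted for brevity).
        self.down = [[rng.gauss(0.0, 0.02) for _ in range(hidden)] for _ in range(bottleneck)]
        # Zero-initialized up-projection: the adapter starts out as an identity mapping.
        self.up = [[0.0] * bottleneck for _ in range(hidden)]

    def num_params(self) -> int:
        return sum(len(row) for row in self.down) + sum(len(row) for row in self.up)

    def __call__(self, h: list[float]) -> list[float]:
        z = [max(0.0, sum(w * x for w, x in zip(row, h))) for row in self.down]
        delta = [sum(w * zi for w, zi in zip(row, z)) for row in self.up]
        return [x + d for x, d in zip(h, delta)]  # residual connection


if __name__ == "__main__":
    adapter = BottleneckAdapter(hidden=768, bottleneck=16)
    print(adapter.num_params())  # 24576 trainable parameters in this one adapter
```

Only `down` and `up` would be trained; with a hidden size of 768 and a bottleneck of 16, each adapter holds 2 × 768 × 16 = 24,576 parameters, while the frozen layer around it stays untouched.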

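Prefix Tuning takes a different approach: rather than changing any weights, it learns a short sequence of continuous vectors (the prefix) that the model attends to as if they came before every input. Imagine prefacing every conversation with specific context that guides the model’s responses. In the original method, these learned vectors are prepended to the attention keys and values at every layer rather than inserted as ordinary text tokens, and they steer the model toward task-specific behaviors.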
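
A minimal single-head, single-query sketch of the mechanism: the frozen layer’s own keys and values are left as they are, and trainable prefix key/value vectors are simply prepended before attention is computed. Shapes and values are toy assumptions.

```python
import math


def attention(query: list[float], keys: list[list[float]], values: list[list[float]]) -> list[float]:
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract the max for numerical stability
    total = sum(exps)
    weights = [e / total for e in exps]
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]


def attention_with_prefix(query: list[float], keys: list[list[float]], values: list[list[float]],
                          prefix_keys: list[list[float]], prefix_values: list[list[float]]) -> list[float]:
    """Prepend trainable prefix vectors; the frozen model's own keys/values are unchanged."""
    return attention(query, prefix_keys + keys, prefix_values + values)


if __name__ == "__main__":
    query, keys, values = [1.0, 0.0], [[1.0, 0.0]], [[1.0, 0.0]]
    print(attention(query, keys, values))  # [1.0, 0.0]
    print(attention_with_prefix(query, keys, values, [[1.0, 0.0]], [[0.0, 1.0]]))  # [0.5, 0.5]
```

In the toy usage, the prefix shifts the output from [1.0, 0.0] to [0.5, 0.5] without modifying any model weight; training would adjust only the prefix vectors.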

BitFit represents the minimalist approach, updating only the bias terms in the model. If the model’s weights are like the slopes of hills, biases are like shifting the entire landscape up or down. Despite updating less than 0.1% of parameters, BitFit can achieve surprisingly good results for certain tasks.
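
BitFit’s idea in miniature: a linear layer whose weight matrix is frozen, trained by gradient descent on its bias vector alone. The toy data and learning rate are hypothetical, and the gradient shown is for mean squared error.

```python
def predict(weights: list[list[float]], bias: list[float], x: list[float]) -> list[float]:
    """Linear layer: y = W x + b."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def mse(pred: list[float], target: list[float]) -> float:
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def bitfit_step(weights, bias, x, target, lr=0.1):
    """One gradient-descent step on the bias only; `weights` is read but never changed.

    For mean squared error, dL/db_i = 2 * (pred_i - target_i) / n.
    """
    pred = predict(weights, bias, x)
    n = len(pred)
    return [b - lr * 2 * (p - t) / n for b, p, t in zip(bias, pred, target)]


if __name__ == "__main__":
    weights = [[1.0, 0.0], [0.0, 1.0]]  # frozen
    bias = [0.0, 0.0]                   # the only trainable parameters
    x, target = [1.0, 2.0], [2.0, 3.0]  # hypothetical toy example
    print(f"loss before: {mse(predict(weights, bias, x), target):.4f}")
    for _ in range(50):
        bias = bitfit_step(weights, bias, x, target)
    print(f"loss after:  {mse(predict(weights, bias, x), target):.4f}, bias = {bias}")
```

Here the bias alone is enough to close the gap because the target is just a shift of the current output, which is exactly the “move the whole landscape up or down” kind of change that biases can express.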

Conclusion: Democratizing AI Customization

The era of accessible AI customization has arrived. Whether you’re building specialized medical assistants, financial advisors, or customer service systems, the tools and techniques covered here provide the foundation for transforming general-purpose language models into powerful, domain-specific solutions.

In our next blog post, we’ll roll up our sleeves and dive into the practical side of this exciting field. We’ll walk through a complete hands-on tutorial where you’ll learn to fine-tune your first large language model using LoRA — from setting up your environment and preparing your dataset to training, evaluation, and deployment. You’ll see real code, work through common troubleshooting scenarios, and by the end, have your own specialized model ready for action. Theory is powerful, but there’s nothing quite like the satisfaction of seeing your custom AI come to life through practical implementation.