Picture this: You’ve just been handed a spreadsheet with 50,000 rows and 20 columns of customer data, your manager wants insights by Friday, and you’re staring at numbers that might as well be hieroglyphics. If this scenario makes your palms sweat, you’re not alone. Every successful data scientist has stood at this exact crossroads, wondering how to turn raw data into meaningful insights before building the impressive machine learning models everyone talks about. The secret weapon that separates thriving data scientists from those who struggle isn’t advanced algorithms or complex mathematics; it’s Exploratory Data Analysis (EDA), the systematic art of having meaningful conversations with your data. This comprehensive guide takes you from complete beginner to confident data explorer, covering the essential Python libraries, a step-by-step workflow, practical code examples, and common pitfalls to avoid. Rather than overwhelming you with theory, we’ll focus on hands-on learning that shows you not just the “how” but the “why” behind each technique, so that by the end you’ll have the confidence to approach any dataset systematically and uncover insights that others miss. Ready to go from being intimidated by data to speaking its language fluently?

What You’ll Learn in This Complete Guide:

- Understanding EDA Fundamentals — What makes exploratory data analysis so powerful and why it’s non-negotiable for data science success

- Essential Python Toolkit — Master pandas, NumPy, Matplotlib, and Seaborn libraries for comprehensive data analysis

- Complete EDA Workflow — Follow a systematic 6-stage process from understanding your data’s structure to generating actionable insights

- Data Quality Assessment — Learn to identify and handle missing values, duplicates, outliers, and data inconsistencies

- Univariate Analysis Techniques — Explore individual variables through descriptive statistics, distributions, and effective visualizations

- Bivariate & Multivariate Analysis — Uncover relationships between variables using correlation matrices, scatter plots, and advanced techniques

- Common EDA Pitfalls — Avoid the mistakes that derail projects and learn professional best practices

What is Exploratory Data Analysis?

Exploratory Data Analysis is the systematic process of investigating datasets to understand their main characteristics, uncover patterns, spot anomalies, and test assumptions using statistical summaries and visualizations.

EDA is about discovery: it’s where hypotheses are born, not proven. The beauty of EDA lies in its detective-like approach, because you are uncovering stories hidden within the data. Each visualization tells you something new, and each summary statistic reveals another piece of the puzzle.

Why EDA is Non-Negotiable for Data Scientists

Data Quality Assurance

Real-world data is messy, incomplete, and often inconsistent. EDA helps you identify missing values, duplicates, outliers, and errors that could sabotage your models later. A frequently cited industry figure puts the share of a data scientist’s time spent on data preparation as high as 80%, and EDA is where this critical work begins.

Pattern Recognition

EDA reveals underlying structures in your data that aren’t immediately obvious. Whether it’s seasonal trends in sales data, correlations between customer demographics and purchasing behaviour, or clusters of similar observations, these patterns become the foundation for feature engineering and model selection.

Assumption Validation

Many statistical models and machine learning algorithms rely on specific assumptions about data distribution, independence, and relationships between variables. EDA helps you check these assumptions before you build models, so your conclusions don’t rest on assumptions the data quietly violates.

Business Context Integration

EDA connects statistical insights with business realities. It helps you understand whether your findings make practical sense and can guide actionable business decisions.

The Essential EDA Toolkit

Modern EDA relies heavily on Python’s powerful ecosystem of libraries, each serving a specific purpose in your analytical workflow.

Core Python Libraries for EDA

Pandas serves as your data manipulation powerhouse, providing the DataFrame structure that makes data cleaning, filtering, and transformation intuitive. It’s your primary tool for loading datasets, handling missing values, and performing basic statistical operations.

NumPy provides the mathematical foundation, offering efficient array operations and statistical functions that power many of your calculations.

Matplotlib gives you complete control over data visualization, allowing you to create everything from simple line plots to complex multi-panel figures. While it requires more code, it offers unmatched flexibility.

Seaborn builds on Matplotlib to provide statistical visualizations with minimal code. It’s perfect for correlation heatmaps, distribution plots, and categorical data analysis.

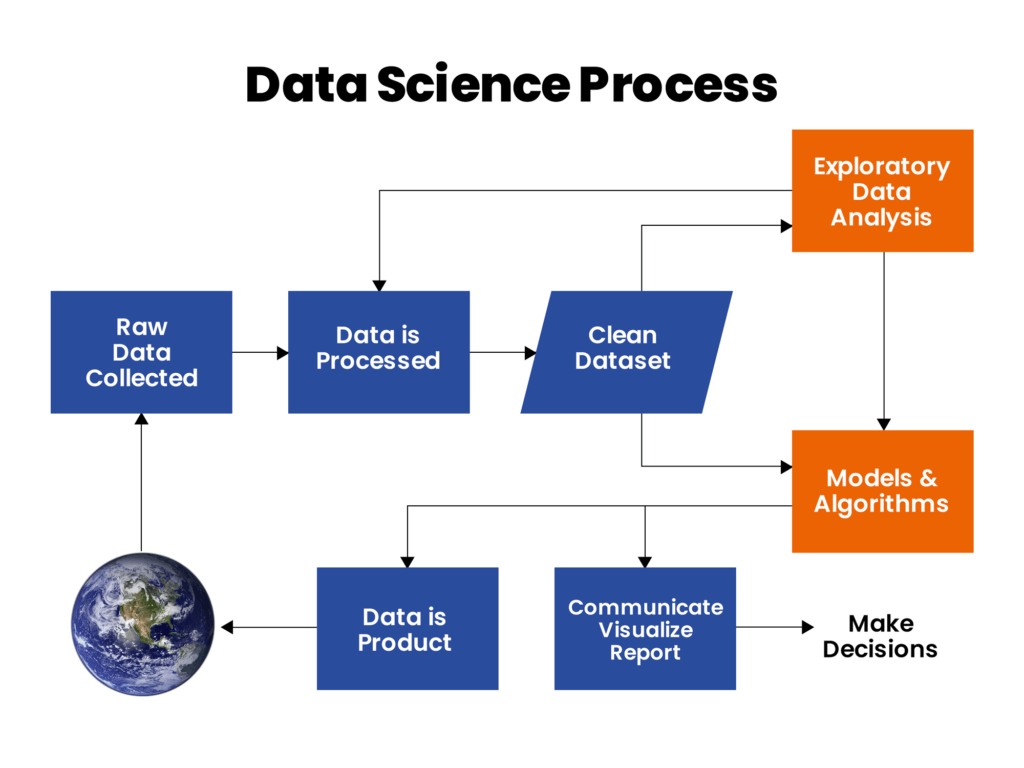

The Complete EDA Workflow: From Raw Data to Actionable Insights

Stage 1: Understanding Your Data

Before diving into analysis, you need to understand what you’re working with. This involves examining the basic structure and characteristics of your dataset.

Start by loading your data and getting familiar with its dimensions. Ask yourself the following questions: How many observations and variables do you have? What types of data are you dealing with — numerical, categorical, or datetime? This initial exploration sets the context for everything that follows.

```python
import pandas as pd
import numpy as np

# Load your dataset
df = pd.read_csv('your_dataset.csv')

# Basic dataset information
print(f"Dataset shape: {df.shape}")
print(f"Data types:\n{df.dtypes}")
print(f"First 5 rows:\n{df.head()}")
```

Understanding data types is crucial because it determines which analytical techniques you can apply. Numerical variables allow for statistical summaries and correlation analysis, while categorical variables require different approaches like frequency analysis and cross-tabulations.
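Because later stages treat numerical and categorical variables differently, it can help to sort columns by type once, right after loading. The sketch below does this with pandas’ `select_dtypes`. The toy DataFrame and its columns (`age`, `income`, `segment`, `signup_date`) are hypothetical. Treating every column that is neither numeric nor datetime as categorical is a simplifying assumption, and free-text or ID columns may need separate handling.

```python
import pandas as pd

# Hypothetical toy data standing in for your own DataFrame
df = pd.DataFrame({
    'age': [34, 45, 29],
    'income': [52000.0, 61000.0, 48000.0],
    'segment': ['retail', 'wholesale', 'retail'],
    'signup_date': pd.to_datetime(['2023-01-05', '2023-02-11', '2023-03-20']),
})

# Numeric columns support summary statistics and correlations
numerical_columns = df.select_dtypes(include='number').columns.tolist()
# Datetime columns support time-based grouping and trend analysis
datetime_columns = df.select_dtypes(include='datetime').columns.tolist()
# Simplifying assumption: everything else is treated as categorical
categorical_columns = df.select_dtypes(exclude=['number', 'datetime']).columns.tolist()

print('Numerical:  ', numerical_columns)
print('Categorical:', categorical_columns)
print('Datetime:   ', datetime_columns)
```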

Stage 2: Data Quality Assessment and Cleaning

Real-world data is rarely perfect. This stage focuses on identifying and addressing data quality issues that could impact your analysis.

Missing Value Analysis is your first priority. Missing data can introduce bias and reduce the statistical power of your analysis. Identify which variables have missing values, understand the patterns of missingness, and decide on appropriate handling strategies. To learn how to handle missing values in detail, check out my blog posts: Mastering Missing Data in Your Datasets — Part 1 and Mastering Missing Data in Your Datasets — Part 2.

```python
# Check for missing values
missing_summary = pd.DataFrame({
    'Missing_Count': df.isnull().sum(),
    'Missing_Percentage': (df.isnull().sum() / len(df) * 100).round(2),
}).sort_values('Missing_Count', ascending=False)

print(missing_summary)
```

Duplicate Detection helps ensure data integrity. Duplicate records can skew your analysis and lead to overrepresentation of certain observations.
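Here is a minimal sketch of duplicate checks in pandas, using a small hypothetical customer table. It separates exact duplicate rows from repeated keys (here a hypothetical `customer_id` column). A repeated ID with differing values usually calls for investigation rather than silent deletion.

```python
import pandas as pd

# Hypothetical customer records; rows 0 and 2 are exact duplicates
df = pd.DataFrame({
    'customer_id': [101, 102, 101, 103],
    'city': ['Leeds', 'York', 'Leeds', 'Hull'],
    'spend': [250.0, 90.0, 250.0, 120.0],
})

# Rows identical to an earlier row in every column
n_exact = df.duplicated().sum()
print(f"Exact duplicate rows: {n_exact}")

# Rows that repeat an earlier key, even if other columns differ
n_key = df.duplicated(subset=['customer_id']).sum()
print(f"Rows repeating an earlier customer_id: {n_key}")

# Show every copy (keep=False marks all occurrences) before deciding what to drop
print(df[df.duplicated(keep=False)])

# Keep the first occurrence of each exact duplicate
df_deduped = df.drop_duplicates(keep='first').reset_index(drop=True)
print(df_deduped.shape)
```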

Data Type Validation ensures that your variables are stored in appropriate formats. Dates should be datetime objects, categorical variables should be properly encoded, and numerical values should be in the correct numeric format.
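Raw CSV imports often leave dates and numbers stored as text. One way to validate and convert them is sketched below with `pd.to_datetime`, `pd.to_numeric`, and `astype('category')`. The column names and values are hypothetical. Passing `errors='coerce'` turns unparseable entries into missing values, so conversion problems show up as counts you can inspect instead of stopping the script.

```python
import pandas as pd

# Hypothetical raw columns as they often arrive from a CSV: everything is text
raw = pd.DataFrame({
    'order_date': ['2024-01-03', '2024-01-04', 'not a date'],
    'amount': ['19.99', '5', 'N/A'],
    'channel': ['web', 'store', 'web'],
})

clean = raw.copy()
# Parse dates; unparseable strings become NaT instead of raising an error
clean['order_date'] = pd.to_datetime(clean['order_date'], errors='coerce')
# Parse numbers; unparseable strings become NaN
clean['amount'] = pd.to_numeric(clean['amount'], errors='coerce')
# Store low-cardinality labels with the pandas 'category' dtype
clean['channel'] = clean['channel'].astype('category')

print(clean.dtypes)
# Values that failed conversion are now missing and easy to count
print(clean[['order_date', 'amount']].isna().sum())
```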

Stage 3: Univariate Analysis — Understanding Individual Variables

This stage focuses on understanding each variable in isolation, examining distributions, central tendencies, and spread.

For Numerical Variables, start with descriptive statistics to understand the center, spread, and shape of your distributions:

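```python
import matplotlib.pyplot as plt
import seaborn as sns

# Descriptive statistics: count, mean, std, min, quartiles, and max per numeric column
print(df.describe())

# Numeric columns to plot
numerical_columns = df.select_dtypes(include='number').columns

# Distribution analysis: one histogram with a KDE curve per column, three plots per row
n_rows = max(1, (len(numerical_columns) + 2) // 3)
plt.figure(figsize=(12, 4 * n_rows))
for i, column in enumerate(numerical_columns):
    plt.subplot(n_rows, 3, i + 1)
    sns.histplot(df[column], kde=True)
    plt.title(f'Distribution of {column}')
plt.tight_layout()
plt.show()
```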

Look for skewness, outliers, and unusual patterns. A normal distribution appears bell-shaped, while skewed distributions may require transformation before modelling.
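To put a number on skewness and check whether a transformation helps, you can compare the sample skewness before and after a log transform. This sketch uses simulated lognormal data as a hypothetical stand-in for a right-skewed variable such as order value. `np.log1p` is only one option and assumes non-negative values. Box-Cox and Yeo-Johnson transforms are common alternatives.

```python
import numpy as np
import pandas as pd

# Hypothetical right-skewed variable (e.g. order values): lognormal draws
rng = np.random.default_rng(42)
values = pd.Series(rng.lognormal(mean=3.0, sigma=1.0, size=5_000), name='order_value')

# Sample skewness: near 0 for symmetric data, positive for a long right tail
print(f"Skewness before: {values.skew():.2f}")

# log1p computes log(1 + x): it compresses the right tail and handles zeros
log_values = np.log1p(values)
print(f"Skewness after log1p: {log_values.skew():.2f}")
```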

For Categorical Variables, examine frequency distributions and proportions:

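```python
# Categorical analysis
# Treat every column that is neither numeric nor datetime as categorical
categorical_columns = df.select_dtypes(exclude=['number', 'datetime']).columns

for column in categorical_columns:
    print(f"\n{column} Distribution:")
    print(df[column].value_counts())
    print(f"Proportions:\n{df[column].value_counts(normalize=True)}")
```

Stage 4: Bivariate Analysis — Uncovering Relationships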

This is where EDA becomes truly exciting. You’ll explore how variables relate to each other, uncovering correlations, dependencies, and interactions.

Numerical vs. Numerical Relationships are best explored through scatter plots and correlation analysis:

```python
# Correlation matrix
correlation_matrix = df[numerical_columns].corr()
sns.heatmap(correlation_matrix, annot=True, cmap='coolwarm', center=0)
plt.title('Correlation Matrix')
plt.show()
```

As a rule of thumb, strong correlations (above 0.7 or below -0.7) can signal multicollinearity issues for modeling, while moderate correlations (absolute values between 0.3 and 0.7) suggest meaningful relationships worth investigating further.
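With many columns, scanning a heatmap by eye gets tedious. The sketch below lists every pair of variables whose correlation exceeds the 0.7 rule of thumb. The data is simulated (column `b` is constructed from `a`, while `c` is independent noise), so treat the names and threshold as illustrative.

```python
import numpy as np
import pandas as pd

# Hypothetical numeric data: 'b' is built from 'a'; 'c' is unrelated noise
rng = np.random.default_rng(0)
a = rng.normal(size=1_000)
df = pd.DataFrame({
    'a': a,
    'b': 2 * a + rng.normal(scale=0.5, size=1_000),
    'c': rng.normal(size=1_000),
})

corr = df.corr()
cols = corr.columns

# Walk the upper triangle (j > i) so each pair appears once and the diagonal is skipped
pairs = [
    (cols[i], cols[j], corr.iloc[i, j])
    for i in range(len(cols))
    for j in range(i + 1, len(cols))
]

# Keep pairs beyond the rule-of-thumb threshold |r| > 0.7, strongest first
strong = sorted((p for p in pairs if abs(p[2]) > 0.7), key=lambda p: abs(p[2]), reverse=True)
for x, y, r in strong:
    print(f"{x} ~ {y}: r = {r:.2f}")
```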

Categorical vs. Numerical Relationships can be explored using box plots, violin plots, or grouped statistics:

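```python
# Box plots for categorical-numerical relationships
# ('category' and 'numerical_variable' are placeholders for your own column names)
plt.figure(figsize=(10, 6))
sns.boxplot(data=df, x='category', y='numerical_variable')
plt.xticks(rotation=45)
plt.title('Numerical Variable by Category')
plt.show()

# Grouped statistics: summary of the numerical variable within each category
print(df.groupby('category')['numerical_variable'].describe())
```

Categorical vs. Categorical Relationships are typically explored with cross-tabulation and a chi-square test of independence: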

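```python
from scipy.stats import chi2_contingency
counts = pd.crosstab(df['category1'], df['category2'])  # cross-tabulation of raw counts
print(pd.crosstab(df['category1'], df['category2'], normalize='index'))  # row proportions
print(f"Chi-square p-value: {chi2_contingency(counts)[1]:.4f}")  # test runs on counts, not proportions
```

Stage 5: Multivariate Analysis — Understanding Complex Interactions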

Advanced EDA techniques help you understand how multiple variables interact simultaneously.

Correlation Matrices provide a comprehensive view of all pairwise relationships in your numerical data. Look for clusters of highly correlated variables and unexpected relationships.

Pair Plots draw a scatter plot for every pair of numerical variables (with each variable’s distribution on the diagonal), helping you spot non-linear relationships and outliers:

```python
# Pair plot for comprehensive relationship analysis
# (on large datasets, plotting a random sample of rows keeps this fast)
sns.pairplot(df[numerical_columns])
plt.show()
```
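Pair plots are worth the screen space because the default Pearson coefficient behind `df.corr()` only measures linear association. In the hypothetical example below, `y = exp(x)` rises perfectly with `x`, yet Pearson’s r stays well below 1, while Spearman’s rank correlation (available through `df.corr(method='spearman')`) reaches 1.0. A scatter plot would reveal the curve immediately.

```python
import numpy as np
import pandas as pd

# Hypothetical relationship that is perfectly monotonic but far from linear
x = np.linspace(0, 10, 200)
df = pd.DataFrame({'x': x, 'y': np.exp(x)})

# Pearson measures linear association; Spearman correlates ranks,
# so any strictly increasing relationship scores 1.0
pearson = df.corr(method='pearson').loc['x', 'y']
spearman = df.corr(method='spearman').loc['x', 'y']
print(f"Pearson:  {pearson:.2f}")
print(f"Spearman: {spearman:.2f}")
```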

Principal Component Analysis (PCA) can help reduce dimensionality by finding the directions (principal components) along which the data varies most, which often exposes the dominant structure in high-dimensional data.
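Here is a minimal PCA sketch, assuming scikit-learn is installed. The five features are simulated from two hidden factors with fixed, hypothetical loadings, so the explained-variance ratios should show the first two components capturing nearly all of the variance. Standardizing before PCA is a common default when features are measured on different scales.

```python
import numpy as np
import pandas as pd
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

# Hypothetical data: 5 numeric features generated from 2 hidden factors plus small noise
rng = np.random.default_rng(7)
factors = rng.normal(size=(500, 2))
loadings = np.array([
    [1.0, 0.8, 0.5, 0.0, 0.2],
    [0.0, 0.3, 0.6, 1.0, 0.9],
])
X = factors @ loadings + rng.normal(scale=0.1, size=(500, 5))
df = pd.DataFrame(X, columns=[f'f{i}' for i in range(5)])

# Standardize first: PCA follows variance, so features in large units
# would otherwise dominate the components
X_scaled = StandardScaler().fit_transform(df)

pca = PCA()
pca.fit(X_scaled)

# Fraction of total variance captured by each component, and the running total
explained = pca.explained_variance_ratio_
cumulative = np.cumsum(explained)
print(np.round(explained, 3))
print(np.round(cumulative, 3))
```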

Stage 6: Outlier Detection and Treatment

Outliers can significantly impact your analysis and model performance. EDA helps you identify, understand, and appropriately handle these extreme values.

Statistical Methods like the Interquartile Range (IQR) method provide systematic outlier detection:

```python
# IQR method for outlier detection
def detect_outliers_iqr(df, column):
    """Return the rows of df whose value in `column` lies outside Q1 - 1.5*IQR or Q3 + 1.5*IQR."""
    Q1 = df[column].quantile(0.25)
    Q3 = df[column].quantile(0.75)
    IQR = Q3 - Q1
    lower_bound = Q1 - 1.5 * IQR
    upper_bound = Q3 + 1.5 * IQR
    return df[(df[column] < lower_bound) | (df[column] > upper_bound)]
```

Visual Methods like box plots and scatter plots help you understand the context and impact of outliers.

Remember that not all outliers should be removed. Some represent genuine extreme values that contain important information. So, always consider the business context before deciding how to handle outliers.
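When outliers are genuine, flagging or capping them is often safer than deleting rows. The sketch below uses the same 1.5 × IQR fences as `detect_outliers_iqr`, factored into a hypothetical helper `iqr_bounds` that returns the bounds themselves. The `amount` data is made up. Capping at the fences (a form of winsorizing) keeps every record while limiting the influence of extreme values.

```python
import pandas as pd

def iqr_bounds(series, k=1.5):
    """Hypothetical helper: return (Q1 - k*IQR, Q3 + k*IQR) for a numeric Series."""
    q1, q3 = series.quantile(0.25), series.quantile(0.75)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr

# Hypothetical transaction amounts with one extreme value
df = pd.DataFrame({'amount': [12.0, 15.0, 14.0, 10.0, 13.0, 16.0, 11.0, 400.0]})

lower, upper = iqr_bounds(df['amount'])

# Option 1: keep every row, but flag outliers for separate review
df['is_outlier'] = ~df['amount'].between(lower, upper)

# Option 2: cap (winsorize) extreme values at the fences instead of dropping them
df['amount_capped'] = df['amount'].clip(lower=lower, upper=upper)

print(df)
```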

Common EDA Pitfalls and How to Avoid Them

Data science projects fail for many reasons, but surprisingly often, the problems start right at the beginning during exploratory analysis. These aren’t complex theoretical issues — they’re basic mistakes that happen repeatedly across organizations and skill levels. Understanding these patterns can save tremendous time and frustration down the road.

The Impatience Problem

Most data science projects operate under tight deadlines, which creates natural pressure to move quickly toward modeling and results. This urgency often leads teams to treat EDA as a brief checkpoint rather than a fundamental investigation phase. The consequences show up later when models perform poorly on real data or when stakeholders question findings that don’t align with business reality.

Practitioner surveys commonly report that data preparation and understanding consume the majority of time in data science projects, with figures of 60–80% of total effort often cited. Teams that allocate sufficient time upfront for thorough exploration tend to build more robust models and generate more actionable insights. The initial time investment pays dividends throughout the project lifecycle.

Context Blindness

Data doesn’t exist in isolation, yet many analyses proceed as if the numbers emerged from nowhere. Every dataset has an origin story involving specific collection methods, business processes, and external factors that influence the patterns within. Ignoring this context leads to misinterpretation of trends and relationships.

Consider seasonal businesses where normal patterns shift dramatically during certain periods, or datasets that span major organizational changes like mergers, product launches, or market disruptions. These contextual factors often explain apparent anomalies in the data and provide crucial insights for model building. Successful EDA involves understanding not just what the data shows, but why it shows those patterns.
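One quick way to tell seasonality from anomalies is to compare each calendar month with the overall average. The sketch below simulates two years of hypothetical daily sales with a December peak and computes a simple seasonal index with pandas `groupby`. With real data, you would also compare years to confirm that a peak actually recurs.

```python
import numpy as np
import pandas as pd

# Hypothetical two years of daily sales where December runs about 50% higher
dates = pd.date_range('2022-01-01', '2023-12-31', freq='D')
rng = np.random.default_rng(1)
base = 100 + rng.normal(scale=5, size=len(dates))
sales = pd.Series(np.where(dates.month == 12, base * 1.5, base), index=dates, name='sales')

# Average daily sales for each calendar month, pooled across both years
monthly_profile = sales.groupby(sales.index.month).mean()

# Seasonal index: each month's average relative to the average of all months.
# A month well above 1.0 is a candidate seasonal peak, not necessarily an anomaly.
seasonal_index = monthly_profile / monthly_profile.mean()
print(seasonal_index.round(2))
```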

Surface-Level Analysis

The most readily apparent patterns in data are often the least valuable for decision-making. Confirming that large customers spend more money or that promotional periods drive sales increases provides little actionable insight because these relationships are already well understood by business stakeholders.

Value emerges from deeper investigation that reveals unexpected relationships, identifies underperforming segments, or uncovers hidden patterns that contradict conventional wisdom. This deeper analysis requires moving beyond basic summary statistics and correlation coefficients to explore interactions, segment-specific behaviors, and time-dependent patterns that might not be immediately obvious.

Visualization Missteps

Poor visualization choices can obscure important patterns or mislead audiences about the data’s true story. Common problems include inappropriate chart types for the data structure, overwhelming audiences with too much information in single displays, and inconsistent scaling or formatting that makes comparisons difficult.

Effective visualizations serve the dual purpose of exploration and communication. During exploration, they help analysts spot patterns and anomalies quickly. For communication, they need to convey specific insights clearly to audiences with varying technical backgrounds. The best approach involves matching visualization types to both the data structure and the intended message.

Misinterpreting Relationships

Statistical relationships between variables can suggest interesting connections, but they don’t establish causation. This distinction becomes particularly important when EDA findings influence business decisions or policy recommendations. Strong correlations might reflect common underlying causes, reverse causation, or pure coincidence rather than direct causal relationships.
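A small simulation can make the common-cause problem concrete. In this hypothetical setup, a variable `z` (think daily temperature) drives both `x` and `y`, which never influence each other, yet `x` and `y` come out clearly correlated. Removing the linear effect of `z` from both variables (a simple partial correlation) makes the association essentially vanish. This only works when the confounder has been measured, and a vanishing partial correlation is evidence to weigh, not proof of a causal structure.

```python
import numpy as np

def residualize(v, z):
    """Remove the best-fit linear effect of z from v (ordinary least squares on one predictor)."""
    slope, intercept = np.polyfit(z, v, deg=1)
    return v - (slope * z + intercept)

# Hypothetical confounder z drives both x and y; x and y never affect each other
rng = np.random.default_rng(3)
n = 10_000
z = rng.normal(size=n)
x = z + rng.normal(size=n)   # e.g. ice-cream sales
y = z + rng.normal(size=n)   # e.g. sunburn cases

raw_r = np.corrcoef(x, y)[0, 1]
# Correlate what is left of x and y after removing z's linear influence
partial_r = np.corrcoef(residualize(x, z), residualize(y, z))[0, 1]

print(f"Correlation of x and y:            {raw_r:.2f}")
print(f"After controlling for z (partial): {partial_r:.2f}")
```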

Professional practice involves clearly distinguishing between observed associations and causal claims. When presenting correlation findings, successful analysts discuss potential explanations, acknowledge limitations, and suggest approaches for further validation. This transparency builds credibility and prevents overinterpretation of exploratory findings.

Inadequate Quality Assessment

Data quality issues can fundamentally undermine analysis results, yet they’re often discovered late in projects when addressing them becomes costly and time-consuming. Missing values, inconsistent formatting, duplicate records, and measurement errors all create potential problems for downstream analysis.

Comprehensive quality assessment involves systematic checking for completeness, consistency, accuracy, and validity. This includes understanding missingness patterns, identifying outliers and their potential causes, and validating that data values fall within expected ranges. Addressing quality issues early prevents more serious problems during modeling and interpretation phases.
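Range and validity checks are easy to automate once the expected values are written down. The sketch below encodes a few hypothetical business rules (ages between 0 and 120, discounts between 0 and 100 percent, a fixed list of country codes) as pandas predicates and reports the values that break each rule. Real rules should come from domain experts or the data’s documentation.

```python
import pandas as pd

# Hypothetical records containing a few impossible values
df = pd.DataFrame({
    'age': [34, -2, 51, 130],
    'discount_pct': [10, 5, 120, 0],
    'country': ['UK', 'FR', 'UK', 'XX'],
})

# Hypothetical business rules: each maps a column to a "value is valid" test
rules = {
    'age': lambda s: s.between(0, 120),
    'discount_pct': lambda s: s.between(0, 100),
    'country': lambda s: s.isin(['UK', 'FR', 'DE']),
}

# Collect and report the values that violate each column's rule
violations = {}
for column, is_valid in rules.items():
    bad = df.loc[~is_valid(df[column]), column]
    violations[column] = bad.tolist()
    print(f"{column}: {len(bad)} invalid value(s): {bad.tolist()}")
```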

Avoiding These Common Traps

Recognition of these patterns suggests several protective strategies. Building sufficient time into project timelines for thorough exploration helps resist the pressure to rush toward modeling. Engaging with domain experts and data generators provides essential context for interpreting patterns correctly.

Developing systematic approaches to quality assessment ensures that data issues are identified and addressed early. Focusing exploration efforts on finding unexpected or counterintuitive patterns increases the likelihood of generating valuable insights. Finally, maintaining clear distinctions between observed relationships and causal claims helps prevent overinterpretation of exploratory findings.

These practices become more natural with experience, but they require conscious attention and discipline, especially under project pressure. Teams that consistently apply these principles tend to produce more reliable and actionable data science results.

Conclusion

Exploratory Data Analysis is more than a technical skill — it’s an art form that combines statistical knowledge, business acumen, and creative thinking. The insights you uncover during EDA will guide every subsequent decision in your data science project, from feature engineering to model selection to business recommendations.

Remember that EDA is an iterative process. Each question you answer will lead to new questions, each visualization will suggest new analyses, and each insight will deepen your understanding of the data. Embrace this journey of discovery, and don’t rush to conclusions.

The datasets you encounter in your career will be as diverse as the problems you’re asked to solve. Some will be clean and well-structured, others will be messy and incomplete. Some will tell clear stories, others will require deep investigation to reveal their secrets. Your EDA skills will be your constant companion through all these challenges.

Start with simple datasets and basic techniques, then gradually increase complexity as your confidence grows. Focus on understanding the business context behind your data, and always ask yourself: “What story is this data trying to tell me, and how can I help others understand it?”

The journey from raw data to actionable insights begins with a single step: loading your first dataset and asking your first question. What patterns will you discover? What insights will drive your next business decision? What problems will you solve with your newfound understanding of data?

Your exploration starts now. Load a dataset, fire up your Python environment, and begin the most rewarding conversation of your data science career.