One of the most common questions machine learning practitioners ask, from students to professionals, is which model to choose for their problem statement. There are so many models out there, with new open-source ones released all the time, that it’s hard to settle on one to train. Even once you commit to one, it’s hard not to wonder whether something else would have performed better.

This comprehensive guide walks you through the decision-making process in simple terms, helping you understand how to choose among traditional machine learning models in various real-world scenarios.

Please note that this article only discusses basic traditional algorithms and is best viewed as a theoretical guide to the concepts. With the evolution of deep learning models and open-source platforms like Hugging Face, there are many newer models that might serve as a better choice. However, it is always good to know the basics as a foundation.

Machine Learning Fundamentals Recap

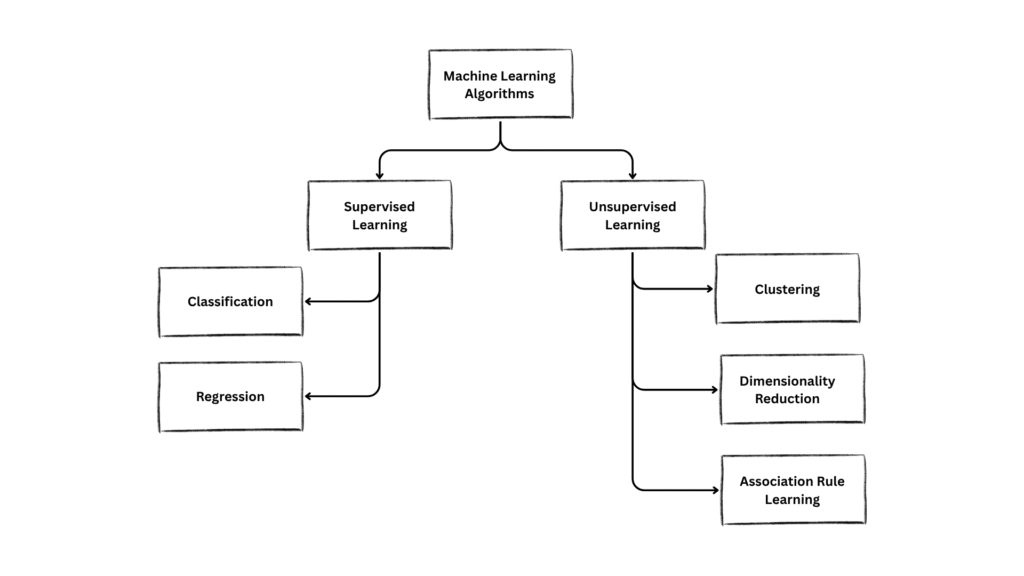

The figure above recaps the basic categories of machine learning problems.

Key Factors Influencing Model Selection

Type of Dataset

The type of data you’re working with significantly influences your model choice. Depending on the problem statement, your dataset might be text, numerical, image, audio, or video. Some models are specific to a certain type of data, so the data type alone can significantly narrow down your list of candidate models.

Type of Problems

Models can be further classified by the type of problem being solved. As shown in the figure above, machine learning algorithms can be broadly classified into two types:

- Supervised Learning

- Unsupervised Learning

Supervised learning algorithms can be further classified into two types:

- Classification

- Regression

Unsupervised learning algorithms can be further classified as:

- Clustering

- Dimensionality Reduction

We won’t discuss these problem types in detail, as that is beyond the scope of this blog. Let’s stick to the choice of models for now.
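The taxonomy above boils down to two questions: do you have labels, and if so, is the target a category or a continuous number? The function below is a minimal sketch of that decision; the function name and its parameters are hypothetical, chosen only for illustration.

```python
def problem_type(has_labels: bool, continuous_target: bool = False, goal: str = "group") -> str:
    """Map a problem onto the four categories described above (illustrative helper)."""
    if has_labels:
        # Supervised learning: the kind of target decides the sub-type.
        return "regression" if continuous_target else "classification"
    # Unsupervised learning: there is no target, so the goal decides the sub-type.
    if goal == "group":
        return "clustering"
    if goal == "compress":
        return "dimensionality reduction"
    raise ValueError(f"unknown goal for unlabelled data: {goal!r}")


print(problem_type(has_labels=True))                          # e.g. spam vs. not spam
print(problem_type(has_labels=True, continuous_target=True))  # e.g. house prices
print(problem_type(has_labels=False, goal="compress"))        # e.g. shrinking 500 features
```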

Classification Problems

Numerical Data (Traditional Machine Learning)

The following machine learning algorithms can be considered for classification problems on numerical and tabular data.

- Decision Trees

- Support Vector Machines

- Naive Bayes

- Random Forests

- K-Nearest Neighbours

- Logistic Regression

Text Data (Natural Language Processing)

- Support Vector Machines

- Naive Bayes

- K-Nearest Neighbours

- Logistic Regression

Image (Computer Vision)

- Decision Trees

- Support Vector Machines

- Naive Bayes

- Random Forests

- K-Nearest Neighbours

- Logistic Regression

Audio (Sound Processing)

- Decision Trees

- Support Vector Machines

- Random Forests

- K-Nearest Neighbours

Video (Temporal Visual Information)

- Decision Trees

- Support Vector Machines

- Random Forests

- K-Nearest Neighbours

Regression Problems

Numerical Data (Traditional Machine Learning)

- Linear Regression

- Ridge and Lasso Regression

- Polynomial Regression

- Decision Trees

- Random Forest Regression

- Support Vector Regression

- k-Nearest Neighbors Regression

Other Forms of Data (Text, Image, Audio)

Text, image, and audio data must be converted into numerical features before applying traditional regression models. This process is called feature extraction and is essential because most machine learning algorithms, including regression, require numerical input.

Some examples of feature extraction techniques for different forms of data are:

Text: bag-of-words, TF-IDF, embeddings

Image: color histograms, texture descriptors, SIFT, HOG

Audio: MFCCs, spectrograms, chroma features

Once text, image, or audio data are numerically represented, most traditional regression models can be applied. This includes linear regression, ridge/lasso regression, polynomial regression, decision trees, random forests, support vector regression (SVR), and k-nearest neighbors regression.
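To make the text case concrete, here is a minimal TF-IDF sketch in plain Python. It assumes naive whitespace tokenization and uses the smoothed IDF formula ln((1 + n) / (1 + df)) + 1, which is also scikit-learn’s default; real pipelines would normally use a library vectorizer instead. The function name `tfidf` and the sample documents are hypothetical.

```python
import math
from collections import Counter


def tfidf(docs):
    """Turn raw documents into fixed-length TF-IDF vectors, one row per document."""
    tokenized = [doc.lower().split() for doc in docs]  # naive whitespace tokenization
    vocab = sorted({tok for toks in tokenized for tok in toks})
    n_docs = len(docs)
    # Document frequency: the number of documents each term appears in.
    df = Counter(tok for toks in tokenized for tok in set(toks))
    # Smoothed inverse document frequency: rarer terms get larger weights.
    idf = {tok: math.log((1 + n_docs) / (1 + df[tok])) + 1 for tok in vocab}
    rows = []
    for toks in tokenized:
        counts = Counter(toks)
        total = len(toks) or 1  # avoid dividing by zero on empty documents
        # Term frequency (share of the document) times IDF, in vocabulary order.
        rows.append([counts[tok] / total * idf[tok] for tok in vocab])
    return vocab, rows


docs = ["cheap flights to paris", "cheap hotels in paris", "learn python fast"]
vocab, X = tfidf(docs)
print(vocab)
print([round(v, 3) for v in X[0]])
```

Every row of `X` now has one numeric column per vocabulary term, so it has the fixed-length numerical form that the regression models listed above expect.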

However, some regression models have assumptions or requirements that may not be met by all types of feature-extracted data:

- Linear regression and its variants assume linear relationships and may not perform well if the extracted features do not capture the necessary information or if the relationship is highly non-linear.

- Poisson regression is designed for count targets, so it is generally not a fit for text, image, or audio regression tasks, even after feature extraction, unless the value being predicted is itself a count.

- Some specialized statistical models may also require the data to be inherently numerical and may not generalize well to features derived from unstructured data like text, image, or audio.
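The first caveat is easy to see on synthetic data. The sketch below fits ordinary least squares with a single feature to a quadratic relationship, first on the raw feature and then on an engineered x² feature (the idea behind polynomial regression). The helper names and the toy data are made up for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = a + b * x with a single feature."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    return mean_y - b * mean_x, b


def r_squared(xs, ys, a, b):
    """Share of the variance in ys explained by the fitted line (1.0 is perfect)."""
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1 - ss_res / ss_tot


# Toy data with a strongly non-linear (quadratic) relationship.
xs = [x / 10 for x in range(-20, 21)]
ys = [x ** 2 for x in xs]

# Raw feature: a straight line cannot follow the parabola.
r2_raw = r_squared(xs, ys, *fit_line(xs, ys))

# Engineered feature x^2: the relationship becomes linear in the new feature.
xs_sq = [x ** 2 for x in xs]
r2_sq = r_squared(xs_sq, ys, *fit_line(xs_sq, ys))

print(f"R^2 with raw x:  {r2_raw:.3f}")
print(f"R^2 with x^2:    {r2_sq:.3f}")
```

On this data the first R² is close to 0 and the second is essentially 1: the model family is the same, but only the second set of features exposes a linear relationship.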

Video (Temporal Visual Information)

- Linear Regression

- Regression Trees

- Random Forests

- Support Vector Regression

- Time Series Models

Clustering

Clustering is an unsupervised learning technique used to discover hidden patterns and structures within unlabelled data. As the name suggests, it groups similar data points together and separates dissimilar ones, thereby creating clusters of data. This technique is commonly used for anomaly detection, customer segmentation, and similar tasks. Some commonly used clustering techniques are K-Means clustering, hierarchical clustering, and DBSCAN (Density-Based Spatial Clustering of Applications with Noise).
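As an illustration of how K-Means groups similar points, here is a minimal pure-Python sketch of Lloyd’s algorithm, the classic procedure for fitting K-Means. It assumes points are tuples of numbers and initializes centroids from randomly chosen data points; libraries such as scikit-learn use a smarter initialization (k-means++) and are far faster. The toy "customer" data is made up.

```python
import math
import random


def kmeans(points, k, n_iter=100, seed=0):
    """Lloyd's algorithm: alternate between assigning points and moving centroids."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen data points
    for _ in range(n_iter):
        # Assignment step: every point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its old centroid).
        new_centroids = [
            tuple(sum(coords) / len(c) for coords in zip(*c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:  # converged: no centroid moved
            break
        centroids = new_centroids
    return centroids, clusters


# Two well-separated groups of customers described by two features (toy data).
points = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (11, 11)]
centroids, clusters = kmeans(points, k=2)
print(sorted(centroids))
```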

Dimensionality Reduction

Dimensionality reduction transforms high-dimensional data into low-dimensional data, thereby reducing computational complexity and memory requirements. It is often used as a preprocessing step to shrink the dataset by discarding redundant or irrelevant information while preserving what is essential to the problem statement. There are multiple dimensionality reduction techniques, the most common being PCA (Principal Component Analysis), t-SNE (t-Distributed Stochastic Neighbor Embedding), and UMAP (Uniform Manifold Approximation and Projection).
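PCA finds the directions along which the data varies most. Libraries compute them with an eigendecomposition or SVD; the pure-Python sketch below swaps in power iteration to find just the first principal component and project 2-D points onto it, reducing each point to a single number. The function names and the toy data are illustrative assumptions.

```python
import math
import random


def first_principal_component(data, n_iter=200, seed=0):
    """Return the column means and the unit direction of maximum variance."""
    n, d = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in data]
    # Sample covariance matrix (d x d).
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    # Power iteration: repeatedly multiplying by the covariance matrix pulls a
    # random vector towards its dominant eigenvector, i.e. the first component.
    rng = random.Random(seed)
    v = [rng.random() for _ in range(d)]
    for _ in range(n_iter):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return means, v


def project(data, means, component):
    """Reduce each row to one number: its coordinate along the component."""
    return [sum((x - m) * c for x, m, c in zip(row, means, component)) for row in data]


# Toy 2-D data lying close to the line y = 2x.
data = [(1, 2.1), (2, 3.9), (3, 6.2), (4, 7.8), (5, 10.1)]
means, pc1 = first_principal_component(data)
reduced = project(data, means, pc1)
print([round(c, 3) for c in pc1])      # close to the direction (1, 2) / sqrt(5), up to sign
print([round(z, 2) for z in reduced])  # 2 features per point reduced to 1
```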

Size of the Dataset

The size of your dataset is probably the most important factor when picking an unsupervised learning method. Different algorithms handle data sizes in completely different ways, and what works great for a small dataset might crash your computer with a large one.

When You Have Small Datasets (Under 1,000 Data Points):

If you’re working with a smaller dataset, you have more freedom to choose computationally intensive methods. Hierarchical clustering becomes a viable option here because even though it’s slow, it won’t take forever with fewer data points. You can also use t-SNE for creating beautiful visualizations since it won’t bog down your system. Kernel PCA is another good choice when you suspect your data has complex, non-linear patterns that regular PCA might miss.

Medium-Sized Datasets (1,000 to 100,000 Data Points):

This is the sweet spot where you have enough data to find meaningful patterns but not so much that everything becomes impossibly slow. K-Means clustering really shines here — it’s fast enough to run quickly but has enough data to create stable, reliable clusters. For reducing dimensions, regular PCA works wonderfully and runs in reasonable time. DBSCAN is also a great choice if your data has weird shapes or lots of noise that other methods struggle with.

Large Datasets (Over 100,000 Data Points):

When you’re dealing with big data, your options become more limited because many algorithms simply can’t handle the computational load. K-Means remains one of your best friends here because it scales well and doesn’t consume excessive memory. PCA is still your go-to for dimension reduction since it handles large datasets efficiently. UMAP becomes much more practical than t-SNE for visualization because it’s designed to work with bigger datasets. Unfortunately, methods like hierarchical clustering become practically impossible due to their enormous computational requirements.
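The rules of thumb above can be captured in a small lookup helper. The thresholds (1,000 and 100,000 data points) and the method lists come straight from this section; the function itself is a hypothetical convenience, and real cut-offs depend on your hardware and on how many features each point has.

```python
def shortlist_unsupervised(n_samples: int) -> dict:
    """Return candidate methods for a dataset size, following the rough guidance above."""
    if n_samples < 1_000:
        # Small: computationally expensive methods are still affordable.
        return {"clustering": ["Hierarchical clustering"],
                "dimensionality reduction": ["Kernel PCA", "t-SNE (visualization)"]}
    if n_samples <= 100_000:
        # Medium: fast, stable methods shine.
        return {"clustering": ["K-Means", "DBSCAN"],
                "dimensionality reduction": ["PCA"]}
    # Large: only methods that scale well remain practical.
    return {"clustering": ["K-Means"],
            "dimensionality reduction": ["PCA", "UMAP (visualization)"]}


for n in (500, 50_000, 2_000_000):
    print(n, shortlist_unsupervised(n))
```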

Model Complexity

Every machine learning algorithm sits somewhere on a spectrum between simple and complex, and this choice significantly impacts how you’ll use and understand your results.

Simple Approaches (Like PCA and K-Means):

These methods are straightforward to understand and explain to others. They run quickly and use less computer memory. PCA returns the same result every time, and K-Means does too once you fix its random initialization (for example, with a seed); otherwise its clusters can vary between runs. The trade-off is that they might miss subtle patterns in your data because they make basic assumptions about how your data is structured. Think of them as reliable workhorses: they may not catch every nuance, but they’ll get the job done efficiently and predictably.

Complex Approaches (Like t-SNE and Advanced Clustering):

These methods can discover intricate patterns and relationships that simpler approaches miss entirely. They’re particularly good at handling data that doesn’t follow standard assumptions. However, they require more computational power, take longer to run, and often produce results that are harder to interpret or explain to others. They also typically have more settings you need to adjust, which means more opportunities for things to go wrong.

The key is matching the complexity of your method to your specific needs. If you need quick insights and can accept some limitations, go simple. If you have time and computing power and need to uncover subtle patterns, complexity might be worth it.

Computational Resources

Understanding how different algorithms use your computer’s resources helps you choose methods that will actually finish running without crashing your system.

Memory Usage Patterns:

Some algorithms need to store a lot of information in your computer’s memory while they work. Methods like PCA and K-Means are generally memory-friendly because, beyond the data itself, they only keep a compact summary of it (a few principal components or cluster centroids). On the other hand, methods that calculate distances between every pair of data points (like hierarchical clustering) need memory that grows with the square of the number of points, so they can quickly consume all available memory as your dataset grows.
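Some quick arithmetic shows why pairwise-distance methods hit a wall. Storing only the unique pairs (the condensed form that SciPy’s `pdist` returns) takes n(n − 1)/2 values. The sketch below assumes 8-byte float64 values and compares that with the memory for the raw data, assuming a hypothetical 50 features per point.

```python
def pairwise_distance_gb(n_samples: int, bytes_per_value: int = 8) -> float:
    """Memory (GB) for all unique pairwise distances: n * (n - 1) / 2 values."""
    n_pairs = n_samples * (n_samples - 1) // 2
    return n_pairs * bytes_per_value / 1e9


def raw_data_gb(n_samples: int, n_features: int, bytes_per_value: int = 8) -> float:
    """Memory (GB) for the data matrix itself, which PCA and K-Means work from."""
    return n_samples * n_features * bytes_per_value / 1e9


for n in (1_000, 10_000, 100_000):
    print(f"n={n:>7,}: distances {pairwise_distance_gb(n):10.3f} GB, "
          f"data {raw_data_gb(n, n_features=50):.3f} GB")
```

Under these assumptions, 100,000 points need roughly 40 GB just for the distances but only about 0.04 GB (40 MB) for the data, which is why the pairwise approach stops scaling long before PCA or K-Means do.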

Processing Power Requirements:

Different algorithms put varying demands on your computer’s processor. Some methods can take advantage of multiple processor cores to speed up calculations, while others must work sequentially. If you’re working with real-time applications where speed matters, you’ll want algorithms that can provide quick answers rather than those that need hours or days to complete.

Training Time

The amount of time different algorithms need to complete their work varies dramatically, and this affects which methods are practical for your situation.

Quick Methods (Minutes or Less):

K-Means typically settles on its clusters within a few rounds of adjustment, making it very time-efficient. PCA boils down to a single matrix decomposition (an eigendecomposition of the covariance matrix or a singular value decomposition of the data) rather than a long training loop, so it is consistently fast. These methods are perfect when you need results quickly or when you’re exploring data interactively.

Moderate Time Requirements (Minutes to Hours):

Methods like DBSCAN and UMAP fall into this category. They take longer than the fastest algorithms but still complete their work in a reasonable timeframe. These are good choices when you can afford to wait a bit longer for potentially better results.

Slow Methods (Hours to Days):

t-SNE and hierarchical clustering can take a very long time, especially with larger datasets. While they might produce excellent results, they’re only practical when you can afford to wait and when the improved quality justifies the time investment.

The key insight here is that there’s no universally “best” algorithm — only algorithms that are best suited to your specific combination of data size, computational resources, time constraints, and quality requirements. Start by honestly assessing these practical constraints, then choose methods that fit within them while still meeting your analytical needs.

Practical Considerations

Model selection also requires taking into account some real-world considerations such as:

- Scalability: Can the model handle data growth that may happen over time?

- Computational Resources: Are there limits on time, memory, or hardware availability that need to be considered?

- Deployment and Maintenance: Is the model stable? Is it easy to deploy and update in production?

These factors can strongly influence the choice of model. Model selection also rarely happens in one shot; it is mostly an iterative process. You may start by experimenting with a simple model and then progress to more complex ones, documenting their performance and results for comparison along the way.
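One way to run that loop is to score every candidate on the same cross-validation splits and keep a log of the results. The pure-Python sketch below compares a trivial majority-class baseline against a 1-nearest-neighbour classifier on made-up two-cluster data; the helper names, the toy data, and the choice of 5 folds are all assumptions for illustration, and in practice a library such as scikit-learn provides these utilities.

```python
import math
import random
from collections import Counter


def k_fold_indices(n, k, seed=0):
    """Shuffle row indices once and deal them into k roughly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def majority_baseline(train_X, train_y, x):
    """Simplest model: always predict the most common training label."""
    return Counter(train_y).most_common(1)[0][0]


def nearest_neighbour(train_X, train_y, x):
    """A step up in complexity: copy the label of the closest training point."""
    i = min(range(len(train_X)), key=lambda j: math.dist(train_X[j], x))
    return train_y[i]


def cross_val_accuracy(model, X, y, k=5):
    """Mean accuracy over k folds; every model sees exactly the same splits."""
    scores = []
    for fold in k_fold_indices(len(X), k):
        held_out = set(fold)
        train_X = [X[i] for i in range(len(X)) if i not in held_out]
        train_y = [y[i] for i in range(len(X)) if i not in held_out]
        correct = sum(model(train_X, train_y, X[i]) == y[i] for i in fold)
        scores.append(correct / len(fold))
    return sum(scores) / len(scores)


# Made-up data: two labelled clusters of 30 points each.
rng = random.Random(42)
X = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(30)] + \
    [(rng.gauss(4, 1), rng.gauss(4, 1)) for _ in range(30)]
y = [0] * 30 + [1] * 30

# Start simple, then add complexity, logging every result for comparison.
candidates = {"majority baseline": majority_baseline, "1-nearest neighbour": nearest_neighbour}
results = {name: cross_val_accuracy(model, X, y) for name, model in candidates.items()}
for name, accuracy in results.items():
    print(f"{name:<20} {accuracy:.2f}")
```

Keeping the splits fixed (here via the `seed` argument) is the design choice that makes the logged scores comparable from one experiment to the next.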

Conclusion

Choosing the right machine learning model requires careful consideration of multiple factors: your problem type, data characteristics, computational constraints, and performance requirements. The key is to start simple, understand your data thoroughly, and systematically experiment with different approaches.

The field of machine learning continues to evolve rapidly, with new algorithms and techniques emerging regularly. Stay curious, keep learning, and remember that the best model is the one that solves your specific problem effectively while meeting your practical constraints.