AI has been the buzzword for a while now, and yet people who are starting out in the AI world can find all the technical terms a bit overwhelming. When I first started focusing on Generative AI, I found it quite hard to figure out what to learn and where to start. We hear about models like GPT and Claude, tools like Ollama, and then there are more terms you hear frequently, like LangChain, Hugging Face, LangGraph, vector databases, etc. The list goes on and on. At some point you wonder where to start and how all these terms connect. Some even give up and tune out, saying “Well, this is not my cup of tea.” But the truth is, it’s simpler than you think. So let’s start from the very basics and try to understand just one term today: what is a Large Language Model?

What is a Large Language Model?

Think of a Large Language Model (LLM) as the world’s most sophisticated autocomplete system. You know how your phone suggests the next word when you’re texting? An LLM does something similar, but it has been trained on a huge portion of the text available on the internet, and its “brain” is a network of billions of adjustable numbers. At its core, an LLM is a prediction machine that asks one simple question: “Given these words, what’s the most likely next word?” (Strictly speaking, models work with tokens, which are whole words or pieces of words, but “word” is close enough for now.) That’s it. But don’t let the simplicity fool you. This basic step, repeated at enormous scale, produces something that can feel almost magical.
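
To see the “given these words, what comes next?” idea in action, here is a toy next-word predictor that simply counts which word followed each pair of words in a tiny text. This is a minimal sketch, not how an LLM works inside: a real LLM uses a neural network with billions of learned parameters rather than a lookup table of counts. The corpus and the function names `train`, `next_word_probabilities`, and `predict_next` are hypothetical, made up for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional, Tuple

# Hypothetical toy "training data"; a real LLM sees trillions of words.
CORPUS = (
    "the cat sat on the mat . "
    "the dog sat on the mat . "
    "the cat sat on the couch ."
)


def train(text: str, context_size: int = 2) -> Dict[Tuple[str, ...], Counter]:
    """Count which word follows each run of `context_size` words."""
    words = text.lower().split()
    table: Dict[Tuple[str, ...], Counter] = defaultdict(Counter)
    for i in range(len(words) - context_size):
        context = tuple(words[i : i + context_size])
        table[context][words[i + context_size]] += 1
    return table


def next_word_probabilities(table, prompt: str, context_size: int = 2) -> Dict[str, float]:
    """Turn the counts for the prompt's last few words into probabilities."""
    context = tuple(prompt.lower().split()[-context_size:])
    counts = table.get(context, Counter())  # .get does not add unseen contexts
    total = sum(counts.values())
    return {word: n / total for word, n in counts.items()} if total else {}


def predict_next(table, prompt: str, context_size: int = 2) -> Optional[str]:
    """Pick the most likely next word, or None if the context was never seen."""
    probs = next_word_probabilities(table, prompt, context_size)
    return max(probs, key=probs.get) if probs else None


table = train(CORPUS)
print(next_word_probabilities(table, "The cat sat on the"))
print(predict_next(table, "The cat sat on the"))  # mat
```

“on the” was followed by “mat” twice and “couch” once, so the toy model gives “mat” a 2 in 3 chance and picks it. An LLM answers the same question, but from patterns learned across vastly more text and much longer contexts.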

Large Language Models are a type of artificial intelligence designed to understand and generate human-like text. They belong to a broader category called foundation models: models trained on massive amounts of general data that can then be adapted to many different tasks. The largest LLMs are reported to contain over a trillion parameters, which are the numerical settings that store everything the model has learned.
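
To get a feel for what “over a trillion parameters” means, here is a back-of-the-envelope sketch of how much storage the parameters alone would take. It assumes each parameter is stored as a 16-bit (2-byte) number, a common choice but not a universal one, and it ignores the extra memory needed to actually run the model. The function name `parameter_memory_gb` and the example sizes are hypothetical.

```python
def parameter_memory_gb(num_parameters: int, bytes_per_parameter: int = 2) -> float:
    """Storage for the weights alone, in gigabytes (1 GB = 10**9 bytes).

    bytes_per_parameter=2 assumes 16-bit numbers; 4 would mean 32-bit.
    """
    return num_parameters * bytes_per_parameter / 1e9


# Hypothetical model sizes, chosen only to show the scale.
for label, params in [
    ("7 billion", 7_000_000_000),
    ("70 billion", 70_000_000_000),
    ("1 trillion", 1_000_000_000_000),
]:
    print(f"{label:>10} parameters -> about {parameter_memory_gb(params):,.0f} GB")
```

Under that assumption, a trillion parameters works out to about 2,000 GB (2 TB) just to hold the numbers the model has learned.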

How LLMs Learn

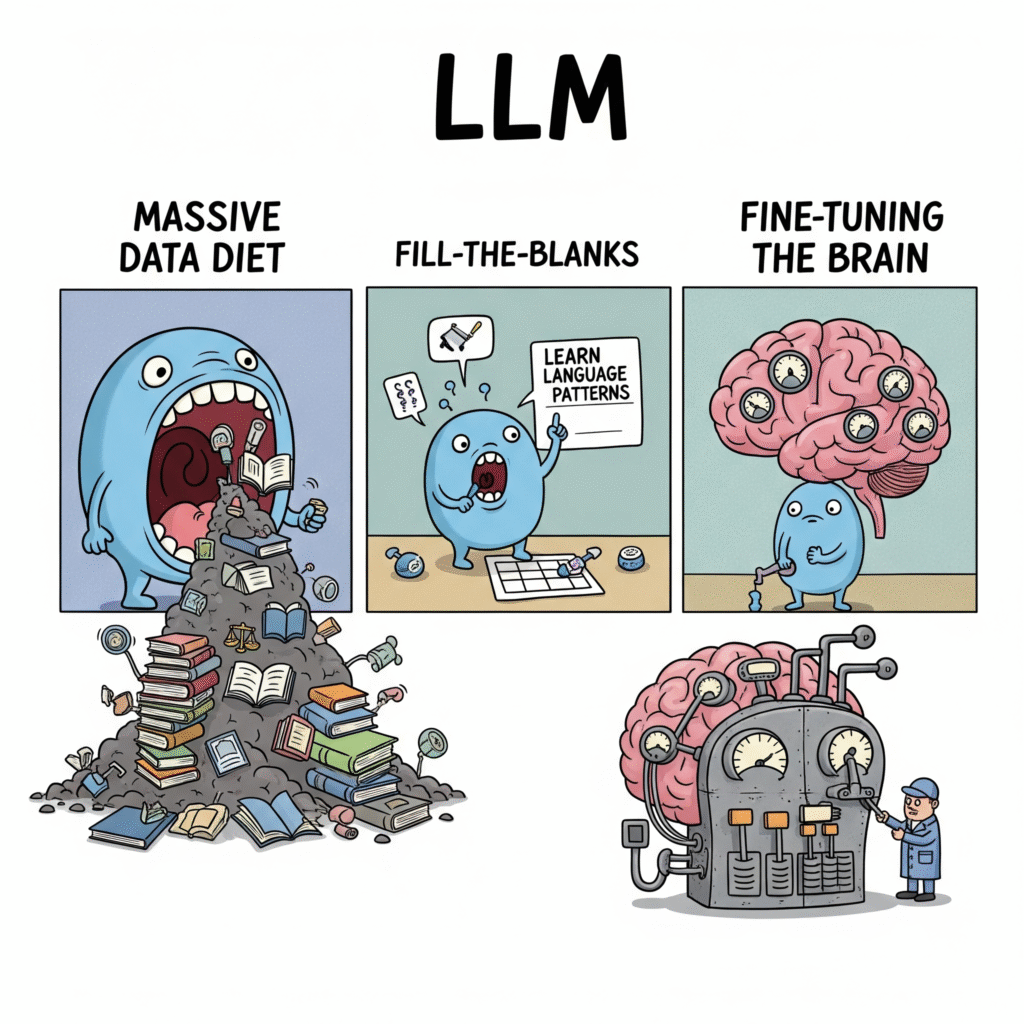

We can think of the training process as happening in three main stages, much like how a student might learn to write.

Stage 1: The Massive Data Diet

First, the model reads an enormous amount of text: trillions of words from books, websites, articles, and even code. To put this in perspective, a person reading eight hours a day, every day, for an entire lifetime would get through only a few billion words, so trillions of words amounts to hundreds or even thousands of lifetimes of reading. That’s the scale we’re dealing with.
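
Here is a rough way to check the scale of “trillions of words” against a human reader. Every number below is an assumption picked for a simple estimate: a reading speed of 250 words per minute, 8 hours of reading every day for 80 years, and a hypothetical corpus of 10 trillion words.

```python
# Assumptions (all hypothetical, picked for a rough estimate):
WORDS_PER_MINUTE = 250      # a commonly quoted adult reading speed
HOURS_PER_DAY = 8           # reading as a full-time job, every single day
YEARS = 80                  # an entire lifetime
CORPUS_WORDS = 10 * 10**12  # "trillions of words": here, 10 trillion


def words_per_lifetime() -> int:
    """Words one person could read in a lifetime under the assumptions above."""
    return WORDS_PER_MINUTE * 60 * HOURS_PER_DAY * 365 * YEARS


def reading_lifetimes(corpus_words: int) -> float:
    """How many such lifetimes it would take to read `corpus_words` words once."""
    return corpus_words / words_per_lifetime()


print(f"One lifetime of reading: {words_per_lifetime():,} words")
print(f"Lifetimes needed for the corpus: {reading_lifetimes(CORPUS_WORDS):,.0f}")
```

With these assumptions, one lifetime is about 3.5 billion words, so 10 trillion words would take roughly 2,850 lifetimes to read.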

Stage 2: Playing Fill-in-the-Blank

During training, the model constantly plays a guessing game. It sees sentences like “The cat sat on the ___” and tries to predict whether the next word should be “mat,” “couch,” or “floor.” It does this billions and billions of times, across an enormous variety of text.
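
In practice, the model doesn’t just pick one word outright. It gives every candidate a score and turns those scores into probabilities, commonly with a function called softmax. The sketch below assumes made-up scores for only three candidates; a real model scores every token in its vocabulary.

```python
import math
from typing import Dict


def softmax(scores: Dict[str, float]) -> Dict[str, float]:
    """Convert raw scores into probabilities that are positive and sum to 1."""
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - top) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


# Hypothetical scores the model might assign after "The cat sat on the".
scores = {"mat": 3.0, "couch": 1.5, "floor": 1.0}
probs = softmax(scores)
for word, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{word:>6}: {p:.0%}")
```

With these made-up scores, “mat” gets about 74% of the probability, with the rest split between “couch” and “floor.” Higher scores always mean higher probabilities, and the probabilities always add up to 100%.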

Stage 3: Adjusting the Dials

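After every guess, the model checks how far off it was and makes tiny adjustments to its internal settings. Think of billions of tiny dials, each nudged in whichever direction would have made the correct word a little more likely. A confident wrong guess leads to bigger nudges, while a guess that was already right leads to only small ones that reinforce what the model already does well. This process repeats until the model becomes remarkably good at predicting patterns in language.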
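
The “nudge the dials” step can be sketched in miniature as gradient descent on a cross-entropy loss, the basic idea behind how neural networks like LLMs are trained. In this toy version, the three candidate scores themselves play the role of the dials; in a real LLM, the dials are the model’s billions of weights, and the adjustments are pushed through all of them with backpropagation and more refined optimizers. The starting scores and the learning rate of 0.5 are hypothetical.

```python
import math
from typing import Dict, Tuple


def softmax(scores: Dict[str, float]) -> Dict[str, float]:
    """Convert raw scores into probabilities that sum to 1."""
    top = max(scores.values())
    exps = {w: math.exp(s - top) for w, s in scores.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}


def training_step(
    scores: Dict[str, float], correct_word: str, learning_rate: float = 0.5
) -> Tuple[Dict[str, float], float]:
    """One gradient-descent step on the cross-entropy loss.

    Returns the nudged scores and the loss measured *before* the nudge.
    """
    probs = softmax(scores)
    loss = -math.log(probs[correct_word])  # large when the correct word looked unlikely
    new_scores = {}
    for word, s in scores.items():
        target = 1.0 if word == correct_word else 0.0
        gradient = probs[word] - target  # d(loss)/d(score) for softmax + cross-entropy
        new_scores[word] = s - learning_rate * gradient  # step downhill
    return new_scores, loss


# Hypothetical starting point: the model wrongly favors "couch".
scores = {"mat": 1.0, "couch": 1.2, "floor": 0.8}
start_prob = softmax(scores)["mat"]
losses = []
for step in range(1, 6):
    scores, loss = training_step(scores, "mat")
    losses.append(loss)
    print(f"step {step}: loss before = {loss:.3f}, P(mat) after = {softmax(scores)['mat']:.2f}")
```

Each step raises the score of the correct word and lowers the others, so the loss shrinks and “mat” becomes the top guess. Notice that the nudge for each word is its predicted probability minus its target: when the model already gives the right word a high probability, that difference is small, which matches the idea that correct guesses only need small adjustments.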

Conclusion

Well, that’s all for today! Let’s keep it simple and learn slowly. Small chunks of information are easier to understand and commit to memory than long essays. After all, unlike LLMs, we’re only human! If you liked this article, be sure to give me a few claps.