Picture this:

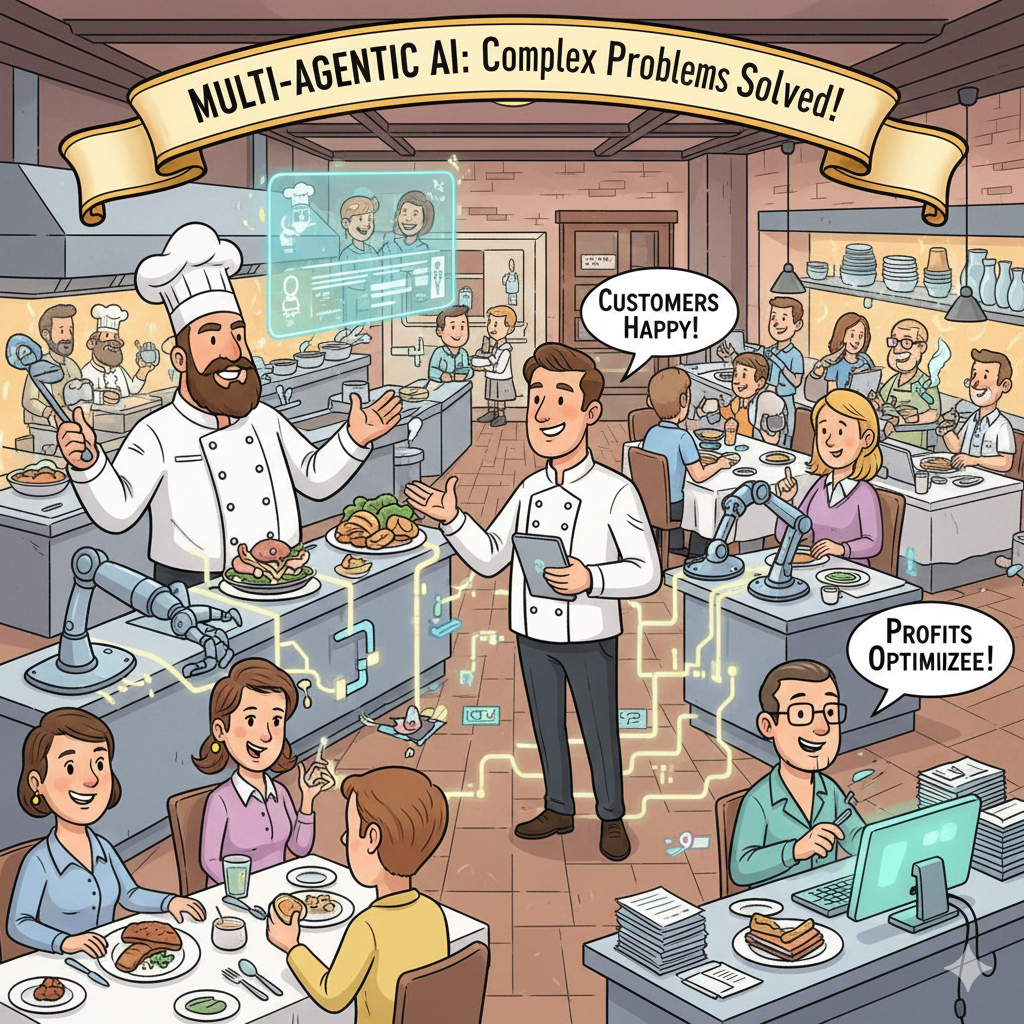

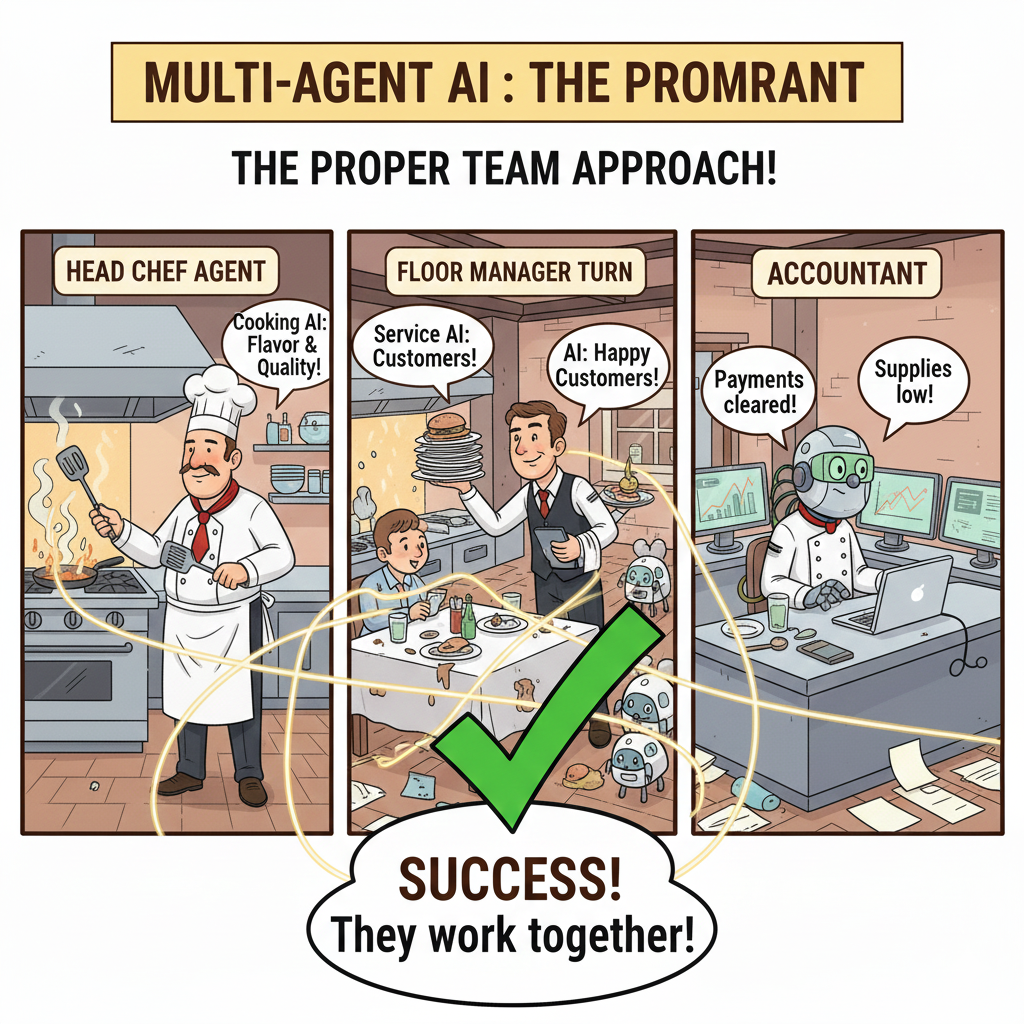

You’re running a bustling restaurant. You could theoretically hire one person who knows everything — cooking, serving, accounting, ordering supplies. But that’s unrealistic. Instead, you would hire a head chef to run the kitchen, a floor manager to handle customers, and a bookkeeper to manage finances. Each person specializes in their area, talks to the others when needed, and together they accomplish far more than any one person could. That’s basically what multi-agentic AI does. It breaks down complex problems into specialized components that work together.

If you’re studying computer science, you’ve probably heard the buzz around agentic AI. But what actually is it? And why should you care? In this guide, we’ll walk through what agentic systems are, how they work, and what they can actually do. We’ll use real examples, practical scenarios, and honest talk about both the promise and the pitfalls.

What’s Agentic AI?

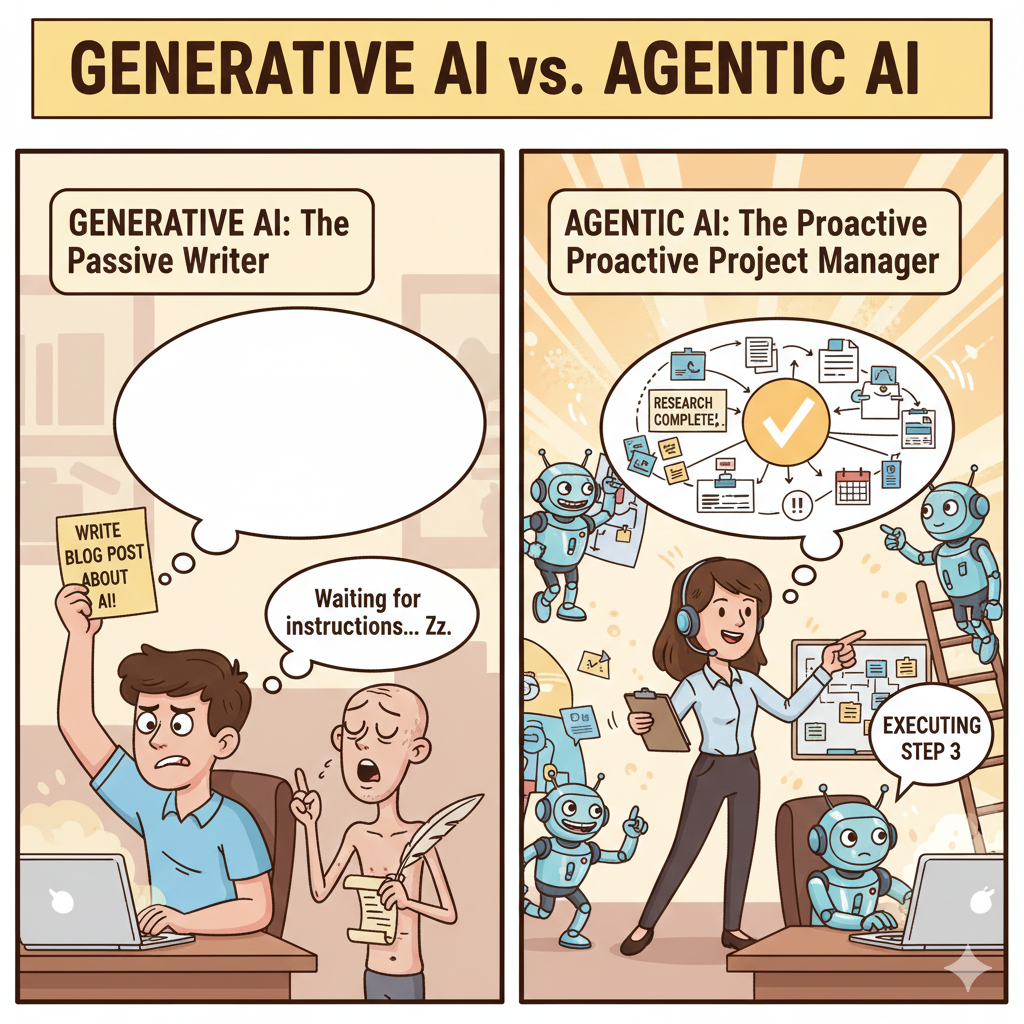

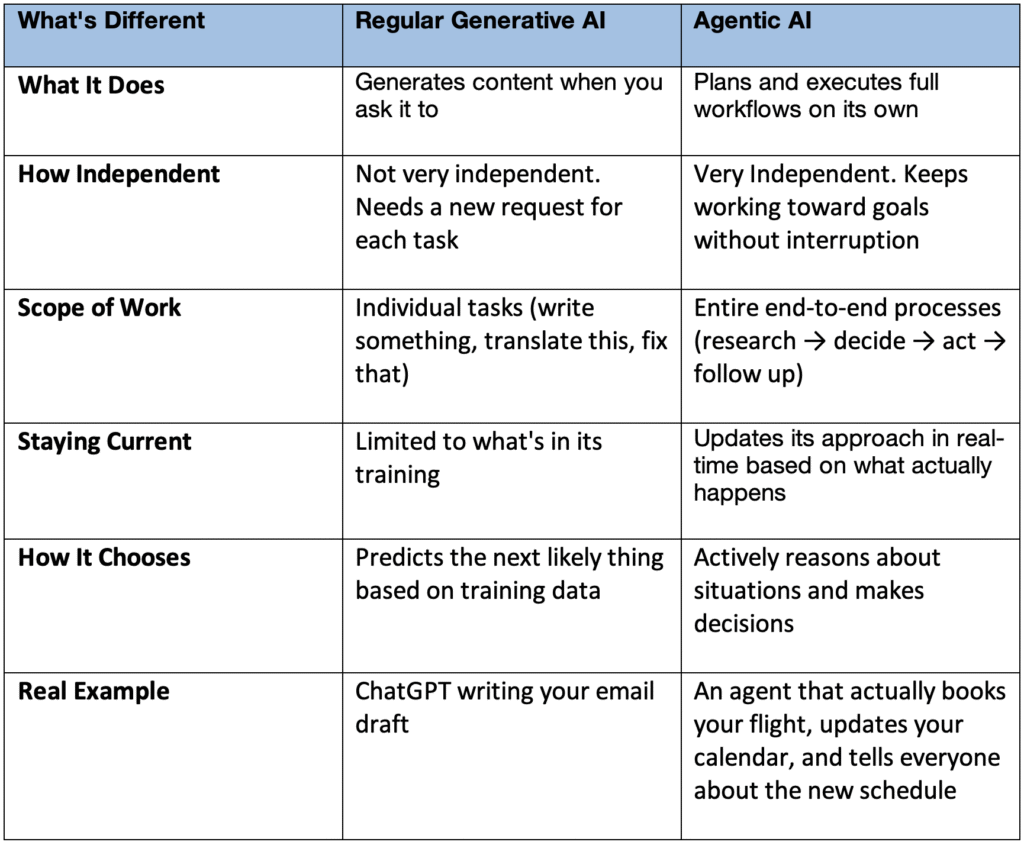

Think about how you normally interact with AI today. You ask ChatGPT (or any chatbot of your choice) a question. It gives you an answer. That’s it. The AI doesn’t do anything other than generate text in response to your prompt.

Agentic AI works fundamentally differently. These systems can operate independently to achieve goals, make decisions, and take action with relatively little human hand-holding. They’re not just passive responders. They actively look at what’s happening, figure out what needs to be done, break it down into steps, and execute those steps, often multiple times, adjusting as they go based on what they discover.

A better way to think about it: Generative AI is like a writer who only writes when you specifically ask them to. Agentic AI is more like a project manager. It understands what you’re trying to accomplish, figures out the steps needed, handles the tasks, deals with problems as they come up, and delivers results without you micromanaging every detail.

What Makes an Agentic System Tick?

If you looked under the hood of any agentic system, you’d find these four essential ingredients:

Observation: The agent checks out its surroundings and gathers information. This might mean pulling data from an API, querying a database, reading files, or getting input from a user. It’s basically the agent’s sensory system.

Understanding and Analysis: The system uses an LLM as its decision-making core. It looks at what it observed and tries to make sense of it — figuring out what’s happening and what needs to happen next.

Planning: Here’s where the agent breaks down big, complicated goals into smaller, achievable steps. It thinks about what resources it has available and what sequence of actions makes sense.

Doing: The agent actually executes its plan. It calls APIs, runs code, updates databases, sends emails — whatever the situation requires. This is what separates agentic systems from all the other AI that just talks about doing things.
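
To make the four ingredients concrete, here is a minimal sketch in Python. Everything in it is a stand-in chosen for illustration: the class name `SimpleAgent`, the inbox scenario, and the keyword rule that plays the role of the LLM are assumptions, not part of any real framework.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SimpleAgent:
    """Toy agent with the four stages; a keyword rule stands in for the LLM."""

    inbox: list[str]
    log: list[str] = field(default_factory=list)

    def observe(self) -> list[str]:
        # Observation: gather raw input (here, the messages in the inbox).
        return list(self.inbox)

    def analyze(self, messages: list[str]) -> list[str]:
        # Understanding: a real system would ask an LLM; we just flag a keyword.
        return [m for m in messages if "urgent" in m.lower()]

    def plan(self, urgent: list[str]) -> list[tuple[str, str]]:
        # Planning: turn the goal into an ordered list of (action, target) steps.
        return [("reply", m) for m in urgent] + [("archive", "everything else")]

    def act(self, steps: list[tuple[str, str]]) -> list[str]:
        # Doing: execute each step; this sketch only records what it would do.
        for action, target in steps:
            self.log.append(f"{action}: {target}")
        return self.log

    def run(self) -> list[str]:
        return self.act(self.plan(self.analyze(self.observe())))


agent = SimpleAgent(inbox=["URGENT: server down", "Lunch on Friday?"])
print(agent.run())
```

In a real agent, `analyze` and `plan` would be LLM calls and `act` would touch real systems, but the shape of the pipeline stays the same.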

Multi-Agent Systems: Why One Brain Isn’t Always Enough

The Basic Idea

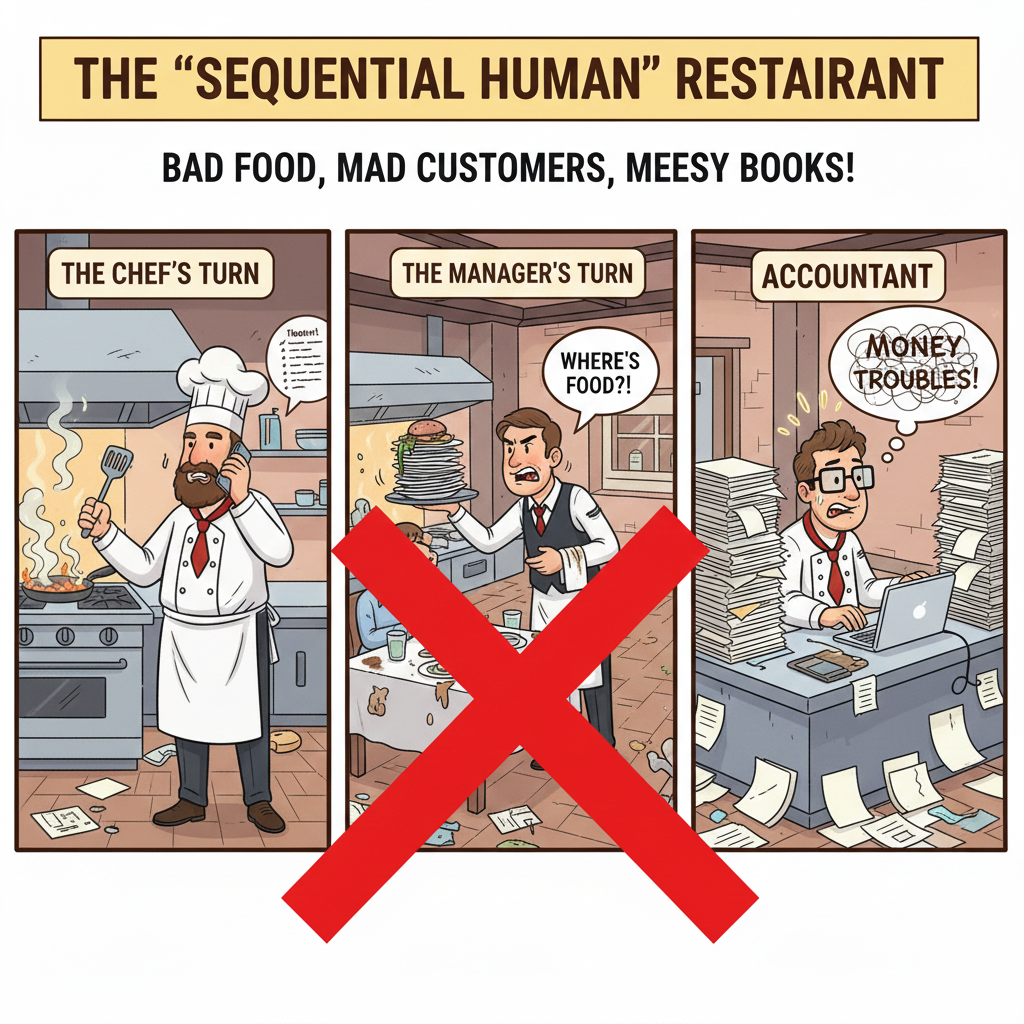

Here’s another restaurant metaphor. Imagine if you required the chef, manager, and accountant to be literally the same person, doing each job sequentially. You’d get bad food, frustrated customers, and messy books; one person can’t truly excel at everything simultaneously.

Multi-Agent Systems work like a proper team instead. You have multiple specialized AI agents, each with particular skills and focus areas, working together and talking to each other to tackle difficult problems. Instead of one monolithic system, you’ve got a distributed network where different agents handle different aspects of the challenge.

Why Specialization Matters

Think about what happens when you specialize:

- The head chef is a cooking expert, not an accountant.

- The accountant understands finance deeply, but probably shouldn’t be plating desserts.

- The manager focuses on customer experience and team coordination.

In multi-agent systems, each component can specialize similarly:

- Maybe you’ve got a Data Retrieval Agent that’s really good at querying databases and fetching information.

- A Planning Agent that excels at breaking complicated tasks into logical steps.

- An Execution Agent that handles calling APIs and managing systems.

- A Checking Agent that verifies results and catches mistakes.

This specialization creates real benefits:

Deeper Expertise: Each agent can be fine-tuned for its particular job, producing better, more accurate results.

Growing With Demand: You can add new agents to the system as you need new capabilities. No need to rebuild the entire architecture, just plug in a new specialist.

Resilience: When one agent has a problem, the others can keep running. A single failure is less likely to bring down the whole system, because the distributed approach lets you contain it.

Speed: Agents can work on independent parts of a problem simultaneously rather than in sequence, which can cut execution time substantially.
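
Here is a rough sketch of the resilience and speed points, using Python’s standard `concurrent.futures` module. The three agent functions are hypothetical stubs, and the sketch assumes their subtasks are independent of each other; agents that depend on one another’s output would need to run in sequence instead.

```python
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor


def retrieval_agent(task: str) -> str:
    # Hypothetical specialist: pretends to fetch data.
    return f"retrieved data for '{task}'"


def planning_agent(task: str) -> str:
    # Hypothetical specialist: pretends to draft a plan.
    return f"3-step plan for '{task}'"


def checking_agent(task: str) -> str:
    # Hypothetical specialist that fails, to show failure isolation.
    raise RuntimeError("checker unavailable")


AGENTS = {
    "retrieval": retrieval_agent,
    "planning": planning_agent,
    "checking": checking_agent,
}


def run_team(task: str, agents=AGENTS) -> dict[str, str]:
    """Run every agent in its own thread; record errors instead of crashing."""
    results = {}
    with ThreadPoolExecutor(max_workers=len(agents)) as pool:
        futures = {name: pool.submit(fn, task) for name, fn in agents.items()}
        for name, future in futures.items():
            try:
                results[name] = future.result(timeout=5)
            except Exception as exc:  # one agent failing does not stop the rest
                results[name] = f"FAILED: {exc}"
    return results


for name, outcome in run_team("quarterly report").items():
    print(f"{name}: {outcome}")
```

The failing checker shows up as a `FAILED` entry while the other two agents still return their results.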

The Real Mechanics: Tools and Chains

Understanding how agents function requires looking at two key mechanisms: tools and chains. These are how agents connect to the real world and coordinate complex work.

Tools: How Agents Actually Get Things Done

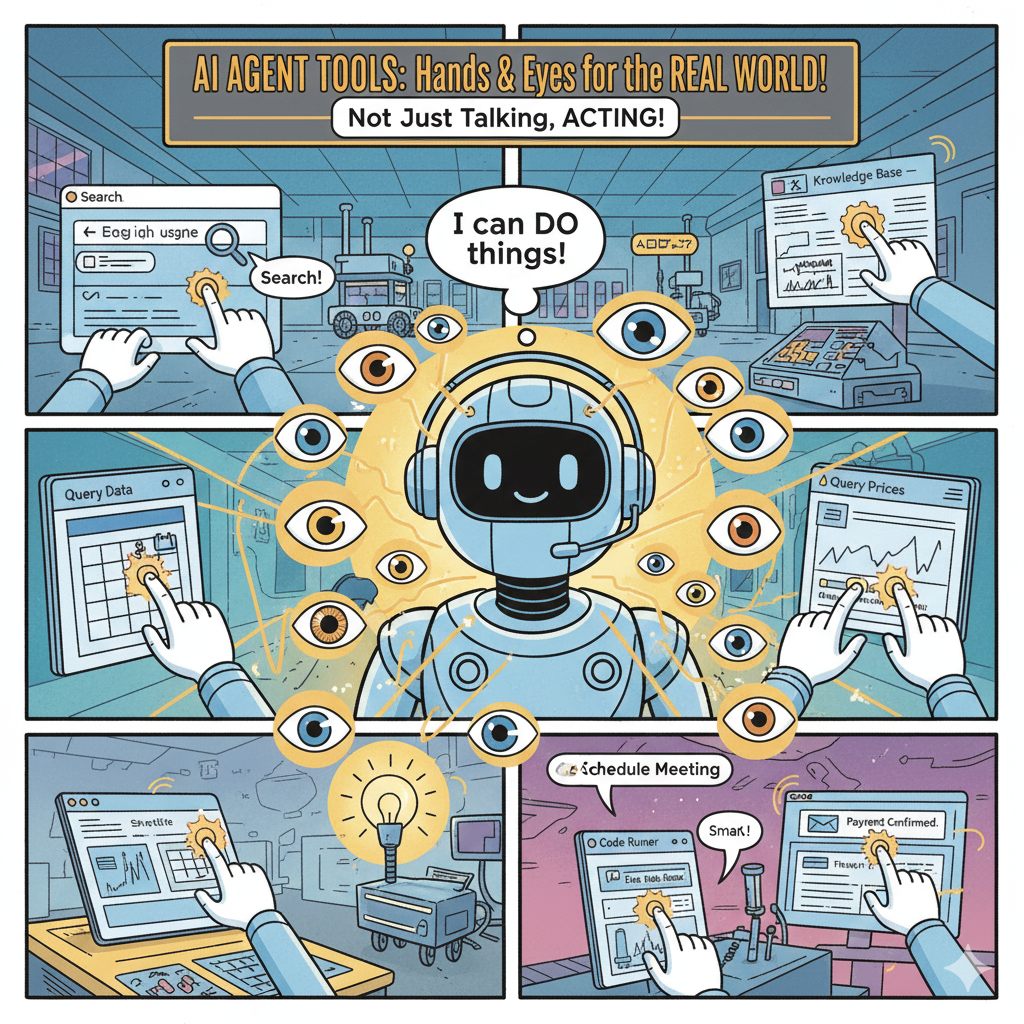

Here’s the critical thing about AI language models: without tools, they’re just talking to themselves. An LLM can reason, write, and explain, but on its own it can’t act on real systems. Tools close that gap.

What Are These Tools Exactly?

A tool is basically a function or connection to an API that an agent can use to accomplish concrete tasks or grab information. Think of them as the agent’s hands and eyes. Tools can be many different things:

- Data Sources: Search engines, database connections, knowledge bases, Wikipedia APIs, company document retrieval systems.

- Computational Power: Math functions, data transformation, code runners.

- Real Systems: Email systems, payment processors, calendar apps.

- Hardware Interaction: Robot controls, smart home devices, IoT equipment.
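
In code, a tool can be as simple as a named function the agent is allowed to call. The sketch below shows one possible way to keep a registry of them; the `tool` decorator, the `TOOLS` dictionary, and both example functions are made up for illustration.

```python
from __future__ import annotations

import math
from typing import Callable

# Registry mapping tool names to callables (a hypothetical convention).
TOOLS: dict[str, Callable[..., object]] = {}


def tool(fn):
    """Register a function under its own name so an agent can look it up."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def circle_area(radius: float) -> float:
    # "Computational power" style tool.
    return math.pi * radius ** 2


@tool
def lookup_employee(name: str) -> dict:
    # "Data source" style tool; the directory is invented sample data.
    directory = {"ada": {"team": "research"}}
    return directory.get(name.lower(), {})


print(sorted(TOOLS))
print(TOOLS["lookup_employee"]("Ada"))
```

The agent never needs to know how a tool is implemented, only its name and what arguments it takes.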

How Function Calling Actually Works

Modern language models use function calling to work with tools. Here’s the practical flow:

- Describe the Tools: Developers write out available tools using a structured format (usually JSON Schema).

- Model Sees the Descriptions: The tool descriptions are passed to the LLM along with the prompt, so it knows what tools exist and what they do.

- Smart Deciding: When handling a user request, the model figures out which tool(s) would help.

- Structured Request: Instead of just making up an answer, the model outputs a structured function call specification (formatted JSON saying “call this function with these specific values”).

- Actually Execute: The system reads this structured request and runs the real tool.

- Feedback Loop: The tool’s result comes back to the model, which uses it to keep reasoning.
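
The flow above can be sketched end to end with a stand-in for the model. Treat the details as assumptions: the exact field names in tool descriptions and in the model’s structured output differ between providers, `fake_model` is a hard-coded stub rather than a real LLM call, and the weather data is invented.

```python
import json

# Describe the tool; "parameters" follows JSON Schema conventions.
WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Return the current temperature for a city.",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def get_weather(city: str) -> dict:
    # Stand-in for a real API call; the temperatures are made up.
    fake_data = {"London": 14, "Cairo": 31}
    return {"city": city, "temp_c": fake_data.get(city)}


SCHEMAS = {WEATHER_TOOL["name"]: WEATHER_TOOL}
FUNCTIONS = {"get_weather": get_weather}


def fake_model(user_message: str, tools: list) -> str:
    # Stub for the LLM: always emits the same structured function call.
    return json.dumps({"name": "get_weather", "arguments": {"city": "London"}})


def execute_call(raw_call: str) -> str:
    """Parse the structured request, check required arguments, run the tool."""
    call = json.loads(raw_call)
    name, args = call["name"], call["arguments"]
    if name not in FUNCTIONS:
        raise KeyError(f"unknown tool: {name}")
    required = SCHEMAS[name]["parameters"]["required"]
    missing = [key for key in required if key not in args]
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    return json.dumps(FUNCTIONS[name](**args))


raw = fake_model("What's the weather in London?", [WEATHER_TOOL])
tool_result = execute_call(raw)  # this string would be sent back to the model
print(tool_result)
```

The important design point is that the model only produces a description of the call. Your own code decides whether and how to run it.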

Chains: Stringing Together Complex Work

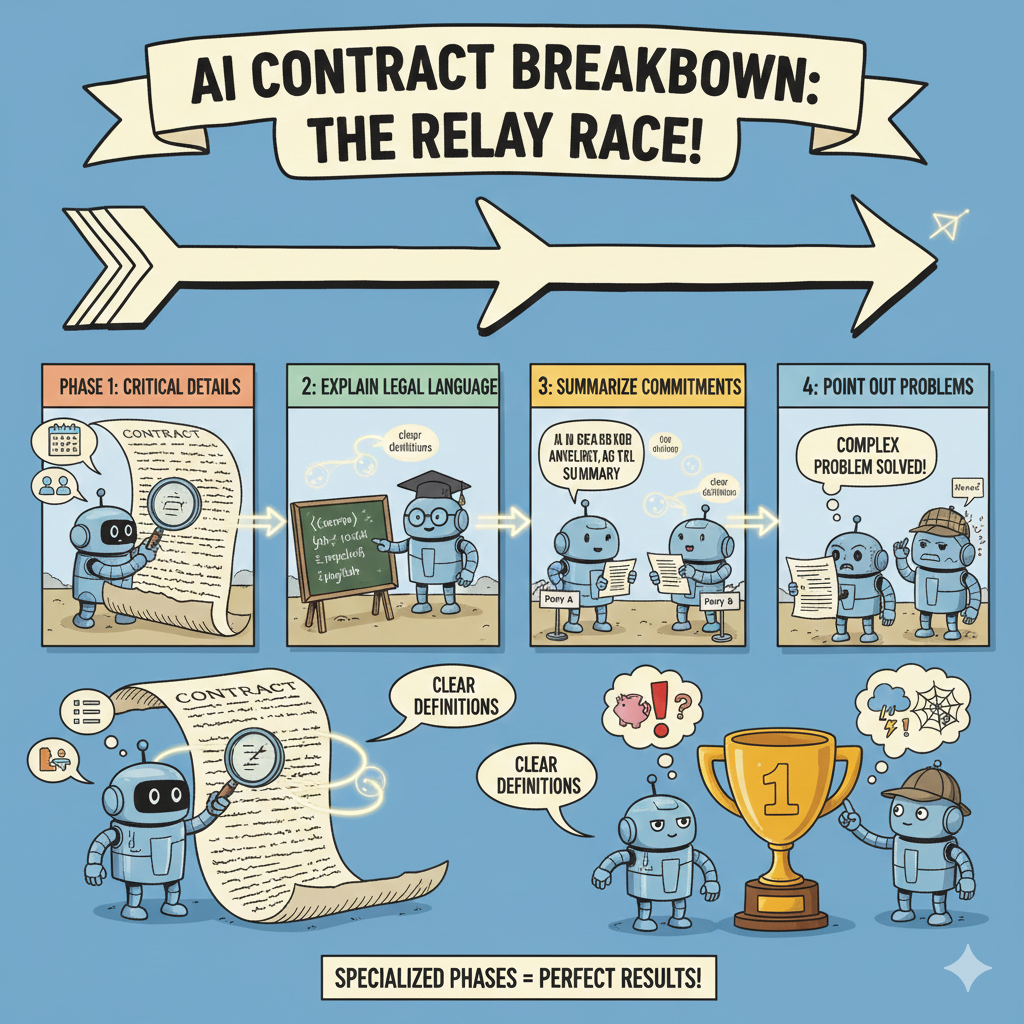

While tools let agents do individual actions, chains let them orchestrate sequences of actions that build toward complicated goals. Chains address a fundamental problem: LLMs tend to perform worse on very complicated tasks when you ask them to do everything at once.

Breaking Down Complex Work

Prompt chaining (also called agentic chaining) tackles this by slicing complex problems into a sequence of smaller, focused steps where each step’s results feed into the next one. Instead of throwing everything at the model at once and hoping it works, you create a workflow of specialized prompts.

Let’s Walk Through an Example

Suppose you want an AI to work through a complicated contract and create a summary. That’s complex, so you break it into a chain:

Phase 1: Pull Out the Critical Details

- Instruction: “Go through this contract and identify all the dates, who the parties are, and what each party is agreeing to do”

- Result: Organized list of key information

Phase 2: Explain the Legal Language

- Instruction: “Take these legal phrases and explain what they actually mean in everyday English: [the terms from Phase 1]”

- Result: Clear definitions

Phase 3: Summarize Commitments

- Instruction: “Using what we found, write out a paragraph for each party explaining what they’re committing to”

- Result: Clear statement of obligations

Phase 4: Point Out Problems

- Instruction: “Given all these obligations, what could go wrong or be risky?”

- Result: Risk analysis

Each phase focuses on one thing, which tends to make each answer more reliable. One phase’s answers become the next phase’s material.
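
Here is a small sketch of the contract chain. It makes two simplifications: each phase only sees the output of the phase right before it, and `stub_llm` is a placeholder that echoes part of the prompt instead of calling a real model. The prompt wording paraphrases the four phases above.

```python
from __future__ import annotations

from typing import Callable

# One prompt template per phase; {previous} is filled with the prior output.
PHASES = [
    "Identify all dates, parties, and obligations in this contract:\n{previous}",
    "Explain these legal terms in everyday English:\n{previous}",
    "Write one paragraph per party describing their commitments:\n{previous}",
    "Given these obligations, what could go wrong or be risky?\n{previous}",
]


def run_chain(document: str, llm: Callable[[str], str], phases=PHASES) -> list[str]:
    """Feed each phase's output into the next and keep every intermediate result."""
    outputs = []
    previous = document
    for template in phases:
        previous = llm(template.format(previous=previous))
        outputs.append(previous)
    return outputs


def stub_llm(prompt: str) -> str:
    # Placeholder model: echoes the first line of the prompt it received.
    return f"[answer to: {prompt.splitlines()[0]}]"


for step_number, output in enumerate(run_chain("...contract text...", stub_llm), 1):
    print(step_number, output)
```

Swapping `stub_llm` for a function that calls a real model is the only change needed to run the chain for real.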

Why This Approach Wins

Chaining produces better outcomes because:

- Narrow Focus: Each step handles one specific thing rather than trying to solve an entire puzzle at once

- Catching Issues Early: If one step’s output isn’t very good, you can catch that before the next step makes it worse

- Being Clear: You can see the intermediate steps and understand how conclusions were reached

- Flexibility: You can improve one step without having to rebuild everything else
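
The “catching issues early” and “being clear” points can be sketched by pairing every step with a check and keeping a trace of intermediate outputs. The step names, the checks, and the `StepFailed` exception are all hypothetical; real checks might validate JSON, look for required fields, or ask a second model to review the output.

```python
from __future__ import annotations

from typing import Callable

# Each step is (name, transform, validity check).
Step = tuple[str, Callable[[str], str], Callable[[str], bool]]


class StepFailed(Exception):
    """Raised when a step's output does not pass its check."""


def run_checked_chain(text: str, steps: list[Step]) -> dict[str, str]:
    """Run steps in order and stop at the first output that fails its check."""
    trace = {}
    for name, transform, is_valid in steps:
        text = transform(text)
        trace[name] = text  # keep intermediate results for inspection
        if not is_valid(text):
            raise StepFailed(f"step '{name}' produced unusable output: {text!r}")
    return trace


steps = [
    ("extract", lambda s: s.strip(), lambda out: len(out) > 0),
    ("shout", lambda s: s.upper(), lambda out: out.isupper()),
]
print(run_checked_chain("  key terms  ", steps))
```

Because a bad output stops the chain immediately, later steps never get the chance to build on it.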

The Feedback Loop: How Agents Really Work

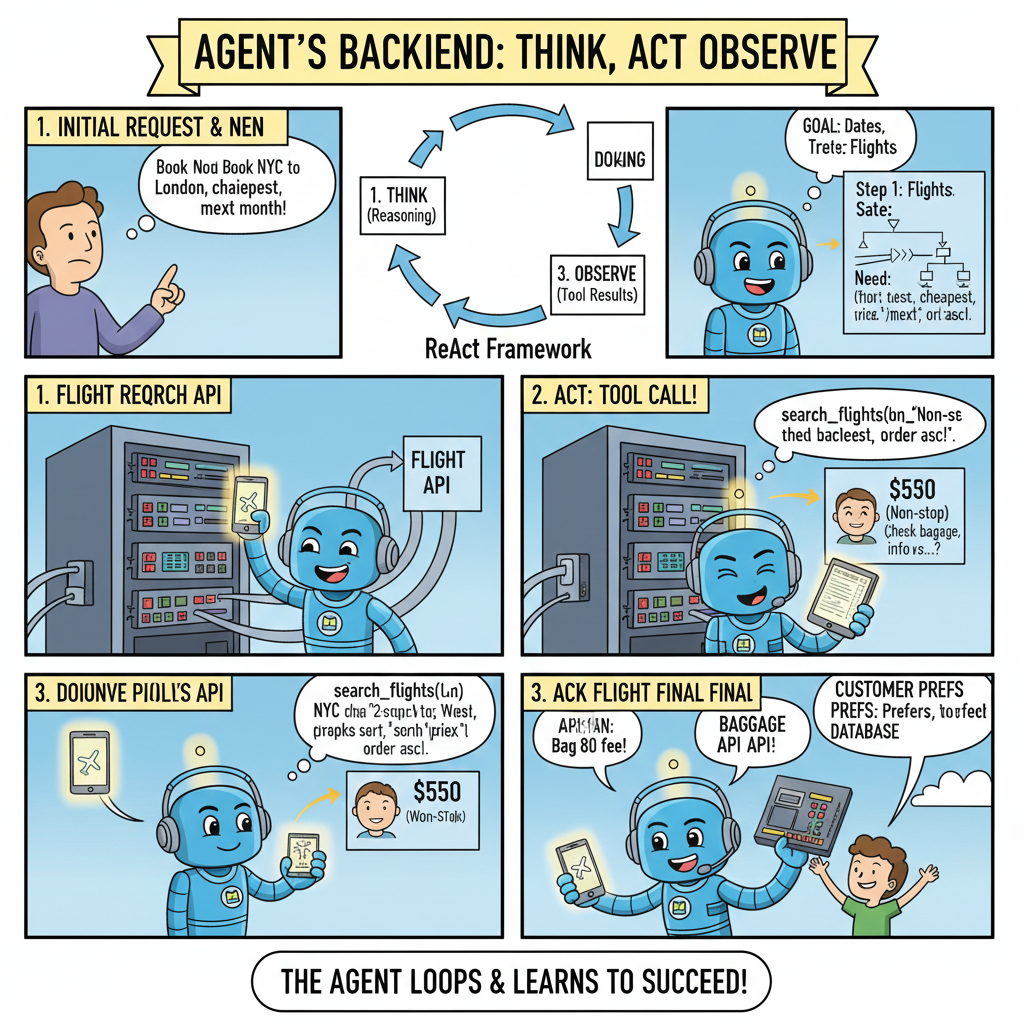

The actual magic happens when you combine reasoning, tools, and chains in a continuous loop.

The feedback loop is basically the cycle that agentic systems run through repeatedly:

- Starting Point: Agent gets a request or goal.

- Thinking (via the LLM): Agent reasons about what needs to happen and creates a plan.

- Choosing and Doing: Agent picks the right tools and executes them.

- Getting Results: Agent receives what the tools return.

- Storing and Learning: Agent saves what it learned and updates its understanding of the situation.

- What’s Next?: If the goal is done → send response to user; if not → loop back to thinking with the new information.

The loop keeps going until the agent reaches its goal or hits a stopping condition, such as a limit on the number of steps.
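
Stripped down to its skeleton, the loop might look like the sketch below. The decision format (a dictionary with `action`, `args`, and `answer` keys), the `scripted_think` function standing in for the LLM, and the `max_steps` limit are all assumptions made for this example.

```python
def run_agent(goal, think, tools, max_steps=5):
    """Loop: think, act, store the result, repeat until finished or out of steps."""
    memory = []
    for _ in range(max_steps):
        decision = think(goal, memory)  # Thinking (an LLM call in a real agent)
        if decision["action"] == "finish":
            return decision["answer"], memory
        result = tools[decision["action"]](**decision["args"])  # Choosing and Doing
        memory.append({"action": decision["action"], "result": result})  # Storing
    return None, memory  # stopping condition reached without an answer


def scripted_think(goal, memory):
    # Stand-in for the LLM: search once, then finish with what was found.
    if not memory:
        return {"action": "search", "args": {"query": goal}}
    return {"action": "finish", "answer": f"Found: {memory[-1]['result']}"}


DEMO_TOOLS = {"search": lambda query: f"3 results for '{query}'"}

answer, memory = run_agent("cheap flights", scripted_think, DEMO_TOOLS)
print(answer)
```

The step limit matters in practice: without it, an agent that never decides it is finished would loop forever.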

Practical Walk-Through: Travel Planning

User says: “Book me a flight from New York to London next month, find the absolute cheapest option”

- Thinking: “I need to search for flights, look at prices, and complete the booking”

- Doing: Call the flight search tool with the right parameters (NYC → London, next month, sort by price, cheapest first)

- Results: Tool returns multiple flight options with prices and details

- Thinking: “I have options now, but I should check baggage rules and see if there are customer preferences. The absolute cheapest is $450 but has 2 stops and basically no baggage allowance”

- Doing: Check baggage policies and look at this customer’s profile for preferences

- Results: This customer usually prefers non-stop flights if the price difference is reasonable

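- Thinking: “The non-stop flight costs $530, only $80 more than the cheapest option. Given this customer’s preferences, that difference looks reasonable”

- Doing: Book the non-stop flight at $530 that fits within the customer’s preferences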

- Responding: “Done! Here’s your booking: [details]”

You can see how the agent isn’t just following a script. It observes information, makes judgments, adjusts its approach, and continues looping through the process until it actually accomplishes the goal.
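
The judgment call in this walk-through can be written down as a small decision rule. The $450 and $530 prices come from the example above; the third flight, the `Flight` class, and the $100 threshold for a “reasonable” price difference are assumptions added for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flight:
    price: int  # in dollars
    stops: int


def choose_flight(flights, prefers_nonstop, max_premium=100):
    """Pick the cheapest flight, unless a non-stop costs at most max_premium more."""
    cheapest = min(flights, key=lambda f: f.price)
    if not prefers_nonstop:
        return cheapest
    nonstop = [f for f in flights if f.stops == 0]
    if not nonstop:
        return cheapest
    best_nonstop = min(nonstop, key=lambda f: f.price)
    if best_nonstop.price - cheapest.price <= max_premium:
        return best_nonstop
    return cheapest


# The $610 option is hypothetical, added so there is more than one non-stop.
options = [Flight(450, 2), Flight(530, 0), Flight(610, 0)]
print(choose_flight(options, prefers_nonstop=True))
```

A real agent would reach this decision through LLM reasoning rather than a fixed rule, but writing the rule out makes the trade-off visible.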

Conclusion

This post covered what agentic AI is, how it differs from generative AI, and, most importantly, how it works. But this is only the beginning. Real-world agentic systems are more sophisticated. They use advanced frameworks to manage reasoning. They maintain memory across conversations. When multiple agents operate together, they need orchestration, i.e. careful coordination, to work effectively at scale.

In Part 2 of this series, we’ll explore these advanced concepts. You’ll learn about the ReAct framework for structuring agent reasoning, discover how agents remember and maintain context, and understand orchestration patterns for coordinating multiple agents. We’ll also cover limitations: agentic systems can hallucinate, and they struggle with incomplete information.