In the previous article, we briefly saw how LLMs think and process information. In this article, we dive deeper into the architecture behind LLMs: the Transformer.

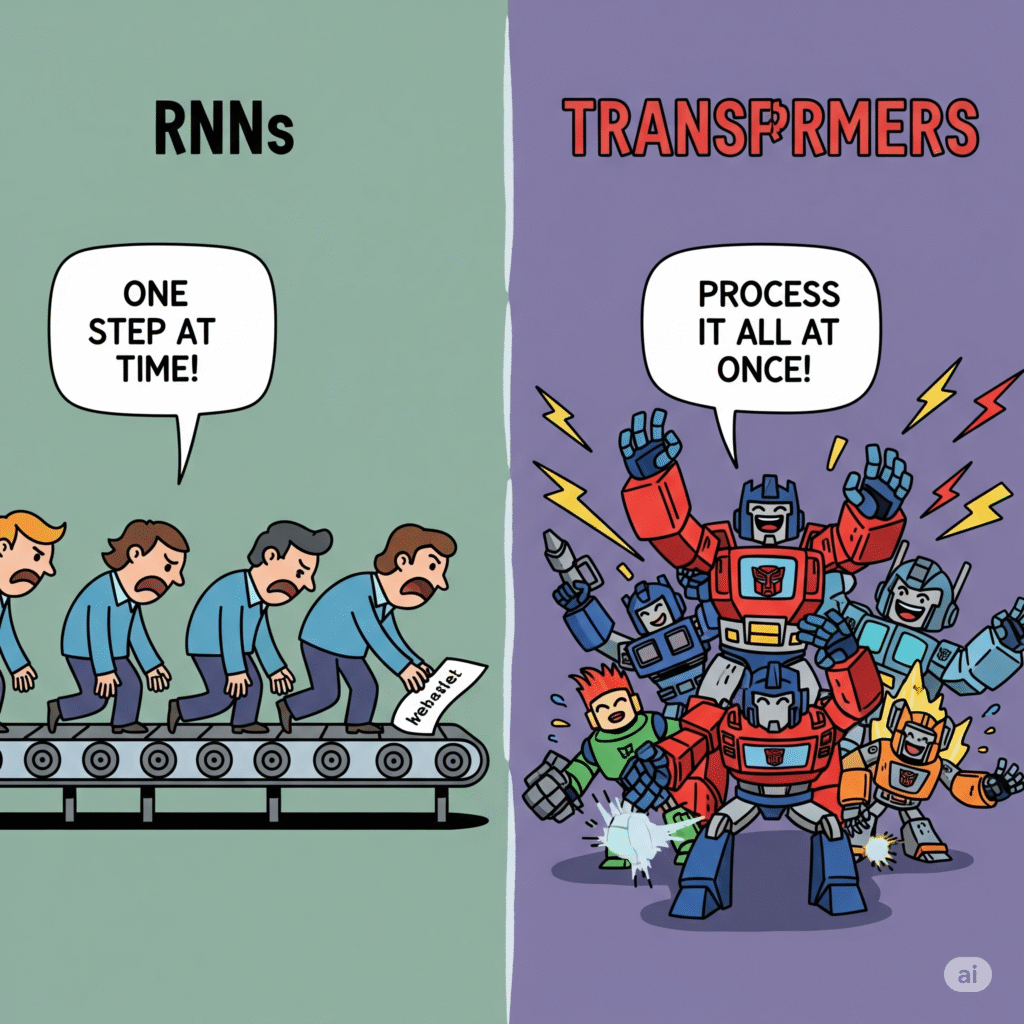

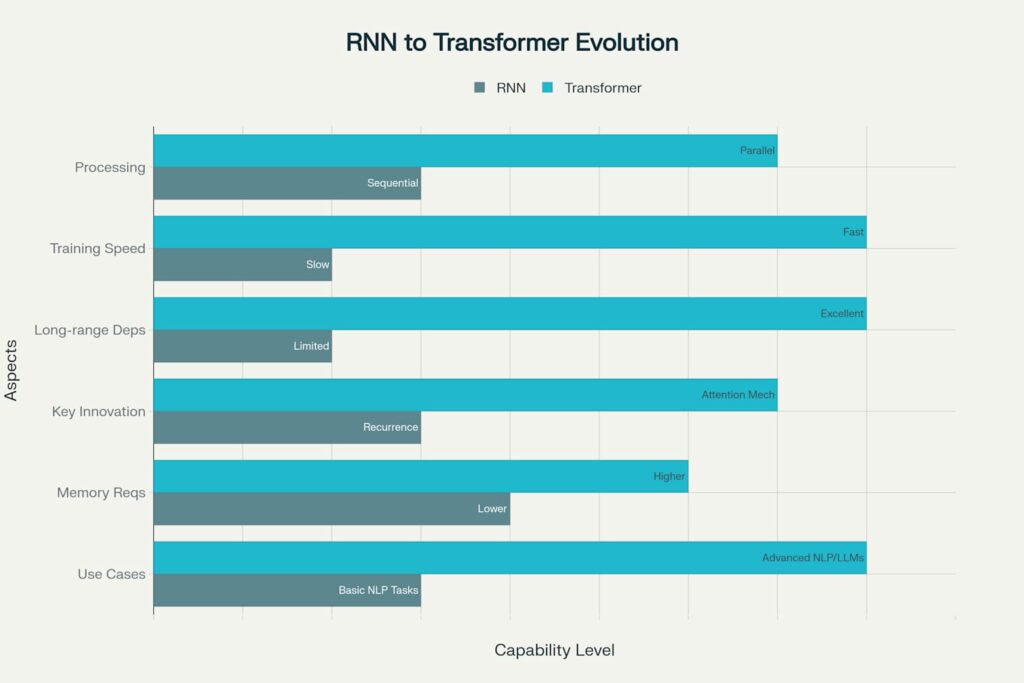

Transformers were first introduced in 2017 in the famous paper “Attention Is All You Need”. Do check out the paper after reading this article if you want to understand where it all started. Before transformers, text was typically processed word by word in a sequential manner, which made training slow and made it hard to connect words that sit far apart in a sentence. With the advent of transformers, entire text sequences could be processed simultaneously, while maintaining an understanding of how each word relates to every other word in the context.

A Step-by-Step Journey Through Text Processing

Imagine you’re trying to understand how a transformer reads and processes text, just like how you might read a sentence and understand its meaning. Let me walk you through this fascinating journey step by step, without getting lost in complex technical details.

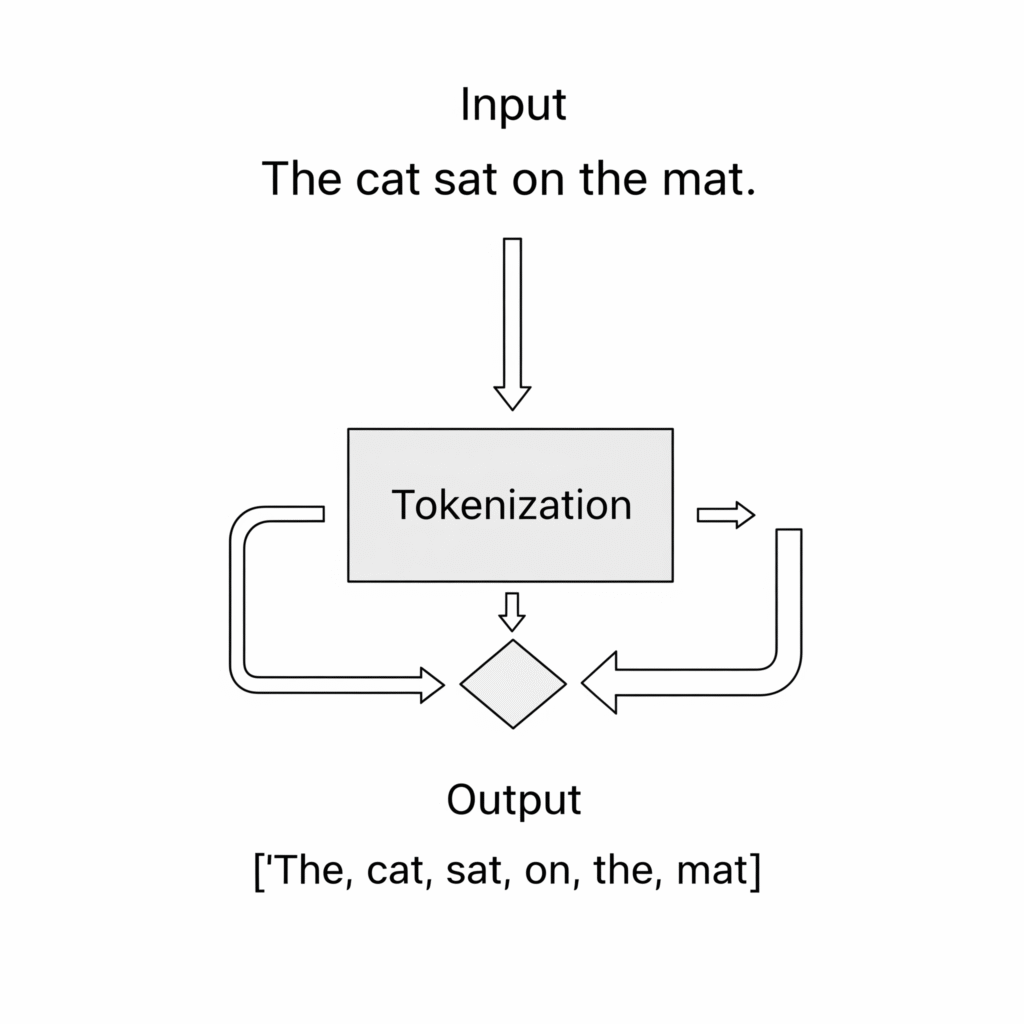

Step 1: Breaking Down the Text Into Digestible Pieces

When you give a transformer some text to work with, the first thing it does is break that text down into smaller, manageable pieces called tokens. For example, if you input the sentence “The cat sat on the mat,” the transformer splits this into individual tokens: [“The”, “cat”, “sat”, “on”, “the”, “mat”].

This process is called tokenization. It is crucial because transformers can’t work with raw text directly. Sometimes, these tokens might be whole words, but they can also be parts of words or even individual characters, depending on how the model was designed. The key point here is that the transformer needs to have a consistent way to handle any text you throw at it.
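To make the idea concrete, here is a minimal sketch of tokenization in Python. It assumes the simplest possible scheme (split on words, drop punctuation), whereas real models usually use learned subword tokenizers. The helper names `tokenize` and `build_vocab` are made up for this illustration.

```python
import re

def tokenize(text):
    """Toy tokenizer: pull out runs of word characters, dropping punctuation."""
    return re.findall(r"\w+", text)

def build_vocab(tokens):
    """Give each distinct token an integer ID, in order of first appearance."""
    vocab = {}
    for token in tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
    return vocab

tokens = tokenize("The cat sat on the mat,")
vocab = build_vocab(tokens)
token_ids = [vocab[t] for t in tokens]

print(tokens)     # ['The', 'cat', 'sat', 'on', 'the', 'mat']
print(token_ids)  # [0, 1, 2, 3, 4, 5]
```

Notice that “The” and “the” get different IDs here because this toy version is case-sensitive. How to handle details like that is exactly the kind of design choice a real tokenizer has to make.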

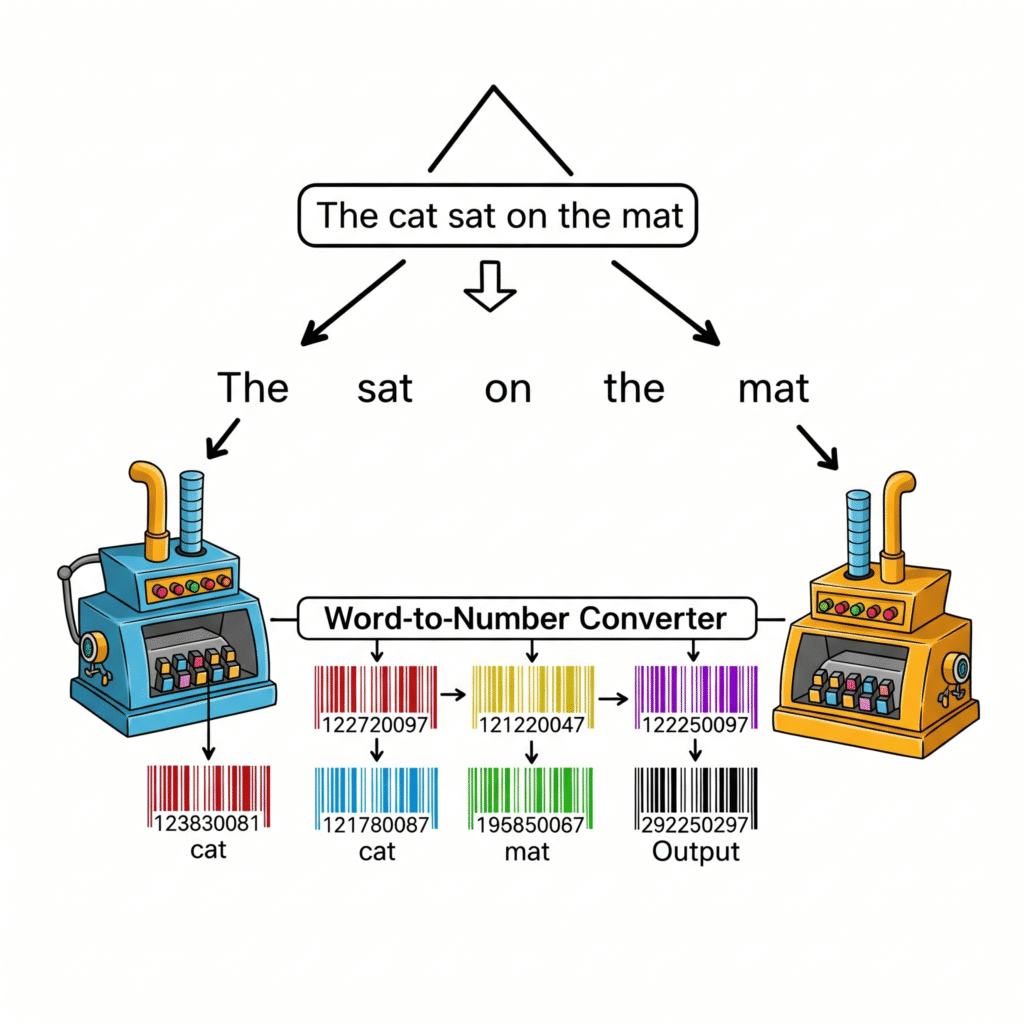

Step 2: Converting Words Into Numbers the Computer Can Understand

Once the text is broken into tokens, each token gets converted into a long list of numbers called an embedding. Think of this as giving each word a unique mathematical fingerprint that captures its meaning. For instance, words with similar meanings like “happy” and “joyful” would have similar number patterns, while completely different words like “cat” and “mathematics” would have very different patterns.

In the original Transformer, each embedding was 512 numbers long, and many modern models use even longer ones, creating a rich mathematical representation of each word’s meaning. The beautiful thing about this process is that the transformer learns these number representations during training, so words that are used in similar contexts end up with similar mathematical fingerprints.
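Here is a small sketch of what “similar fingerprints” means in practice. The 4-number embeddings below are hand-picked for illustration only (a real model learns them during training and uses far more dimensions). Cosine similarity is one common way to compare two embeddings.

```python
import math

# Hypothetical embeddings, invented for this example; not taken from any real model.
embeddings = {
    "happy":       [0.9, 0.8, 0.1, 0.0],
    "joyful":      [0.8, 0.9, 0.2, 0.1],
    "cat":         [0.0, 0.1, 0.9, 0.1],
    "mathematics": [0.1, 0.0, 0.1, 0.9],
}

def cosine_similarity(a, b):
    """Close to 1.0 means the vectors point the same way; close to 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

sim_similar = cosine_similarity(embeddings["happy"], embeddings["joyful"])
sim_different = cosine_similarity(embeddings["cat"], embeddings["mathematics"])

print(sim_similar > sim_different)  # True
```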

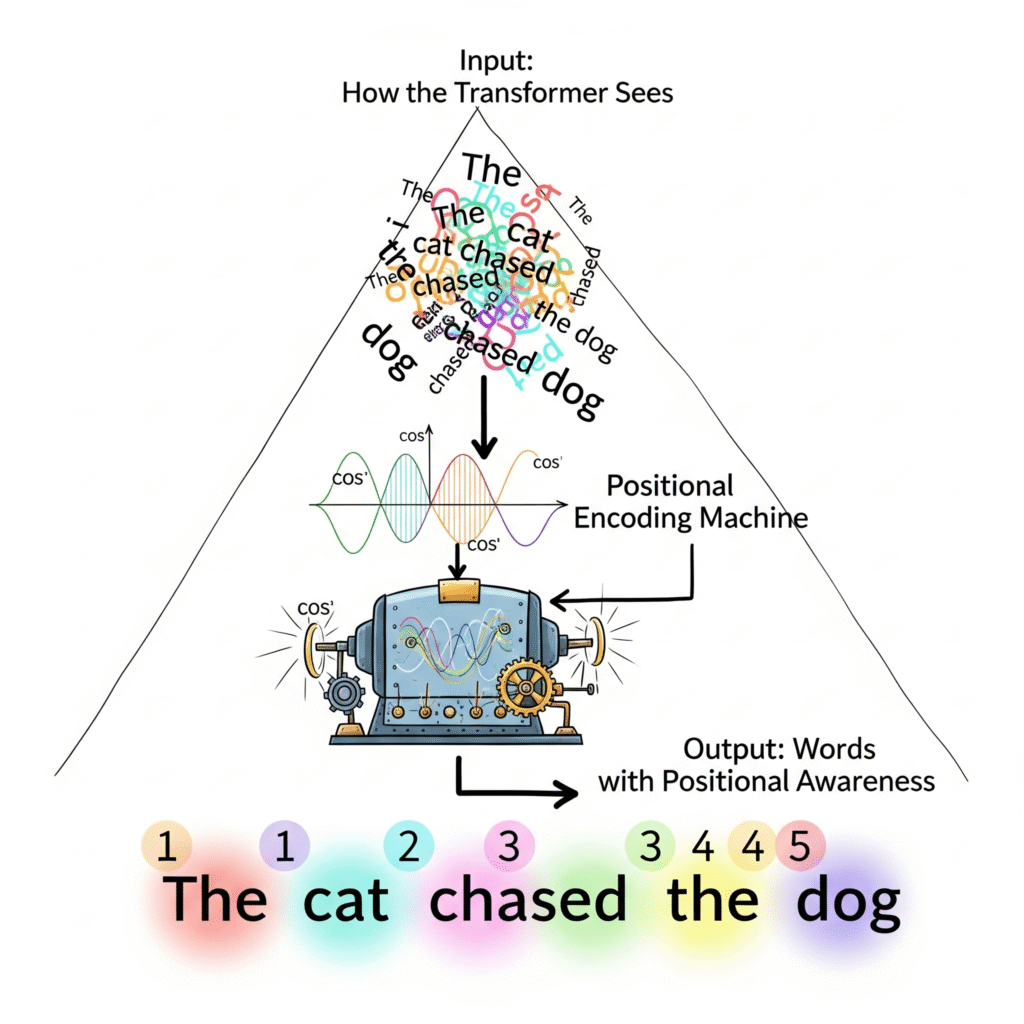

Step 3: Adding Position Information — Because Order Matters

Here’s where transformers face an interesting challenge. Unlike humans who naturally read from left to right and understand that word order matters, transformers initially see all the tokens at once without knowing which comes first. To solve this, they add special positional information to each token’s embedding.

Think of this like adding timestamps to each word. “The” gets a marker saying “I’m position 1,” “cat” gets “I’m position 2,” and so on. In the original Transformer, this positional encoding uses mathematical functions based on sine and cosine waves to create unique position signatures. This way, the transformer knows that “The cat chased the dog” means something different from “The dog chased the cat.”
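The sine and cosine idea can be sketched in a few lines. This follows the formula from the original paper, where even slots of the vector use sine and odd slots use cosine, each pair at its own frequency. The function names are made up for this example, and positions are counted from 0 rather than 1, as is usual in code.

```python
import math

def positional_encoding(position, d_model):
    """Sinusoidal position signature of length d_model for one position."""
    encoding = []
    for i in range(d_model):
        # Each sine/cosine pair shares one frequency; 2 * (i // 2) is the pair's even index.
        angle = position / (10000 ** (2 * (i // 2) / d_model))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding

def add_position(token_embeddings):
    """Add each position's signature to the embedding sitting at that position."""
    d_model = len(token_embeddings[0])
    return [
        [e + p for e, p in zip(emb, positional_encoding(pos, d_model))]
        for pos, emb in enumerate(token_embeddings)
    ]

pe0 = positional_encoding(0, 4)
pe1 = positional_encoding(1, 4)
print(pe0)  # [0.0, 1.0, 0.0, 1.0]

# The same embedding placed at two positions ends up with two different vectors.
shifted = add_position([[0.5, 0.5, 0.5, 0.5], [0.5, 0.5, 0.5, 0.5]])
print(shifted[0] != shifted[1])  # True
```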

Step 4: The Attention Mechanism — Where the Magic Happens!

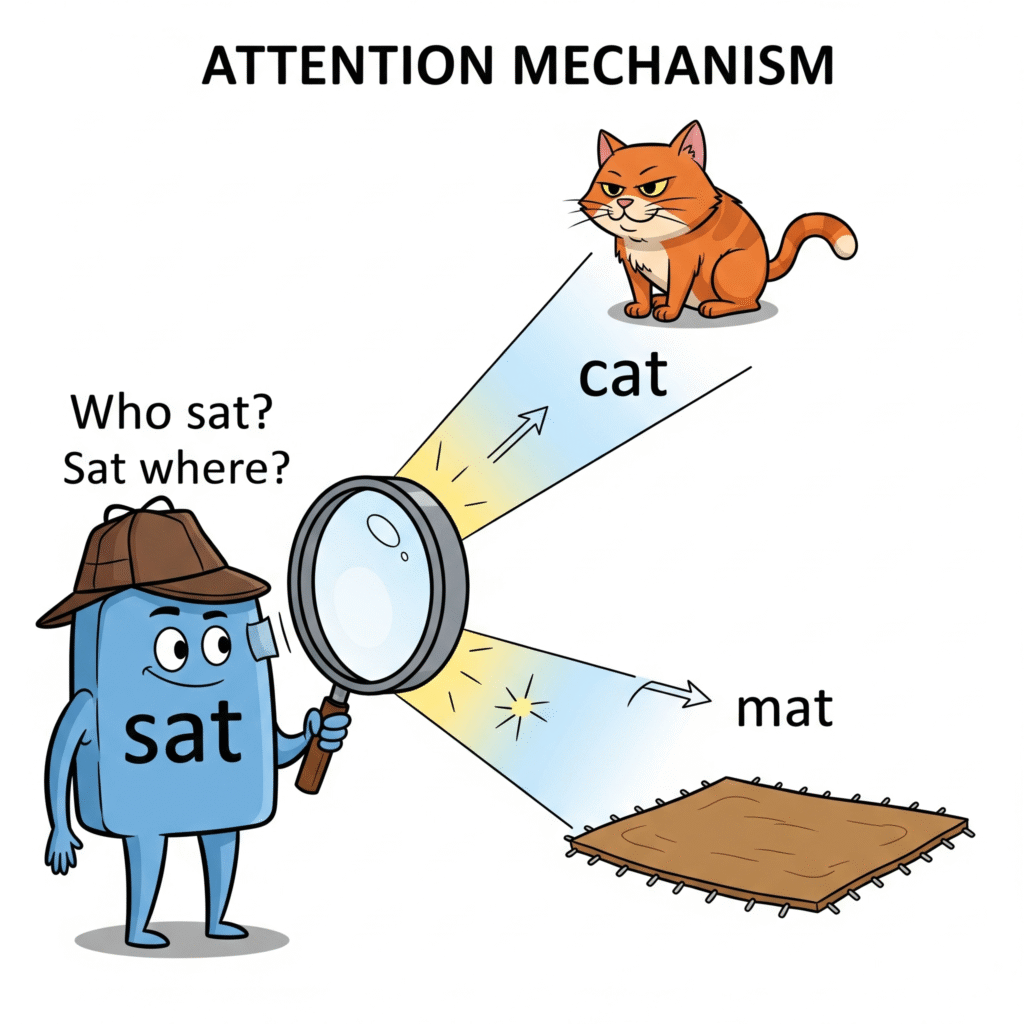

Now comes the most fascinating part of how transformers work: the attention mechanism! Imagine you’re reading a sentence and trying to understand what each word means in context. You naturally look at surrounding words to get the full picture. That’s exactly what the attention mechanism does, but in a much more sophisticated way.

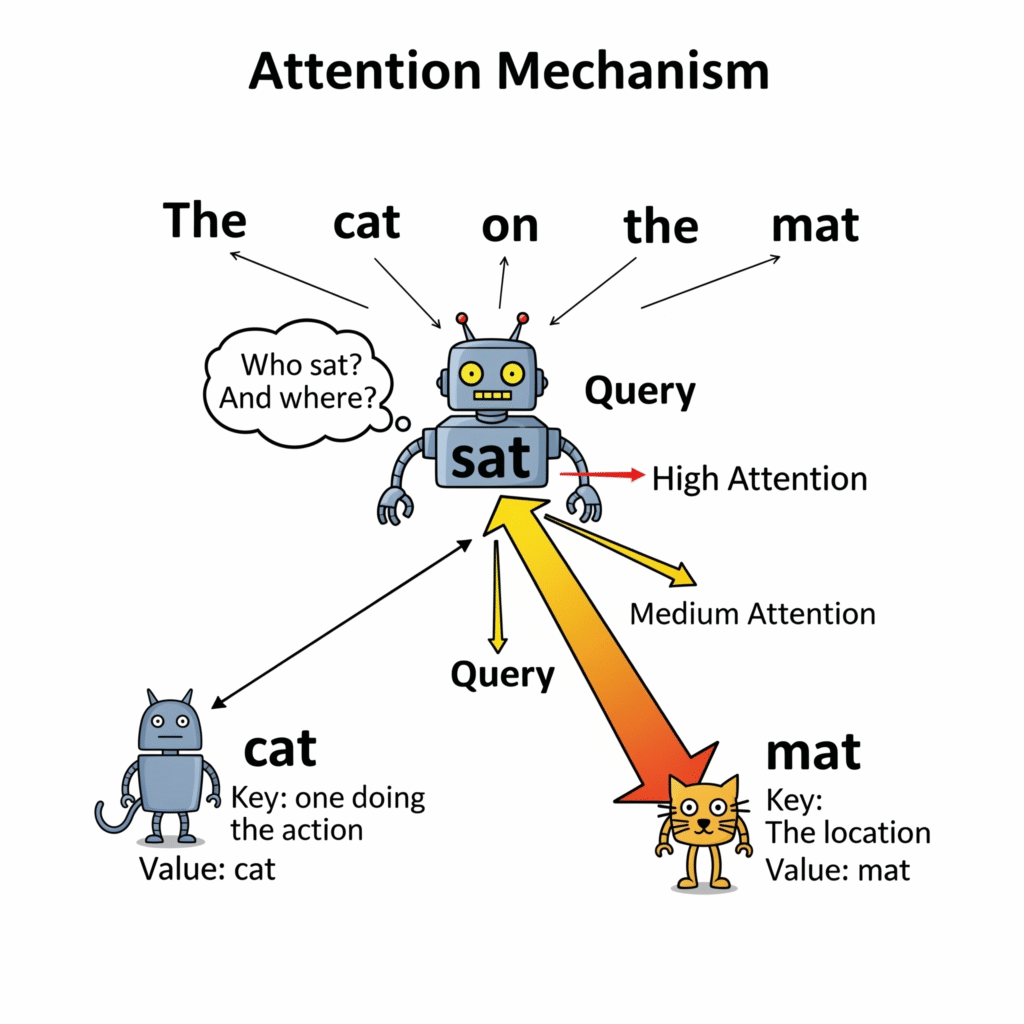

When processing each word, the transformer uses three vectors called Query, Key, and Value. Think of it like this: for every word, the Query asks “What should I pay attention to?”, the Key responds “I am this type of information,” and the Value provides “Here’s my actual content.” The transformer compares each word’s Query against every word’s Key to calculate how much attention that word should pay to every other word in the sentence, then uses those attention scores to blend the corresponding Values.

For example, when processing the word “sat” in “The cat sat on the mat,” the attention mechanism might determine that “sat” should pay high attention to “cat” (because that’s who’s doing the sitting) and moderate attention to “mat” (because that’s where the sitting happens). This happens simultaneously for every word in the sentence, creating a rich web of relationships.
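The “sat” example can be sketched with a toy version of scaled dot-product attention. The Query, Key, and Value vectors below are hand-picked so that “sat” lines up with “cat”. In a trained model, they would be computed from the embeddings using learned weight matrices, which this sketch leaves out.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    largest = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over plain lists of vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Compare this word's Query with every word's Key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Blend the Values according to the attention weights.
        blended = [sum(w * v[j] for w, v in zip(weights, values))
                   for j in range(len(values[0]))]
        outputs.append(blended)
        all_weights.append(weights)
    return outputs, all_weights

tokens = ["cat", "sat", "mat"]
# Hypothetical vectors, invented for this example.
queries = [[0.0, 1.0], [2.0, 0.0], [1.0, 1.0]]
keys    = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
values  = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

outputs, weights = attention(queries, keys, values)
sat_weights = dict(zip(tokens, weights[1]))
print(sat_weights)  # "cat" gets the largest weight, then "mat", then "sat" itself
```

Every row of `weights` is computed independently of the others, which is why all words can be processed at the same time.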

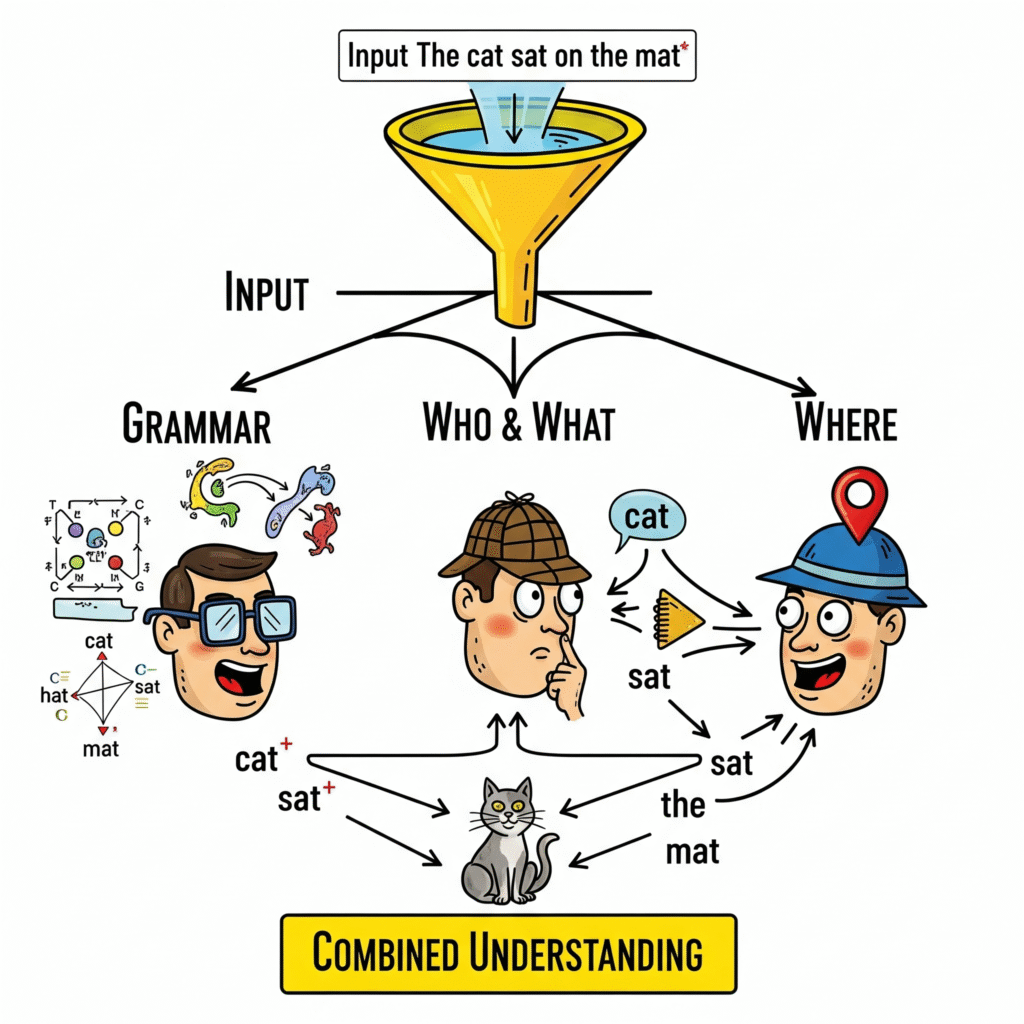

Step 5: Multi-Head Attention — Looking at Multiple Perspectives

To make this process even more powerful, transformers don’t just use one attention mechanism — they use several in parallel, called multi-head attention. Think of this like having multiple people read the same sentence, where each person focuses on different aspects. One “head” might focus on grammatical relationships, another on semantic meanings, and yet another on long-distance word connections.

All these different perspectives get combined to create a comprehensive understanding of how each word relates to all the others in the sentence. This is why transformers are so good at understanding context and nuance in language.
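Here is a simplified sketch of the multi-head idea: split each token’s vector into chunks, let each “head” run attention on its own chunk, then glue the results back together. It is only an approximation. Real models also give each head its own learned projections and apply a final output projection, both of which are omitted here, and the function names are made up for this example.

```python
import math

def softmax(scores):
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention; returns one blended vector per query."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

def multi_head_attention(x, num_heads):
    head_dim = len(x[0]) // num_heads
    head_outputs = []
    for h in range(num_heads):
        # Each head sees only its own slice of every token vector.
        chunk = [tok[h * head_dim:(h + 1) * head_dim] for tok in x]
        head_outputs.append(attention(chunk, chunk, chunk))
    # Concatenate the heads' results back into one vector per token.
    combined = []
    for t in range(len(x)):
        merged = []
        for head in head_outputs:
            merged.extend(head[t])
        combined.append(merged)
    return combined

# Three tokens with hypothetical 4-number vectors, split across 2 heads.
x = [
    [1.0, 0.0, 0.0, 1.0],
    [0.0, 1.0, 1.0, 0.0],
    [1.0, 1.0, 0.0, 0.0],
]
result = multi_head_attention(x, num_heads=2)
print(len(result), len(result[0]))  # 3 4
```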

Step 6: Processing Through Multiple Layers

The transformer doesn’t stop after just one round of attention. Instead, it passes the processed information through multiple layers, each one refining and improving the understanding. Think of this like reading a complex paragraph multiple times, and each time through you pick up new details and connections you might have missed before.

Each layer builds upon the work of the previous layers, gradually developing a more sophisticated understanding of the text. A typical transformer might have 6, 12, or even more layers, with each layer adding its own insights to the overall comprehension process.
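The stacking itself is simple to sketch: the output of one layer becomes the input of the next. The “layer” below is only a stand-in that blends each token with the average of the whole sequence. A real transformer layer combines attention with a small neural network and has its own learned weights. The names `make_toy_layer` and `run_stack` are invented for this illustration.

```python
def make_toy_layer(mix):
    """Build a stand-in layer; `mix` plays the role of that layer's own parameters."""
    def layer(x):
        n = len(x)
        mean = [sum(tok[j] for tok in x) / n for j in range(len(x[0]))]
        # Pull every token a little toward the sequence average (a crude "context" signal).
        return [[(1 - mix) * t + mix * m for t, m in zip(tok, mean)] for tok in x]
    return layer

def run_stack(x, layers):
    """Pass the token vectors through each layer in order."""
    for layer in layers:
        x = layer(x)
    return x

layers = [make_toy_layer(0.5) for _ in range(6)]  # 6 layers, as in the text above
out = run_stack([[1.0, 0.0], [0.0, 1.0]], layers)
print(len(out), len(out[0]))  # 2 2
```

The shape of the data never changes from layer to layer, which is what makes it possible to stack as many layers as you like.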

Step 7: Generating the Final Output

After all this processing, the transformer has developed a rich, contextual understanding of the input text. In the final step, this understanding gets converted into whatever output format is needed. For a language model like ChatGPT, this means predicting what word should come next. For a translation model, it means generating the equivalent sentence in another language.

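The transformer looks at all the processed information and calculates a probability for each possible next word in its vocabulary. In the simplest approach, called greedy decoding, it selects the word with the highest probability as its prediction; in practice, models often sample from these probabilities to produce more varied text. The chosen word is then added to the input and the process repeats, word by word, to generate entire sentences, paragraphs, or even longer texts.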
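Here is a minimal sketch of that last step, assuming a tiny four-word vocabulary and made-up scores (often called logits) for the word that follows “The cat sat on the”. Softmax turns the scores into probabilities, and the greedy choice simply picks the largest one.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    largest = max(logits)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - largest) for s in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical vocabulary and scores, invented for this example.
vocab = ["mat", "dog", "moon", "sofa"]
logits = [3.2, 0.5, -1.0, 2.1]

probs = softmax(logits)
next_word = vocab[probs.index(max(probs))]  # greedy choice: highest probability wins

print(next_word)  # mat
```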

Real-World Applications: Transformers Everywhere

Understanding transformers is crucial because they form the foundation of virtually all modern Large Language Models. Whether we’re talking about GPT-4, Claude, or any other state-of-the-art language model, they’re all built on transformer architecture principles.

The impact of transformers extends far beyond just language models. Their versatility has led to applications across numerous domains, fundamentally changing how we approach artificial intelligence. In natural language processing, transformers power everything from search engines to chatbots, from translation services to content generation tools. Beyond text, transformers have been adapted for computer vision (Vision Transformers), audio processing (speech recognition and generation), code generation (GitHub Copilot), and even scientific applications like protein structure prediction. This versatility demonstrates the fundamental power of the attention mechanism and the transformer architecture.

Conclusion

Understanding transformers provides you with the foundation to comprehend virtually any modern AI system you encounter. Whether you’re interested in using these tools in your work, developing new applications, or simply understanding the technology that’s reshaping our world, the principles we’ve covered here will serve as your guide. I found this material particularly useful for understanding how Transformers work; do check it out: link.

The journey from the sequential limitations of RNNs to the parallel power of transformers represents one of the most significant advances in artificial intelligence history. As you continue exploring the world of generative AI, remember that at the heart of these seemingly magical systems lies the elegant simplicity of attention — the ability to focus on what matters most, just as we do when we read, listen, and understand.

Hope you enjoyed reading the article. Be sure to give me a few claps if you found the article useful. In the next article, we’ll look at the different types of transformer architectures.