Welcome back to our comprehensive journey into the world of Large Language Model fine-tuning! If you’ve just joined me, I highly recommend starting with Part 1: Understanding LoRA and PEFT — The Ultimate Guide to LLM Fine-Tuning, where I laid the theoretical foundation for everything we’re about to explore today.

So you’ve got the theory down — now let’s talk about actually doing this stuff. Part 1 walked you through the concepts, but this time we’re getting into the nuts and bolts: the real decisions you’ll face, the headaches you’ll need to solve, and the tactics that actually work when you’re knee-deep in a fine-tuning project.

Here’s what we’re covering:

- Getting Your Data Ready: You’ll learn how to build instruction-response pairs that actually make sense, and how to make sure your dataset covers all the weird edge cases your model might run into.

- Picking the Right Approach: Should you go with LoRA? QLoRA? Full fine-tuning? I’ll break down how to decide based on what hardware you’ve got, how complex your task is, and what kind of performance you’re after.

- Dialing in the Settings: Learning rates, batch sizes, training epochs — these aren’t just random numbers. You’ll figure out how to pick values that get you solid results without wasting time or resources.

- Dealing with Problems: Two things will trip you up more than anything else: overfitting (when your model just memorizes stuff) and catastrophic forgetting (when it loses what it already knew). We’ll show you how to spot these issues early and fix them.

- Data Quality Matters: Turns out, a hundred really good examples beat a thousand mediocre ones. We’ll explain why that is and how to build datasets that actually move the needle.

- What’s Coming Next: The field keeps moving. We’ll touch on newer stuff like rsLoRA and DoRA, plus tools that are starting to automate parts of this process.

This isn’t just a list of instructions. We’re going to talk about why certain choices work better in different situations, so you can think through your own projects instead of just copying what someone else did. Got a single GPU in your laptop? We’ve got you covered. Running this at scale across multiple machines? That too.

When you finish reading, you’ll know how to take a general-purpose language model and turn it into something that does exactly what you need. Let’s get started.

Practical Implementation: From Theory to Practice

Let’s walk through what implementing fine-tuning actually looks like in practice.

Dataset Preparation: The Foundation of Success

The quality of your fine-tuning depends heavily on the quality of your dataset. For instruction tuning, your data should consist of instruction-response pairs that demonstrate the desired behaviour. Here’s what effective data looks like:

Instruction: "Summarize this medical report in layman's terms."

Input: [Technical medical report]

Output: [Clear, simple summary]

The key is ensuring your dataset represents the full range of scenarios your model will encounter. For a customer service bot, this means including various types of inquiries, complaint styles, and resolution paths. For a medical application, it means covering different conditions, symptoms, and treatment discussions.
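To make the instruction/input/output format above concrete, here’s a minimal Python sketch that stores each pair as a JSON Lines record and renders it into training text. The Alpaca-style `### Instruction:` template is an assumption for illustration; in practice, use whatever prompt or chat template your base model expects. The helper names (`build_example`, `render_prompt`, `to_jsonl`) are hypothetical.

```python
import json

# Hypothetical Alpaca-style template (an assumption); match your base model's format.
PROMPT_TEMPLATE = (
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{input}\n\n"
    "### Response:\n"
)


def build_example(instruction: str, input_text: str, output: str) -> dict:
    """Store one instruction-response pair with surrounding whitespace trimmed."""
    return {
        "instruction": instruction.strip(),
        "input": input_text.strip(),
        "output": output.strip(),
    }


def render_prompt(example: dict) -> str:
    """Render the text the model trains on: the prompt followed by the target output."""
    prompt = PROMPT_TEMPLATE.format(
        instruction=example["instruction"], input=example["input"]
    )
    return prompt + example["output"]


def to_jsonl(examples: list) -> str:
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(ex, ensure_ascii=False) for ex in examples)


examples = [
    build_example(
        "Summarize this medical report in layman's terms.",
        "[Technical medical report]",
        "[Clear, simple summary]",
    ),
]
jsonl = to_jsonl(examples)
print(render_prompt(examples[0]))
```

Keeping the raw fields separate from the rendered prompt means you can swap templates later without rebuilding the dataset.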

Choosing the Right Method

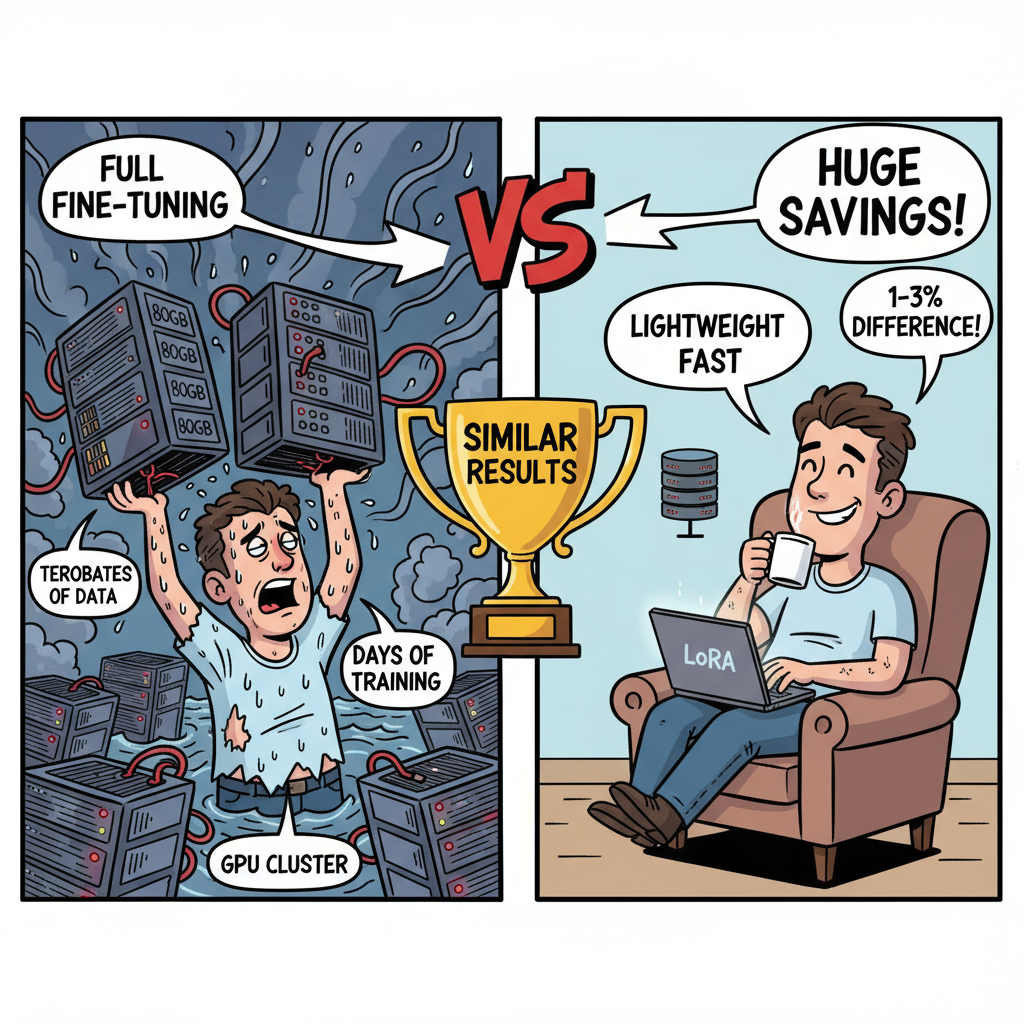

The choice between full fine-tuning and PEFT methods depends on several factors:

Resource Availability: If you have limited GPU memory (less than 24GB), QLoRA becomes essential for fine-tuning models larger than 7B parameters. With high-end GPUs (80GB+), you might consider LoRA or even full fine-tuning for smaller models.
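The GPU thresholds above follow from simple arithmetic on bytes per parameter. Here’s a rough sketch, assuming common rules of thumb: 2 bytes per parameter for 16-bit frozen weights, 0.5 bytes for 4-bit weights (QLoRA), and about 16 bytes per parameter for full fine-tuning with mixed-precision AdamW. It deliberately ignores activations, the KV cache, and framework overhead, so treat the numbers as lower bounds.

```python
# Rough bytes-per-parameter rules of thumb (assumptions). Activations, KV cache,
# CUDA context, and quantization overhead are all ignored.
BYTES_PER_PARAM = {
    "fp16_weights": 2.0,    # frozen base weights in 16-bit (LoRA)
    "4bit_weights": 0.5,    # frozen base weights in 4-bit (QLoRA)
    # Full fine-tuning: fp16 weights (2) + fp16 grads (2) + fp32 master weights (4)
    # + two fp32 Adam moment buffers (4 + 4)
    "full_ft_adamw": 16.0,
}


def estimate_gb(num_params: float, mode: str) -> float:
    """Approximate GPU memory in GB (1 GB = 1e9 bytes) for the given mode."""
    return num_params * BYTES_PER_PARAM[mode] / 1e9


for size_b in (7, 13, 70):
    n = size_b * 1e9
    row = ", ".join(f"{mode}: {estimate_gb(n, mode):.1f} GB" for mode in BYTES_PER_PARAM)
    print(f"{size_b}B -> {row}")
```

By this estimate, a 13B model’s 16-bit weights alone (26 GB) already exceed a 24 GB card, which is where 4-bit QLoRA comes in. Full fine-tuning of even a 7B model (about 112 GB) overflows a single 80 GB GPU unless you shard or offload optimizer states, so on one 80 GB card it’s realistic only for smaller models, roughly 5B parameters or fewer before activations are even counted.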

Task Complexity: Simple style adaptations work well with low-rank LoRA (rank 4–8), while complex domain shifts benefit from higher ranks (64–256).
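To see what rank means in terms of cost, recall that LoRA represents the update to a d_out × d_in weight with two small matrices, B (d_out × r) and A (r × d_in), so each adapted matrix adds r × (d_in + d_out) trainable parameters. The sketch below assumes a hypothetical 7B-style model with hidden size 4096 and 32 layers, adapting two square projections per layer (for example, the query and value projections).

```python
def lora_params_per_matrix(d_in: int, d_out: int, rank: int) -> int:
    """LoRA adds B (d_out x r) and A (r x d_in): r * (d_in + d_out) parameters."""
    return rank * (d_in + d_out)


def lora_params_total(hidden: int, num_layers: int, matrices_per_layer: int, rank: int) -> int:
    """Total trainable parameters when adapting square hidden x hidden projections."""
    return num_layers * matrices_per_layer * lora_params_per_matrix(hidden, hidden, rank)


BASE_PARAMS = 7_000_000_000  # assumed base model size

for rank in (4, 8, 64, 256):
    n = lora_params_total(hidden=4096, num_layers=32, matrices_per_layer=2, rank=rank)
    print(f"rank {rank:>3}: {n:,} trainable params ({100 * n / BASE_PARAMS:.2f}% of base)")
```

Under these assumptions, rank 8 trains about 4.2 million parameters (0.06% of the base model) and even rank 256 stays under 2%, so moving up the rank range is still far cheaper than updating every weight.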

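Performance Requirements: If you need absolute maximum performance and have the resources, full fine-tuning can still edge out PEFT methods, usually by a small margin (on the order of 1–3%). However, for most practical applications, this small difference isn’t worth the cost: full fine-tuning needs far more GPU memory for gradients and optimizer states, and every task leaves you with a full-size copy of the model instead of a small adapter.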

Hyperparameter Optimization

Successful fine-tuning requires careful hyperparameter selection:

Learning Rate: Start with 5e-5 to 1e-4 for LoRA. The learning rate should be lower than what you’d use for training from scratch, because you’re making subtle adjustments rather than dramatic changes.

Batch Size: Balance between GPU memory and training stability. Larger batches (32–64) provide more stable gradients but require more memory.
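If memory only allows small batches, a common workaround (not covered above) is gradient accumulation: run several small micro-batches, average their gradients, and take one optimizer step, so the effective batch size is micro-batch × accumulation steps × number of GPUs. This toy sketch on a one-parameter linear model with mean squared error checks that averaging equal-size micro-batch gradients reproduces the full-batch gradient; the data and function names are made up for illustration.

```python
import math


def mse_grad(w: float, xs: list, ys: list) -> float:
    """Gradient with respect to w of mean((w * x - y) ** 2) over the given examples."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def accumulated_grad(w: float, xs: list, ys: list, micro_batch: int) -> float:
    """Average the gradients of successive micro-batches, as gradient
    accumulation does before a single optimizer step."""
    grads = [
        mse_grad(w, xs[i:i + micro_batch], ys[i:i + micro_batch])
        for i in range(0, len(xs), micro_batch)
    ]
    return sum(grads) / len(grads)


def effective_batch_size(micro_batch: int, accumulation_steps: int, num_gpus: int = 1) -> int:
    return micro_batch * accumulation_steps * num_gpus


# Toy data: y = 3x + 1, evaluated at an arbitrary weight w = 0.5.
xs = [float(i) for i in range(32)]
ys = [3.0 * x + 1.0 for x in xs]
full_batch = mse_grad(0.5, xs, ys)
accumulated = accumulated_grad(0.5, xs, ys, micro_batch=4)  # 8 micro-batches of 4
print(full_batch, accumulated, math.isclose(full_batch, accumulated))
print(effective_batch_size(micro_batch=4, accumulation_steps=8))  # 32
```

The equality relies on equal-size micro-batches and a mean-reduced loss; frameworks typically get the same effect by dividing each micro-batch loss by the number of accumulation steps before backpropagating.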

Training Epochs: Most fine-tuning tasks converge within 3–10 epochs. Monitor validation loss closely; if it starts increasing while training loss decreases, you’re overfitting.
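The overfitting signal described above, validation loss rising while training loss keeps falling, is easy to check automatically after each evaluation. Here’s a minimal sketch; the `window` parameter and the loss values are illustrative assumptions, not recommended settings.

```python
def is_overfitting(train_losses: list, val_losses: list, window: int = 3) -> bool:
    """Return True if, over the last `window` evaluation intervals, training loss
    fell every time while validation loss rose every time."""
    if len(train_losses) <= window or len(val_losses) <= window:
        return False  # not enough history yet
    recent_train = train_losses[-(window + 1):]
    recent_val = val_losses[-(window + 1):]
    train_falling = all(later < earlier for earlier, later in zip(recent_train, recent_train[1:]))
    val_rising = all(later > earlier for earlier, later in zip(recent_val, recent_val[1:]))
    return train_falling and val_rising


# Illustrative per-epoch losses (made up).
train_losses = [2.10, 1.60, 1.30, 1.10, 0.90, 0.70]
val_losses = [2.00, 1.70, 1.55, 1.60, 1.70, 1.85]

for epoch in range(1, len(train_losses) + 1):
    flagged = is_overfitting(train_losses[:epoch], val_losses[:epoch])
    print(f"after epoch {epoch}: overfitting={flagged}")
```

The strict monotonic check keeps the example simple; a smaller `window` reacts sooner but is noisier, and with jittery evaluation curves you may want to smooth the losses (for example, with a moving average) first.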

Overcoming Common Challenges

Fine-tuning isn’t without its pitfalls. Understanding these challenges helps you navigate them successfully.

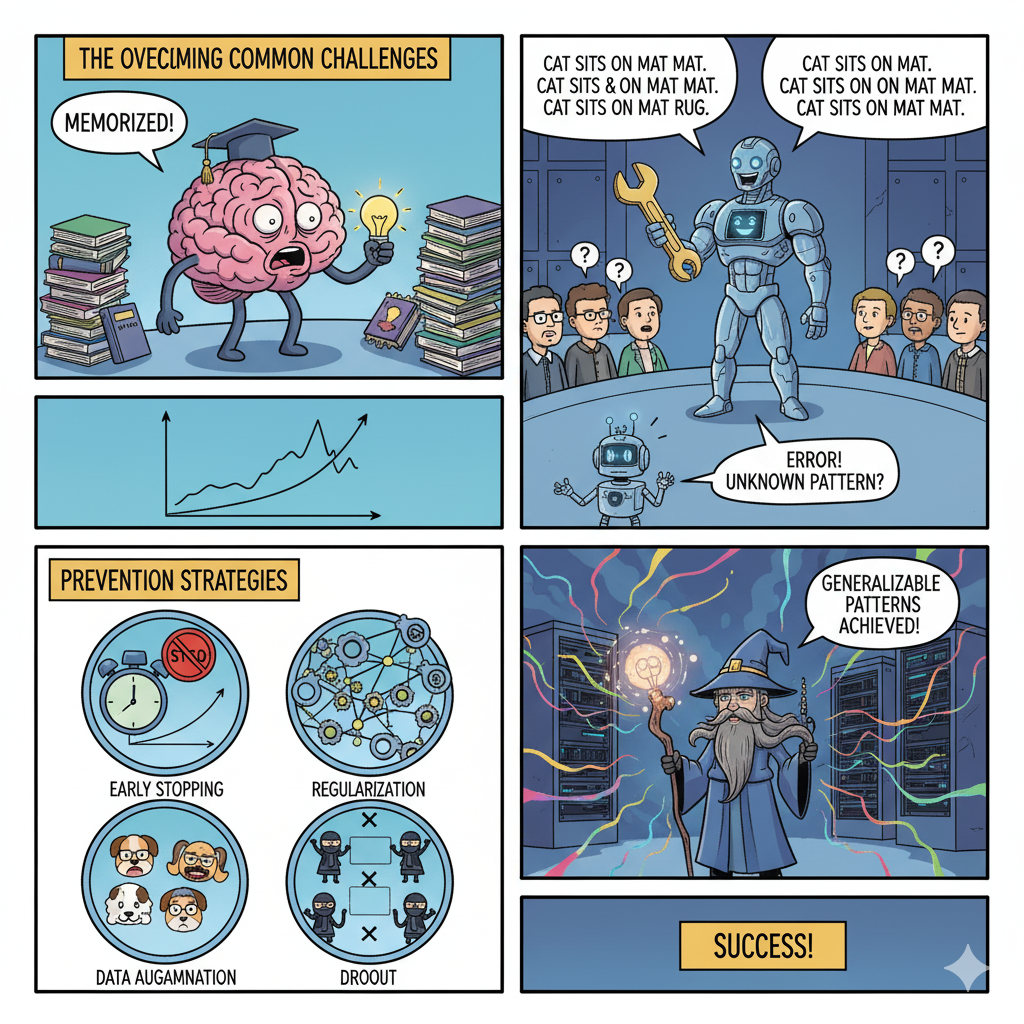

The Overfitting Trap

Overfitting occurs when your model memorises the training data instead of learning generalizable patterns. It’s like a student who memorises exam answers without understanding the concepts; they’ll ace that specific test but fail when questions are rephrased.

Signs of overfitting include:

- Training accuracy is near 100% while validation accuracy plateaus or decreases

- The model generates repetitive or overly specific responses

- Performance degrades on examples slightly different from the training data
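For the second sign, repetitive outputs, one cheap heuristic is Distinct-n: the number of unique n-grams divided by the total number of n-grams across a batch of generations. This sketch uses whitespace tokenization for simplicity, and the sample responses are invented; it only flags repetition, so the other signs still need a held-out evaluation set.

```python
def distinct_n(texts: list, n: int = 2) -> float:
    """Distinct-n: unique n-grams divided by total n-grams across all texts.
    Values near 1.0 mean varied wording; low values suggest repetition."""
    total = 0
    unique = set()
    for text in texts:
        tokens = text.lower().split()  # whitespace tokenization, for simplicity
        ngrams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
        total += len(ngrams)
        unique.update(ngrams)
    return len(unique) / total if total else 0.0


varied = [
    "the patient had a mild heart attack",
    "your card was charged twice by mistake",
]
repetitive = [
    "i am happy to help i am happy to help",
    "i am happy to help",
]
print(round(distinct_n(varied), 3))      # 1.0
print(round(distinct_n(repetitive), 3))  # 0.385
```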

Prevention strategies include:

- Early Stopping: Halt training when validation performance stops improving

- Regularization: Penalize large weight updates (for example, with weight decay) so the model can’t simply fit noise in the training data

- Dropout: Randomly disable neurons during training to prevent over-reliance on specific patterns

- Data Augmentation: Create variations of your training examples to improve generalization
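Early stopping is the easiest of these to automate. Here’s a minimal sketch, assuming you evaluate validation loss once per epoch: stop after `patience` evaluations without an improvement of at least `min_delta`, and remember which epoch was best so you can restore that checkpoint. The loss values are illustrative.

```python
class EarlyStopping:
    """Stop when validation loss hasn't improved by at least `min_delta`
    for `patience` consecutive evaluations; remember the best epoch."""

    def __init__(self, patience: int = 2, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.bad_evals = 0

    def step(self, epoch: int, val_loss: float) -> bool:
        """Record one evaluation; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.bad_evals = 0  # in a real run, save a checkpoint here
        else:
            self.bad_evals += 1
        return self.bad_evals >= self.patience


val_losses = [2.00, 1.70, 1.55, 1.60, 1.70, 1.85]  # illustrative, one per epoch
stopper = EarlyStopping(patience=2)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    if stopper.step(epoch, loss):
        stopped_at = epoch
        break
print(f"stopped at epoch {stopped_at}, best epoch {stopper.best_epoch} (loss {stopper.best_loss})")
```

If you train with Hugging Face `transformers`, its `EarlyStoppingCallback` implements the same idea through its `early_stopping_patience` argument.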

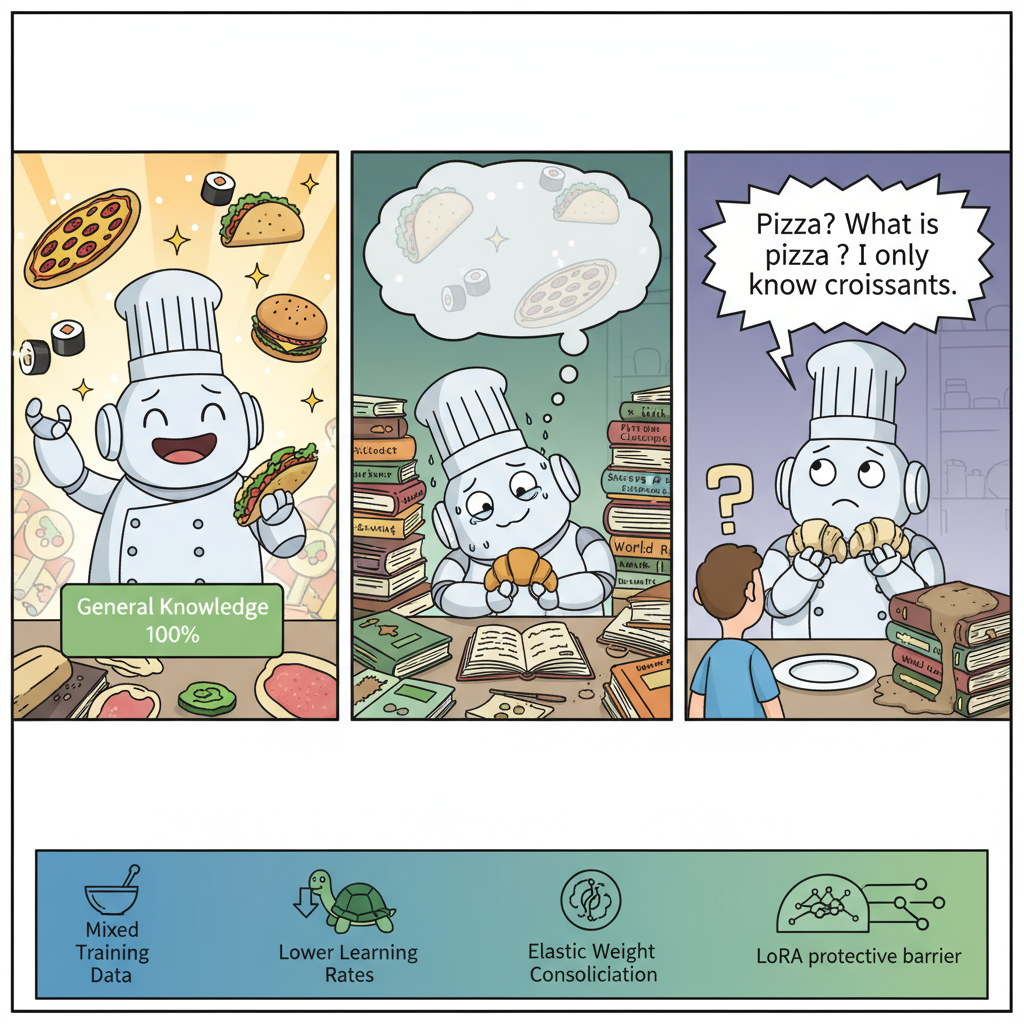

Catastrophic Forgetting: The Memory Challenge

One of the most significant challenges in fine-tuning is catastrophic forgetting: the model loses its general capabilities while learning new tasks.

Imagine learning a new language so intensively that you start forgetting your native tongue. That’s catastrophic forgetting in neural networks. The model becomes so specialised that it loses the broad understanding that made it valuable in the first place.

Some research suggests this problem intensifies with model size. Ironically, larger models that start with better general capabilities can experience more severe forgetting during fine-tuning. It’s like how a master chef might struggle more than a home cook when forced to completely change their cooking style.

Solutions to catastrophic forgetting include:

- Mixed Training Data: Include general examples alongside task-specific data

- Lower Learning Rates: Make gentler adjustments to preserve existing knowledge

- Elastic Weight Consolidation: Identify and protect parameters crucial for general tasks

- LoRA/PEFT Methods: By updating fewer parameters, these methods inherently reduce forgetting
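Elastic Weight Consolidation works by adding a quadratic penalty that pulls each parameter back toward its pre-fine-tuning value θ*, weighted by an estimate of how important that parameter was for the original capabilities: (λ/2) · Σ F_i (θ_i − θ*_i)². Here F_i is the diagonal of the Fisher information, commonly approximated by the mean squared gradient of the log-likelihood on general-domain data. The NumPy sketch below assumes you already have those per-example gradients as a flat array; the numbers are made up.

```python
import numpy as np


def diagonal_fisher(per_example_grads: np.ndarray) -> np.ndarray:
    """Approximate the diagonal Fisher information as the mean squared
    per-example gradient. Input shape (num_examples, num_params)."""
    return np.mean(per_example_grads ** 2, axis=0)


def ewc_penalty(params, anchor, fisher, lam=1.0) -> float:
    """(lam / 2) * sum_i F_i * (theta_i - theta*_i) ** 2"""
    return 0.5 * lam * float(np.sum(fisher * (params - anchor) ** 2))


def ewc_penalty_grad(params, anchor, fisher, lam=1.0):
    """Gradient of the penalty, added to the task-loss gradient during fine-tuning."""
    return lam * fisher * (params - anchor)


# Made-up example: three parameters, gradients from two general-domain examples.
anchor = np.array([1.0, -2.0, 0.5])  # theta*: weights before fine-tuning
grads = np.array([[2.0, 0.0, 0.1],
                  [-2.0, 0.0, -0.1]])
fisher = diagonal_fisher(grads)      # roughly [4.0, 0.0, 0.01]: param 0 matters most

move_important = anchor + np.array([0.5, 0.0, 0.0])
move_unimportant = anchor + np.array([0.0, 0.5, 0.0])
print(ewc_penalty(move_important, anchor, fisher))    # 0.5
print(ewc_penalty(move_unimportant, anchor, fisher))  # 0.0
```

Parameters with near-zero Fisher values are free to move, while important ones are anchored; λ controls the trade-off between learning the new task and preserving the old behavior. In a real training loop, you’d add this penalty to the task loss.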

Dataset Quality and Quantity Trade-offs

Not all data is created equal. A smaller dataset of high-quality, diverse examples often outperforms a larger dataset with repetitive or noisy samples.

Consider these quality indicators:

- Diversity: Does your dataset cover edge cases and variations?

- Accuracy: Are the labels and responses correct?

- Relevance: Does each example reflect the task your model will actually perform, and does it contribute something the others don’t?

- Balance: Are all categories or scenarios adequately represented?
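Accuracy needs human review, but diversity and balance can be partly checked with code. Here’s a minimal sketch that flags exact duplicates after normalizing case and whitespace, and reports per-category counts. It assumes each example carries a hypothetical `category` label; catching near-duplicates (paraphrases) would need fuzzy or embedding-based matching, which this sketch doesn’t attempt.

```python
from collections import Counter


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivially different copies match."""
    return " ".join(text.lower().split())


def audit_dataset(examples: list):
    """Return (duplicate index pairs, per-category counts)."""
    seen = {}
    duplicates = []
    for idx, ex in enumerate(examples):
        key = (normalize(ex["instruction"]), normalize(ex["output"]))
        if key in seen:
            duplicates.append((seen[key], idx))  # (first occurrence, repeat)
        else:
            seen[key] = idx
    category_counts = Counter(ex["category"] for ex in examples)
    return duplicates, category_counts


def underrepresented(counts: Counter, min_share: float = 0.2) -> list:
    """Categories whose share of the dataset falls below `min_share` (an assumed threshold)."""
    total = sum(counts.values())
    return sorted(c for c, n in counts.items() if n / total < min_share)


data = [
    {"instruction": "Where is my order?", "output": "Let me check the tracking number.", "category": "shipping"},
    {"instruction": "where is my  order?", "output": "Let me check the tracking number.", "category": "shipping"},
    {"instruction": "I was charged twice.", "output": "I'll refund the duplicate charge.", "category": "billing"},
    {"instruction": "Cancel my subscription.", "output": "Your subscription has been cancelled.", "category": "account"},
]
dups, counts = audit_dataset(data)
print(dups, dict(counts), underrepresented(counts, min_share=0.3))
```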

Future Directions and Emerging Techniques

The field of efficient fine-tuning continues to evolve rapidly.

Advanced LoRA Variants

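Recent research has introduced improvements such as:

- rsLoRA: Rank-stabilized LoRA, which scales the adapter update by α/√r instead of α/r so that higher ranks train stably instead of having their updates shrink

- DoRA: Weight-decomposed low-rank adaptation, which splits each pretrained weight into a magnitude and a direction and applies LoRA to the direction; it is reported to improve on standard LoRA at a similar parameter count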

- LoFT: LoRA that mimics full fine-tuning behavior
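rsLoRA and DoRA are both small changes to LoRA’s math. Standard LoRA scales the low-rank update B·A by α/r, which shrinks updates as the rank grows; rsLoRA scales by α/√r instead. DoRA keeps a trainable magnitude vector m and normalizes the direction W₀ + scale·B·A column by column. Here’s a NumPy sketch of both under those assumptions; the shapes, α = 16, and random values are illustrative, and this isn’t any library’s implementation.

```python
import numpy as np


def lora_scale(alpha: float, rank: int) -> float:
    """Standard LoRA: the update B @ A is multiplied by alpha / r."""
    return alpha / rank


def rslora_scale(alpha: float, rank: int) -> float:
    """Rank-stabilized LoRA: multiply by alpha / sqrt(r) instead."""
    return alpha / np.sqrt(rank)


def dora_weight(W0, A, B, m, scale):
    """DoRA-style weight: magnitude m times the column-normalized direction
    W0 + scale * B @ A. Shapes: W0 (d, k), B (d, r), A (r, k), m (k,)."""
    V = W0 + scale * (B @ A)
    return m * (V / np.linalg.norm(V, axis=0, keepdims=True))


for r in (8, 64, 256):
    print(f"r={r:>3}  LoRA scale={lora_scale(16, r):.4f}  rsLoRA scale={rslora_scale(16, r):.4f}")

rng = np.random.default_rng(0)
d, k, r = 6, 4, 2
W0 = rng.normal(size=(d, k))        # frozen pretrained weight
A = rng.normal(size=(r, k))
B = np.zeros((d, r))                # B starts at zero, as in LoRA
m = np.linalg.norm(W0, axis=0)      # magnitude initialized from W0's column norms

W_start = dora_weight(W0, A, B, m, rslora_scale(16, r))       # equals W0 at init
B_trained = rng.normal(size=(d, r))                           # pretend B has been trained
W_tuned = dora_weight(W0, A, B_trained, m, rslora_scale(16, r))  # column norms stay m
```

Recent versions of Hugging Face PEFT expose both ideas as the `use_rslora` and `use_dora` options on `LoraConfig`.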

Multi-Task and Modular Approaches

The future points toward modular AI systems where multiple LoRA adapters can be combined dynamically. Imagine a model that seamlessly switches between medical, legal, and financial expertise based on context, all using the same base model with swappable adapters.
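Swappable adapters work because the base weights never change: each domain only needs its own small A and B matrices. This hypothetical `MultiAdapterLinear` class sketches one layer with several named LoRA adapters sharing a frozen weight; the adapter names and random values stand in for adapters trained separately on each domain.

```python
import numpy as np


class MultiAdapterLinear:
    """Hypothetical layer: one frozen base weight shared by named LoRA adapters."""

    def __init__(self, W0: np.ndarray):
        self.W0 = W0          # frozen base weight, shape (d_out, d_in)
        self.adapters = {}    # name -> (A, B, scale)

    def add_adapter(self, name: str, A: np.ndarray, B: np.ndarray, alpha: float) -> None:
        """Register an adapter with A of shape (r, d_in) and B of shape (d_out, r)."""
        rank = A.shape[0]
        self.adapters[name] = (A, B, alpha / rank)

    def forward(self, x: np.ndarray, adapter=None) -> np.ndarray:
        """Base output x @ W0.T, plus the selected adapter's low-rank update."""
        y = x @ self.W0.T
        if adapter is not None:
            A, B, scale = self.adapters[adapter]
            y = y + scale * ((x @ A.T) @ B.T)
        return y

    def merged_weight(self, adapter: str) -> np.ndarray:
        """Fold an adapter into a standalone weight: W0 + scale * B @ A."""
        A, B, scale = self.adapters[adapter]
        return self.W0 + scale * (B @ A)


rng = np.random.default_rng(1)
d_out, d_in, r = 5, 3, 2
layer = MultiAdapterLinear(rng.normal(size=(d_out, d_in)))
for name in ("medical", "legal", "financial"):
    # Random A and B stand in for adapters trained separately on each domain.
    layer.add_adapter(name, rng.normal(size=(r, d_in)), rng.normal(size=(d_out, r)), alpha=16)

x = rng.normal(size=(4, d_in))        # a batch of 4 inputs
y_medical = layer.forward(x, "medical")
y_legal = layer.forward(x, "legal")   # same base weights, different adapter
```

Applying an adapter on the fly (`forward`) or merging it into the weight (`merged_weight`) gives the same output. On-the-fly application lets one loaded base model serve a different adapter per request, while merging removes the extra matrix multiplications at inference time.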

Automated Fine-Tuning

Emerging tools automate the selection of hyperparameters and method choice. These systems analyse your task and data, automatically selecting between LoRA, QLoRA, or other methods while optimising parameters for best performance.
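At their simplest, these tools run a search loop: propose a configuration, train and evaluate it, keep the best. Here’s a minimal random-search sketch over learning rate, rank, and epochs. `toy_objective` is a hypothetical stand-in for a real fine-tune-and-evaluate run, and the search ranges are assumptions; libraries such as Optuna add smarter samplers and early pruning of bad trials on top of this idea.

```python
import math
import random


def random_search(objective, num_trials: int = 20, seed: int = 0):
    """Sample hyperparameters at random and keep the best-scoring set.
    `objective` returns a validation loss (lower is better)."""
    rng = random.Random(seed)
    best_cfg, best_loss = None, math.inf
    for _ in range(num_trials):
        cfg = {
            "learning_rate": 10 ** rng.uniform(-5, -3.5),  # log-uniform, about 1e-5 to 3e-4
            "rank": rng.choice([4, 8, 16, 32, 64]),
            "epochs": rng.randint(1, 5),
        }
        loss = objective(cfg)
        if loss < best_loss:
            best_cfg, best_loss = cfg, loss
    return best_cfg, best_loss


def toy_objective(cfg: dict) -> float:
    """Hypothetical stand-in for 'fine-tune and evaluate': it simply prefers
    lr near 1e-4, rank 16, and 3 epochs. Replace with a real training run."""
    return (abs(math.log10(cfg["learning_rate"]) + 4)
            + abs(math.log2(cfg["rank"]) - 4) / 4
            + abs(cfg["epochs"] - 3) / 3)


best_cfg, best_loss = random_search(toy_objective, num_trials=50)
print(best_cfg, round(best_loss, 3))
```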

Conclusion

Fine-tuning large language models has evolved from a resource-intensive process accessible only to tech giants into a practical tool available to organizations of all sizes. Through innovations like LoRA and QLoRA, we’ve witnessed a paradigm shift where adapting multi-billion parameter models no longer requires supercomputer-level resources.

The journey from understanding basic fine-tuning concepts to implementing production-ready systems might seem daunting, but remember: every expert was once a beginner. Start small, experiment with proven techniques like LoRA, and gradually build your expertise. The democratization of AI customization means that innovative applications are no longer limited by computational resources but only by imagination and determination.

A Few Key Points to Keep in Mind

- PEFT methods like LoRA typically recover most of full fine-tuning’s performance while training only a small fraction of the parameters (often under 1%).

- Quality data matters more than quantity — focus on diverse, relevant examples.

- Start with established configurations (rank=8, alpha=16) and adjust based on results.

- Monitor for overfitting and catastrophic forgetting — they’re common but preventable.

- Consider QLoRA for large models when GPU memory is limited.

The era of accessible AI customization has arrived. Whether you’re building specialized medical assistants, financial advisors, or customer service systems, the tools and techniques covered here provide the foundation for transforming general-purpose language models into powerful, domain-specific solutions. The only question remaining is: what will you build?